Last year, Microsoft announced Teams Intelligent Cameras, a feature for Microsoft Teams Rooms that purported to use AI (artificial intelligence) to improve the meeting room video experience for remote attendees.

Intelligent Cameras originally promised to deliver “an equitable meeting experience” using unique technologies: AI-powered speaker tracking, multiple video streams that place each in-room participant in their own video frame, and people (face) recognition.

In the announcement, Microsoft promised to use facial movements and gestures to understand who is speaking, and to use facial recognition to group people’s names in the meeting roster, all delivered with Microsoft’s own technology.

Unfortunately, whilst Microsoft promised these features, Teams Intelligent Cameras has launched to GA (General Availability) and under-delivers on both promise and execution. This is bad news for everyone buying Microsoft Teams Rooms.

What has Microsoft announced?

In its GA announcement at Inspire 2022, and in the Microsoft Teams Blog post covering the details, Microsoft re-framed Intelligent Cameras as a feature delivered in partnership with select OEM vendors.

The big change is that now Intelligent Cameras “harness OEM designed AI capabilities” from Logitech, Neat, Jabra, and Poly. That’s the same set of OEM-designed features Microsoft announced in July 2021 would be coming to Teams by the end of 2021, separately from the Intelligent Cameras feature.

Let that sink in for a minute; Microsoft has gone from aiming to “deliver an equitable experience” using “Microsoft AI facial recognition technology” that they will make “available to OEMs”… to grouping the people-framing capabilities of several vendors under a single banner.

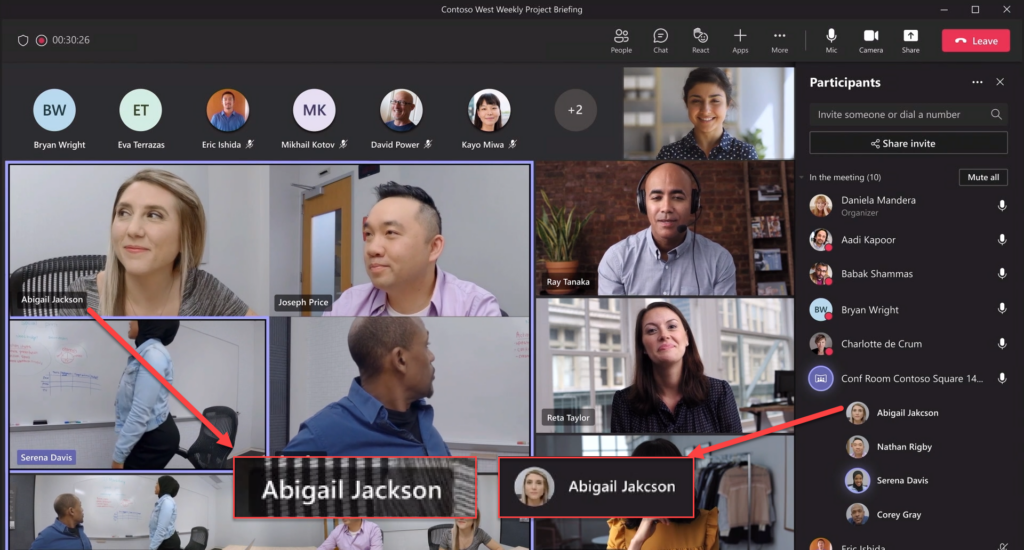

And although Microsoft announced it as GA at Inspire, the headline feature, showing a separate video feed for each in-room attendee, isn’t expected to arrive for “some months”, and there is no word on when person recognition will arrive.

It clearly isn’t GA: the documentation doesn’t appear to be available (at the time of writing), and the feature might not even be advanced enough to show in beta, because the demo video is a mock-up, given away by a misspelled name in the meeting roster.

Reading between the lines, what Microsoft is saying is that if you have bought kit such as Neat Symmetry, you can use its “Auto Framing” feature with Teams, just as you can with Zoom, under the banner of “Intelligent Cameras”.

Microsoft should have kept the feature inside Teams

Despite what meeting room vendors would like you to believe, auto-framing and grouping of people isn’t a ground-breaking innovation.

If you’ve used a Snapchat filter, or one of the many phone apps that detect a face and its landmarks (the key points on a human face) and then overlay an effect on it, you’ve seen AI at work in real time. Chipset vendors like Qualcomm have been including AI accelerators in their systems-on-a-chip (SoCs) for years, allowing developers to use trained models in their applications and deliver extremely fast and capable facial recognition.

And if you’ve used Teams with Together mode, or even background blur on a PC, you’ve also seen similar technology at work: Teams needs the AVX (or AVX2) extensions to detect the person, their face, and their outline so that the background can be replaced. Face detection (what’s needed to determine where people are) can run in around 3-5 ms, even on an elderly CPU.
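To put that 3-5 ms figure in context, here is a minimal sketch of the per-frame arithmetic. The frame rates are illustrative assumptions (15 and 30 fps are common for meeting video); the function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process each frame at a given frame rate."""
    return 1000.0 / fps


def detection_share(detect_ms: float, fps: float) -> float:
    """Fraction of each frame's time budget spent on face detection."""
    return detect_ms / frame_budget_ms(fps)


# Illustrative figures: the ~3-5 ms detection time quoted above, at common frame rates.
for fps in (15, 30):
    for detect_ms in (3.0, 5.0):
        share = detection_share(detect_ms, fps)
        print(f"{fps} fps: {detect_ms:.0f} ms of a {frame_budget_ms(fps):.1f} ms budget ({share:.0%})")
```

At 30 fps, detection at 3-5 ms uses roughly 9-15% of each frame's 33 ms budget, and pipelines typically don't need to run detection on every frame when a tracker fills in between detections.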

Simply put, Microsoft could have delivered the feature in-house, and it would have provided all MTR devices with the capability in a consistent way.

OEM vendors’ solutions aren’t very intelligent

Most Microsoft Teams Rooms OEM vendors are providing last-generation face detection (i.e. not as good as an app on your phone or a modern laptop) to support Intelligent Cameras.

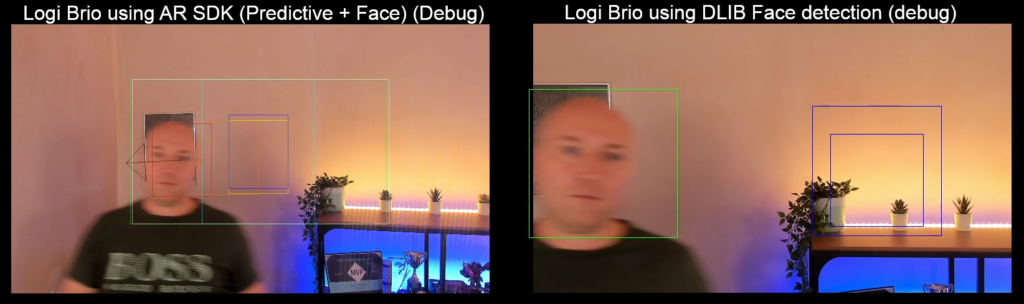

Take, for example, a Microsoft Teams Room built to Microsoft’s “Enhanced Microsoft Teams Room” standard; in my example, the vendor camera can’t match the auto-framing achieved with open-source libraries or with basic AI-accelerated samples.

I used one of the cameras on Microsoft’s list, from a vendor that was also listed in the September 2021 announcement.

The auto-framing and speaker-tracking features on the MTR vendor device are extremely slow compared with the AI-predictive tracking from the Nvidia AR SDK, and with basic face detection and tracking using the open-source Dlib library. The CPU-based Dlib version performed nearly as well as the GPU model, even though the CPU (similar to the one in an MTR) was processing all three 4K video streams.

Although the vendor device is slow in my example, that doesn’t show the worst of how the vendor solutions behave.

Using similar kit last week, I ran a hybrid event for executives of an international company. Everything went mostly to plan, apart from the slow speaker tracking; and at one point, the vendor’s face detection misrecognized the projector as a face (it had two mics on top and looked a little like the robot from Short Circuit), much to the hilarity of the remote attendees.

How Microsoft should have delivered Intelligent Cameras

The lesson is: don’t leave it to the vendors to deliver the feature. The $1,000+ cameras provide worse tracking than a $100 Logitech Brio paired with software-based face detection to crop the video feed. The Nvidia AR SDK, running on a GPU similar to those in many laptops, provides group-based framing, i.e. the functionality needed to deliver Intelligent Cameras’ separate feeds.
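Group framing itself is conceptually simple. Below is a minimal sketch, assuming face boxes have already been produced by some detector (Dlib, the Nvidia AR SDK or the camera itself): take the union of the boxes, pad it, and widen it to the output aspect ratio. The `Box` type, the `group_frame` function, the margin value and the 16:9 output are illustrative assumptions, not any vendor’s implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: int  # left edge, in pixels
    y: int  # top edge, in pixels
    w: int  # width, in pixels
    h: int  # height, in pixels


def group_frame(faces, frame_w, frame_h, margin=0.5, aspect=16 / 9):
    """One crop box around every detected face, padded and fitted to `aspect`."""
    if not faces:
        return Box(0, 0, frame_w, frame_h)  # nobody detected: show the whole room
    left = min(f.x for f in faces)
    top = min(f.y for f in faces)
    right = max(f.x + f.w for f in faces)
    bottom = max(f.y + f.h for f in faces)
    # Pad by a fraction of the largest face so heads and shoulders stay in shot.
    pad = int(margin * max(max(f.w, f.h) for f in faces))
    left, top, right, bottom = left - pad, top - pad, right + pad, bottom + pad
    w, h = right - left, bottom - top
    # Widen or heighten the box (about its centre) to match the output aspect ratio.
    if w / h < aspect:
        new_w = round(h * aspect)
        left -= (new_w - w) // 2
        w = new_w
    else:
        new_h = round(w / aspect)
        top -= (new_h - h) // 2
        h = new_h
    # If the box is larger than the frame, shrink it uniformly (edge faces may be cut).
    scale = min(1.0, frame_w / w, frame_h / h)
    w, h = int(w * scale), int(h * scale)
    # Shift the box back inside the frame without resizing it.
    left = max(0, min(left, frame_w - w))
    top = max(0, min(top, frame_h - h))
    return Box(left, top, w, h)
```

For example, `group_frame([Box(1000, 800, 200, 200), Box(2400, 900, 180, 180)], 3840, 2160)` returns a roughly 16:9 box covering both people in a 4K frame. A real implementation would also smooth the box over time so the framing doesn’t jump every time someone moves; that part is omitted here.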

What Microsoft should have done is allow vendors to offer their own innovations, while providing a consistent experience within Microsoft Teams.

Rather than slicing video feeds up at the camera, vendors should have provided face-location metadata so that the slicing could be performed by the Microsoft Teams service layer, using a method similar to Together mode to take each person from a video feed and compose them into individual frames. Even if Microsoft considered that too costly, it could have performed the cropping client-side against the full video feed, delivered at 4K if needed.
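To illustrate how little data that metadata needs, here is a sketch of a hypothetical per-frame message: a timestamp plus one box per tracked face, serialized as JSON and sent alongside the untouched video stream. The field names and the JSON encoding are assumptions for illustration only; this is not a published Microsoft or OEM format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class FaceBox:
    track_id: int  # stable per-person ID, so each person keeps the same tile
    x: int  # pixel coordinates within the full-resolution frame
    y: int
    w: int
    h: int


@dataclass
class FrameMetadata:
    timestamp_ms: int  # presentation timestamp of the video frame it describes
    frame_w: int
    frame_h: int
    faces: List[FaceBox] = field(default_factory=list)


def encode(meta: FrameMetadata) -> str:
    """Serialize metadata to compact JSON to travel alongside the video stream."""
    return json.dumps(asdict(meta), separators=(",", ":"))


def decode(payload: str) -> FrameMetadata:
    """Rebuild the metadata on the receiving side (client or service layer)."""
    raw = json.loads(payload)
    faces = [FaceBox(**f) for f in raw.pop("faces")]
    return FrameMetadata(faces=faces, **raw)
```

With one face, `encode` produces roughly 100 bytes per frame, which is negligible next to a 4K video stream; the receiver can then crop however it likes.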

Ultimately, the feature takes a single camera feed and splits it into multiple streams. Combining the source video feed with metadata giving the location of each face allows the decoded video to be cropped and arranged easily. In my example, the metadata is shown as boxes drawn over the real-time feeds; apart from the predictive data (i.e. the arrow near my face on the left, showing where it thinks I’m moving to), it’s effectively just the coordinates of each person.
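A minimal sketch of the crop-and-arrange step, assuming the metadata supplies an `(x, y, w, h)` box per face in full-frame pixel coordinates. The function names, the head-and-shoulders sizing factor (3x the face height) and the near-square grid are illustrative assumptions. The sketch only plans rectangles; with a decoded frame held as a NumPy array, each crop would be `frame[top:top + h, left:left + w]`, resized into its tile (for example with OpenCV’s `cv2.resize`).

```python
import math


def person_crop(x, y, w, h, frame_w, frame_h, scale=3.0, aspect=16 / 9):
    """Head-and-shoulders crop around one face box, clamped to the source frame."""
    crop_h = min(int(h * scale), frame_h)
    crop_w = min(round(crop_h * aspect), frame_w)
    # Centre the crop one face-height below the top of the face box, so the
    # face sits in the upper half of the tile with room for shoulders.
    cx, cy = x + w // 2, y + h
    left = max(0, min(cx - crop_w // 2, frame_w - crop_w))
    top = max(0, min(cy - crop_h // 2, frame_h - crop_h))
    return left, top, crop_w, crop_h


def gallery_layout(n, out_w=1920, out_h=1080):
    """Tile rectangles (x, y, w, h) for n people in a near-square grid."""
    if n == 0:
        return []
    cols = math.ceil(math.sqrt(n))
    rows = math.ceil(n / cols)
    tile_w, tile_h = out_w // cols, out_h // rows
    return [((i % cols) * tile_w, (i // cols) * tile_h, tile_w, tile_h) for i in range(n)]


def plan_gallery(faces, frame_w, frame_h):
    """Pair each face's crop rectangle with its tile in the output gallery."""
    ordered = sorted(faces)  # left to right by x, roughly as people sit in the room
    tiles = gallery_layout(len(ordered))
    return [(person_crop(*face, frame_w, frame_h), tile) for face, tile in zip(ordered, tiles)]
```

Because all of this runs on already-decoded video, it could equally sit in the Teams service layer or on the receiving client.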

Next, Microsoft could have either loaded a pre-computed model of registered faces, based on the meeting roster, onto the source MTR for local processing, or used the Azure Face API. Recognition is the piece of the puzzle that determines whose face has been detected.

Naturally, to keep it simple, only the meeting’s attendees would be included in the model, so that, for example, twins who work for the same company but in different departments wouldn’t produce false positives.
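A minimal sketch of roster-restricted matching, assuming each face has already been turned into a numeric descriptor by a recognition model. Dlib’s face recognition model, for example, produces 128-dimensional descriptors, and its documentation suggests treating a Euclidean distance below 0.6 as the same person; the `identify` function and the tiny 3-D vectors in the usage example are hypothetical, for illustration only.

```python
import math


def identify(descriptor, roster, threshold=0.6):
    """Return the closest attendee within `threshold`, or None if nobody matches.

    `roster` maps attendee name -> reference descriptor and should contain
    ONLY this meeting's invitees: that keeps the search small and means a
    look-alike from elsewhere in the company can never be matched.
    """
    best_name, best_dist = None, threshold
    for name, reference in roster.items():
        dist = math.dist(descriptor, reference)  # Euclidean distance
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name
```

For example, with `roster = {"Alice": (0.1, 0.2, 0.3), "Bob": (0.9, 0.8, 0.7)}`, `identify((0.12, 0.21, 0.29), roster)` returns `"Alice"`, while a descriptor far from everyone returns `None` and the face simply stays unlabelled.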

Because recognition doesn’t need to run against every video frame, it doesn’t need acceleration or offloading to a cloud service like the Face API. Using the AVX2 extensions on the MTR, you can recognize a face in around 1-2 seconds while also encoding and decoding video streams, running real-time face detection, and running the UI; each result can then be tagged in the metadata against its detected face, allowing a meeting room with around 10 people to have a fully tagged roster in around 20 seconds. If vendors used the capabilities of the modern ARM system-on-chip devices they should be putting into cameras and MTR on Android devices, it could be around 50 times faster, even on the cheapest CPUs.
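A sketch of why recognition needn’t run per frame, assuming a tracker that gives each detected face a stable ID: recognition runs once per new ID and the result is cached, so its cost is paid once per person rather than 30 times a second. The `RosterTagger` class, the `recognize` callback and `per_frame_limit` are hypothetical; a real pipeline would run `recognize` on a background thread, whereas this sketch calls it synchronously for clarity.

```python
from typing import Callable, Dict, Iterable, Optional


class RosterTagger:
    """Run the slow recognition step once per tracked face, not once per frame."""

    def __init__(self, recognize: Callable[[int], Optional[str]], per_frame_limit: int = 1):
        self.recognize = recognize  # hypothetical: track ID -> attendee name or None
        self.per_frame_limit = per_frame_limit  # cap work per frame to protect encode/decode
        self.labels: Dict[int, Optional[str]] = {}

    def on_frame(self, track_ids: Iterable[int]) -> Dict[int, Optional[str]]:
        """Tag up to `per_frame_limit` new faces; return labels for the visible faces."""
        ids = list(track_ids)
        pending = [t for t in ids if t not in self.labels]
        for t in pending[: self.per_frame_limit]:
            self.labels[t] = self.recognize(t)
        return {t: self.labels.get(t) for t in ids}


def roster_tagging_time(people: int, seconds_per_face: float) -> float:
    """Time to tag everyone when faces are recognized one at a time."""
    return people * seconds_per_face
```

`roster_tagging_time(10, 2.0)` gives the ~20 seconds estimated above for a 10-person room; faces that are already tagged cost nothing on later frames.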

Is there a good reason why Microsoft offloaded responsibility for Intelligent Cameras?

Microsoft hasn’t shared why it changed direction, and naturally, that is its decision to make. But it would be interesting to understand why. Was it fear of a misrecognition causing fallout (as with the Tay chatbot), or perhaps pressure from OEM vendors, so that you need to buy new equipment to use the functionality?

We may never know, but what we do know is that Intelligent Cameras has a lot of potential, though only if Microsoft delivers it as a first-party feature included in all Teams Rooms. Only then can it provide an equitable meeting experience to customers who’ve already invested heavily in Microsoft Teams Rooms systems.

Steve! Great post; it echoes a lot of the thoughts I’ve had over the past couple of years as a Teams Rooms junkie.

There’s a blog post out today you might want to look at:

https://techcommunity.microsoft.com/t5/microsoft-teams-blog/what-s-new-for-microsoft-teams-rooms-teams-devices-and/ba-p/3631513

Looks like the feature is now going to be called “IntelliFrame” and they’re going for a hybrid approach, so even if you don’t have the advertised OEM stuff, Teams will still be able to do it:

“In Teams Rooms outfitted with a camera capable of running advanced OEM AI features, remote attendees see an IntelliFrame view of the room’s participants. Poly, Logi, Jabra, and Neat are partners with this technology available this year.

For Teams Rooms equipped with all other existing camera types, IntelliFrame delivers an enhanced video gallery experience by leveraging the latest Microsoft-built AI models running in the Microsoft cloud, which process the video stream from the room. This experience will be available in early 2023.”

Would love to know your thoughts!

Very well pointed out!

Isn’t this a classic example of “you don’t know what you don’t know yet”?

Indeed, MSFT was quite flexible with its definition of what a smart camera is, and where should the smartness be?

* In the OEM’s camera, like a Huddly camera with a mighty Intel Movidius Myriad X chip?

Good for the OEM, but hardly in the interest of MSFT, right?

* In the local client?

There should already be enough CPU/GPU juice in every MTR on Windows, but what about MTR on Android?

Their Snapdragons can do amazing things (as your Snapchat example shows), but are the OEMs smart enough to build something comparable?

* Back in the cloud? Sure, it would be their wet dream that everybody must use (and pay for?) their cloud services.

I am by no means smart enough to know whether such an approach is feasible without a healthy dose of on-prem power.

Being equally at home in MS Teams, Zoom and Webex Room Systems, I sometimes find it hilarious to see how they watch each other, each claiming its version is much better, only to adopt the other guy’s solution once the dust has settled.

AUDIO is similarly interesting, but here the old paradigm is still valid: VC vendors mostly have no clue about audio, so it’s no wonder there is much less innovation there, even though audio needs far less CPU juice, which should make smart things much easier to do. OK, I’ll stop here…

Regarding MTR on Android: I struggled to find specifications for what each MTR on Android vendor puts inside its kit; if you’ve found a good public source, I’ll gladly take a look. From my collection of ARM-based single-board computers, some with AI coprocessors and some without, there’s a variety of options beyond the Snapdragon chips.

I’ve been learning about the underlying technology: an Intel Movidius Myriad X (in Intel NCS2 USB form) is hanging out of one of my lab devices and is fairly useful for testing OpenCV. The Google Coral equivalent is hanging out of another lab device and (somewhat) replicates the capability to do this on a higher-end Snapdragon SoC. The price of these, even in USB-stick form, is low enough that every Teams-certified room camera should have this capability rather than it being a premium feature. Frankly, though, a $5 ESP32-AI MCU can perform face detection in real time using compiled code, and so can older ARM CPUs (32-bit or 64-bit) or 10-year-old Intel x64 CPUs.

Excellent analysis. After testing the Poly E70 for a week we gladly packed it up again and returned it to the vendor. For almost $3k we simply expected more. It’s a shame Microsoft dropped the ball on this.

What has made me laugh (or cry) is the vendor approach: “Let’s do a demo of our kit”, and you’ll see a device, maybe like the one you mention, doing face detection, with the vendor rep using *cardboard cut-outs of people* to “prove how well it works”. At the same time, I’ve seen customers add “our people” picture walls to meeting rooms, which naturally confuse these devices. Yet they wouldn’t confuse, say, your Surface Pro, or a Kinect on an Xbox 360.

I’ve NOT tested the E70, but I suspect that, because the cameras aren’t placed side by side so that it could infer three dimensions, it would have the same issue, unless it is actually performing much more complex operations, such as tracking eye-landmark movement like blinking. I do love that the Poly E70 states it has 20-megapixel dual 4K sensors, which is a little confusing given that a 4K frame is about 8.3 megapixels. Are they adding together the sensors’ combined pixel count to make it sound better?