Sometimes the universe hands you exactly what you need at exactly the right moment. After spending years helping organizations navigate the complexities of Microsoft 365 and watching the rapid evolution of AI assistants, I’ve been increasingly curious about running capable AI models locally. Not because I don’t trust cloud services—though there are certainly valid reasons to be cautious—but because there’s something appealing about having your own AI assistant that doesn’t require an internet connection and doesn’t send your queries to someone else’s servers.

That’s where Jan comes in. If you haven’t heard of Jan yet, think of it as the open-source equivalent of Claude Desktop or similar AI chat applications, but with one crucial difference: it can run entirely on your local machine. Combined with the right tools, it lets you build a powerful, privacy-focused AI assistant for your Microsoft environment.

Why Local AI Makes Sense

Before we dive into the technical setup, let’s talk about why you might want to run AI locally in the first place. Four reasons come up again and again when people weigh local AI against cloud-based services:

- Data sovereignty and control: When you send a query to ChatGPT, Claude, or any other cloud-based AI service, your data travels over the internet and gets processed on someone else’s infrastructure. For many organizations—especially those in regulated industries—this raises legitimate questions about data privacy and compliance.

- Internet dependency: Cloud AI services require a stable internet connection. If your connection goes down or if the service experiences an outage (and we’ve all seen how those can cascade), you lose access to your AI assistant entirely.

- Cost control: Subscription costs for premium AI services can add up, especially for organizations with multiple users. Today, when you pay (for example) Anthropic $20/month, they’re paying their compute provider much more than that for the compute you consume, and the compute provider is paying way, way more than that in hardware capital expenses. The AI providers are artificially subsidizing us all, and that can’t continue forever.

- Customization: With local AI, you have complete control over the model, its behavior, and how it integrates with your existing tools and workflows.

Understanding the Model Context Protocol

Without going into a full, boring explanation of the Model Context Protocol (MCP), the way to think of it is that it’s like USB-C for AI: a standard connector that lets any compatible AI client plug into any compatible data source. MCP servers connect to specific data sources (such as GitHub, Microsoft 365, Figma, or whatever) and provide tools that LLMs can load and use. The LLM decides which tools to call based on the prompts that you give it. Each MCP server can list the tools it has, and the server authors can provide descriptions to help the model know which tools to call. For example, one of the tools I wrote for work has this description: “Create and start an email restore job for the selected set of users. Restores all items (calendar, tasks, email, etc) from the most recent snapshot.”
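
To make the idea of a tool description concrete, here is a minimal Python sketch of the catalog an MCP server hands back when a client asks what tools it offers. It follows the JSON-RPC 2.0 `tools/list` exchange described in the MCP specification; the tool name `start_email_restore` and its `user_ids` argument schema are hypothetical, and only the description text comes from the tool mentioned above.

```python
import json

# Hypothetical tool definition. The field names (name, description, inputSchema)
# follow the shape of an MCP tools/list result; the tool itself is illustrative.
RESTORE_TOOL = {
    "name": "start_email_restore",  # hypothetical name
    "description": (
        "Create and start an email restore job for the selected set of users. "
        "Restores all items (calendar, tasks, email, etc) from the most recent snapshot."
    ),
    "inputSchema": {  # JSON Schema describing the tool's arguments
        "type": "object",
        "properties": {
            "user_ids": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["user_ids"],
    },
}


def handle_request(raw: str) -> str:
    """Answer a JSON-RPC tools/list request with this server's tool catalog."""
    request = json.loads(raw)
    if request.get("method") != "tools/list":
        # -32601 is the standard JSON-RPC "method not found" error code.
        response = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    else:
        response = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "result": {"tools": [RESTORE_TOOL]},
        }
    return json.dumps(response)


if __name__ == "__main__":
    reply = json.loads(handle_request('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
    for tool in reply["result"]["tools"]:
        print(tool["name"], "-", tool["description"])
```

The model never sees the server’s code, only each tool’s name, description, and argument schema, which is why a clear, specific description matters so much for getting the right tool called.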

There are two ways that an MCP server can communicate with the outside world. One uses HTTP; servers using this transport are typically called “remote MCP” servers. The other uses standard input and output (stdio) on your local machine only (“local MCP”). Of course, a local MCP server may still connect to other resources over the network, but remote MCPs have the advantage that you can often use them without installing anything locally.
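
For a local (stdio) server, the client launches the server as a child process and exchanges JSON-RPC messages over its standard input and output. Per the MCP specification, each message is a single line of UTF-8 JSON terminated by a newline. The Python sketch below shows only that framing step; `ping` and `tools/list` are real MCP methods, but the helper names `write_message` and `read_messages` are illustrative, and an in-memory buffer stands in for the process pipe.

```python
import io
import json


def write_message(stream: io.TextIOBase, message: dict) -> None:
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps without indent never emits a raw newline (newlines inside
    # strings are escaped as \n), so each message stays on one line.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream: io.TextIOBase):
    """Yield each JSON-RPC message from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:  # skip blank lines
            yield json.loads(line)


if __name__ == "__main__":
    # An in-memory buffer stands in for the server process's stdin/stdout pipe.
    pipe = io.StringIO()
    write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
    write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
    pipe.seek(0)
    for msg in read_messages(pipe):
        print(msg["id"], msg["method"])
```

A remote server carries the same JSON-RPC messages over HTTP instead, which is why the client only needs an address rather than a program to launch.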

There are many MCP servers available from marketplaces like mcp.so; if you spend a few minutes browsing there you’ll see a wealth of options. Microsoft has its own set of MCP servers, including tools for creating and managing Azure objects, working with Dataverse data, and querying the huge Microsoft Learn documentation set.

The beauty of combining MCP with a local LLM is that most of the work is done on your machine. An MCP server may retrieve data from the cloud, but the model runs locally, so your prompts and its responses aren’t sent to an AI provider, and many MCP servers perform purely local operations in a browser or filesystem.

Setting Up Your Local AI Environment

The Jan installation process is refreshingly simple compared to some other AI solutions I’ve worked with. Head to the Jan.ai page, download the appropriate version for your operating system, and install it like any other application.

Once Jan is installed, you’ll need to download a model. The Jan application will lead you through this step. For initial testing, I recommend starting with the Jan-v1-4B-Q4_K_M model. It’s reasonably capable while being small enough to run efficiently on most modern hardware. You can always experiment with larger, more capable models later once you’ve confirmed everything is working correctly.
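
A quick back-of-the-envelope calculation shows why a 4B model at Q4_K_M quantization fits on most modern hardware: weight memory is roughly parameters × bits per weight ÷ 8. The sketch assumes about 4 billion parameters (the “4B” in the name) and an average of roughly 4.8 bits per weight for Q4_K_M; both figures are approximations, and the context window needs extra memory on top of the weights.

```python
def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (10**9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


# Assumptions: ~4 billion parameters and ~4.8 bits per weight on average for
# Q4_K_M. Real model files vary a little, and the KV cache for the context
# window needs additional memory beyond the weights.
PARAMS = 4e9
Q4_K_M_BITS = 4.8
FP16_BITS = 16

if __name__ == "__main__":
    print(f"Q4_K_M: ~{weight_memory_gb(PARAMS, Q4_K_M_BITS):.1f} GB")
    print(f"FP16:   ~{weight_memory_gb(PARAMS, FP16_BITS):.1f} GB")
```

Under these assumptions the quantized weights come to about 2.4 GB, less than a third of the roughly 8 GB an unquantized 16-bit copy would need.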

After installing Jan, take a moment to verify that it’s working properly by asking it a simple question, such as the average distance between Earth and the Moon. This baseline test ensures that your local AI environment is functioning before you add the complexity of MCP integration.

Enabling MCP Integration

Here’s where things get interesting. By default, Jan doesn’t attempt to load MCP servers at all—it’s a feature that needs to be explicitly enabled. This makes sense from a security and simplicity perspective, but it does mean a few extra configuration steps.

Open the Jan client and navigate to Settings in the lower-left corner. Select MCP Servers, and you’ll see the list of default MCP servers that Jan supports, including Serper for web searches and Filesystem for querying, reading, and writing local files.

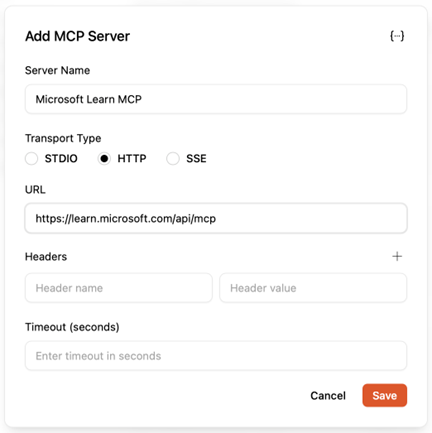

Let’s add the Microsoft Learn MCP server. Click the small “+” icon on the MCP Servers settings page to add a new server configuration. When the Add MCP Server dialog appears, fill it out as shown in Figure 1, then click Save. There’s nothing to download or install because this particular MCP server is hosted remotely.
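
The practical difference between a remote and a local server entry can be sketched in a few lines of Python. This is a generic illustration, not Jan’s configuration schema: the `McpServerConfig` class and its field names are hypothetical, the Learn endpoint URL is an assumption to confirm against Microsoft’s documentation, and `@modelcontextprotocol/server-filesystem` with `/path/to/folder` is just an example of a locally launched server.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class McpServerConfig:
    """Generic MCP server entry. Field names are illustrative, not Jan's schema."""

    name: str
    transport: str                 # "http" (remote) or "stdio" (local)
    url: Optional[str] = None      # remote: the endpoint the client talks to
    command: Optional[str] = None  # local: the program the client launches
    args: list[str] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if the entry lacks what its transport needs."""
        if self.transport == "http":
            if not self.url:
                raise ValueError(f"{self.name}: a remote server needs a URL")
        elif self.transport == "stdio":
            if not self.command:
                raise ValueError(f"{self.name}: a local server needs a command")
        else:
            raise ValueError(f"{self.name}: unknown transport {self.transport!r}")


if __name__ == "__main__":
    # Remote: just a name and an endpoint, nothing to install.
    # The URL is assumed here; confirm it against Microsoft's documentation.
    learn = McpServerConfig(
        name="microsoft-learn",
        transport="http",
        url="https://learn.microsoft.com/api/mcp",
    )
    # Local: the client starts this program and talks to it over stdio.
    filesystem = McpServerConfig(
        name="filesystem",
        transport="stdio",
        command="npx",
        args=["-y", "@modelcontextprotocol/server-filesystem", "/path/to/folder"],
    )
    for server in (learn, filesystem):
        server.validate()
        print(f"{server.name}: ok ({server.transport})")
```

A remote entry needs only a name and an address, which is why adding the Learn server involves no installation step.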

Testing Your New Server

Now you can try a simple test. Click New Chat in the lower-left side of the Jan window, select a model (which is easy; you’ve only got one at this point), and try a query such as “Search Microsoft Docs and summarize the steps required to set the preferredDataLocation for a SPO site”. By default, Jan asks for permission every time a model wants to use a tool; when the tool permissions dialog for the Learn MCP server appears, click Always Allow so you aren’t prompted again.
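
The ask-first behavior behind that dialog can be modeled as a small permission gate. This is a hypothetical Python sketch, not Jan’s code: the answer labels, the `ToolPermissions` class, and the choice to remember “Always Allow” per server and tool are all assumptions (Jan’s exact scoping may differ), and `microsoft_docs_search` is assumed to be the Learn server’s search tool name.

```python
from typing import Callable

# Possible answers from the permission prompt (hypothetical labels).
ALLOW_ONCE = "allow_once"
ALWAYS_ALLOW = "always_allow"
DENY = "deny"


class ToolPermissions:
    """Hypothetical ask-first policy: prompt unless the user chose Always Allow."""

    def __init__(self) -> None:
        # (server, tool) pairs the user has permanently approved.
        self.always_allowed: set[tuple[str, str]] = set()

    def may_call(self, server: str, tool: str, ask: Callable[[str, str], str]) -> bool:
        key = (server, tool)
        if key in self.always_allowed:
            return True  # remembered choice: no prompt
        answer = ask(server, tool)  # show the permission dialog
        if answer == ALWAYS_ALLOW:
            self.always_allowed.add(key)
            return True
        return answer == ALLOW_ONCE


if __name__ == "__main__":
    prompts = []

    def ask(server: str, tool: str) -> str:
        prompts.append(tool)  # record each time the dialog would appear
        return ALWAYS_ALLOW

    perms = ToolPermissions()
    perms.may_call("microsoft-learn", "microsoft_docs_search", ask)
    perms.may_call("microsoft-learn", "microsoft_docs_search", ask)
    print(len(prompts))  # the second call is not prompted
```

With this policy, “Always Allow” trades one extra click now for no interruptions on later tool calls, while any tool you haven’t approved still triggers a prompt.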

After entering your query, you’ll see the model start to do its work. It will go through several rounds of “thinking” and calling the tools you’ve configured before producing its results. How long this takes depends on your hardware; on my Mac mini M4, it took about a minute. That’s quite a bit slower than a large cloud model like Claude Sonnet or GPT-5 would be, but it’s to be expected when everything runs locally. (Note that you can also configure Jan to use cloud models if you want to.)
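
Those rounds of “thinking” and tool calls follow a simple loop: the model either requests a tool or gives a final answer, and each tool result is appended to the conversation before the model runs again. The Python sketch below illustrates that loop under stated assumptions: `fake_model` is a hypothetical stub standing in for the local LLM, and `search_docs` is a hypothetical stand-in for a docs-search tool on an MCP server.

```python
import json
from typing import Callable


# Hypothetical tool: a stand-in for a docs-search tool on an MCP server.
def search_docs(query: str) -> str:
    return f"(search results for {query!r})"


TOOLS: dict[str, Callable[..., str]] = {"search_docs": search_docs}


def fake_model(messages: list[dict]) -> dict:
    """Stub for the local LLM: requests one search, then answers."""
    if not any(m["role"] == "tool" for m in messages):
        return {"tool_call": {"name": "search_docs",
                              "arguments": {"query": messages[0]["content"]}}}
    return {"content": "Summary based on: " + messages[-1]["content"]}


def run_agent(prompt: str, model: Callable[[list[dict]], dict], max_steps: int = 5) -> str:
    """Alternate between model turns and tool calls until the model answers."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # final answer
        result = TOOLS[call["name"]](**call["arguments"])
        # Record the request and its result so the next model turn can see both.
        messages.append({"role": "assistant", "content": json.dumps(call)})
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent("set preferredDataLocation for a SPO site", fake_model))
```

Every tool call adds another full model turn, and on local hardware each turn takes noticeable time, so a query that needs several tool calls takes correspondingly longer.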

One technical detail caught me off guard initially: you may sometimes see errors saying the model is out of context, meaning (basically) that it has run out of space in its context window for processing results. Setting the model’s context size to 0 tells Jan to load the context size directly from the model, which seems to work much better than configuring it manually. When I tried setting specific context values, I encountered various errors that disappeared once I switched to the automatic configuration.
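
Tool results are pasted into the conversation, so they eat into the context window quickly. The Python sketch below models the two ideas involved: a configured size of 0 meaning “use the model’s own value”, and a budget check that adds up the prompt, tool results, and room for the reply. It describes the behavior of the setting rather than Jan’s internals; the function names and every token count in it are hypothetical.

```python
def effective_context_size(configured: int, model_trained_ctx: int) -> int:
    """Resolve the context window: 0 means 'use the model's own value'."""
    if configured < 0:
        raise ValueError("context size cannot be negative")
    return model_trained_ctx if configured == 0 else configured


def fits_in_context(prompt_tokens: int, tool_result_tokens: list[int],
                    reserved_for_reply: int, context_size: int) -> bool:
    """True if the prompt, all tool results, and room for the reply fit."""
    used = prompt_tokens + sum(tool_result_tokens) + reserved_for_reply
    return used <= context_size


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    results = [3000, 2500, 2800]  # token counts of three tool results
    for configured in (2048, 0):
        ctx = effective_context_size(configured, model_trained_ctx=32768)
        print(configured, ctx, fits_in_context(500, results, 1024, ctx))
```

With a small manually chosen window, three moderately sized search results overflow it; deferring to the model’s own context length leaves room for them.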

The Practical Benefits

Querying the Microsoft Learn documents may not seem that useful; after all, you can do that from a browser. However, VS Code can also use MCP servers, so imagine being able to query those docs from your programming environment… that’s quite a bit more useful! The real value comes when the LLM can string together a sequence of operations. For example, if you use the excellent Lokka MCP server from Microsoft’s Merill Fernando, you can query any object in a Microsoft 365 tenant through Microsoft Graph. This opens up a huge range of possibilities because you can use the familiar “query -> filter -> action” pattern we’re all used to from PowerShell with many more non-Microsoft objects and services, in natural language, from your local machine. All of this can be done while keeping your prompts and the model’s processing on your own hardware.
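
Written out by hand, the “query -> filter -> action” pattern that the model strings together looks like the Python sketch below. It uses a hard-coded list of hypothetical user records that borrow a few real Microsoft Graph user property names (`displayName`, `accountEnabled`, `usageLocation`), and the action step only describes what it would do. With Lokka, the model would run the query against Graph itself and pick the steps from your plain-English request.

```python
from typing import Iterable, Iterator

# Query source: hypothetical records standing in for a Microsoft Graph /users response.
USERS = [
    {"displayName": "Ada", "accountEnabled": True, "usageLocation": "US"},
    {"displayName": "Grace", "accountEnabled": False, "usageLocation": "GB"},
    {"displayName": "Linus", "accountEnabled": False, "usageLocation": None},
]


def query_users() -> list[dict]:
    """Query step: fetch objects (here, from the hard-coded list)."""
    return USERS


def disabled_only(users: Iterable[dict]) -> Iterator[dict]:
    """Filter step: keep only disabled accounts."""
    return (u for u in users if not u["accountEnabled"])


def plan_actions(users: Iterable[dict]) -> list[str]:
    """Action step: describe what would be done (dry run, nothing is changed)."""
    return [f"Review licenses for disabled user {u['displayName']}" for u in users]


if __name__ == "__main__":
    for line in plan_actions(disabled_only(query_users())):
        print(line)
```

The steps are the same ones a PowerShell pipeline would perform; the difference with an MCP-enabled model is that you describe them in natural language and the model chooses which tools to chain.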

Looking Ahead

The local AI landscape is evolving rapidly, and tools like Jan represent an important alternative to cloud-based services. While the setup process currently requires some technical know-how, I expect these tools to become more user-friendly over time.

For organizations that prioritize data privacy, have concerns about internet dependency, or simply want more control over their AI tools, the combination of local MCPs and local LLM clients like Jan offers a compelling solution. As the performance of both models and hardware improves, this will become even more useful.

In a future Practical AI column, we’ll explore how to use Lokka from within Jan to help you query and manage your M365 tenants, and you’ll see that the combination of access to Azure, Entra, Microsoft 365, documentation, and local tooling gives you an incredibly powerful set of capabilities that you can’t get through traditional scripting, command-line interfaces, or GUIs.

As someone who’s spent years helping organizations navigate the balance between innovation and security, I find this approach particularly appealing. It lets you experiment with advanced AI capabilities while maintaining the kind of control and privacy that many organizations require.

The future of AI assistance doesn’t have to be entirely cloud-based. Sometimes, the smartest move is to keep your smartest tools close to home.