Full Access to Copilot GA

Just over two weeks ago at Ignite 2023, Microsoft confirmed which features made the cut for Microsoft 365 Copilot at general availability (GA), and which are coming further down the line.

With full access to the GA version of Microsoft 365 Copilot, we take a look at how well it works in the real world, and how it interacts with data in your Microsoft 365 tenant.

What Do Customers Who’ve Bought Copilot Say?

One view I’ve heard repeatedly from Microsoft customers who have bought Copilot is that Microsoft set expectations extremely high when it first announced Microsoft 365 Copilot earlier in the year.

That’s a fair statement. Because there hasn’t been a public preview and no trial is available, until you buy Copilot you can only rely on the announcement videos, which show what the product will do; it just doesn’t do everything yet. Microsoft Teams is a useful comparison: some features take longer to be released, and some are de-prioritized over time. Take Microsoft’s meeting room vision from a few years ago, which has only now been delivered.

Buying Microsoft 365 Copilot, like Teams, is buying a ticket on a journey to the destination promised when the product was first announced. Right now we’re close to the destination, but we aren’t quite there yet.

As of today, customers don’t appear to have settled on a common approach to rolling out Microsoft 365 Copilot. There’s a central reason for this: much of the technical preparation for Microsoft 365 Copilot isn’t new, and not every organization joining the preview started from the same point.

Much of the preparation applies just as well to Delve or Microsoft 365 Search, and it rests on three key pillars around data: understanding where it is and where it should be, understanding what data the organization needs, and ensuring the correct permission and protection controls are in place. For a larger mid-size organization or an enterprise, getting those sorted can easily take a year. In the meantime, the risks (which already exist anyway) need to be mitigated through good business change and adoption work that gives users the information they need to use Copilot safely and effectively.

Real World Performance: Far Better than ChatGPT, by a Long Shot

Microsoft 365 Copilot uses GPT-4 under the hood, within the Microsoft 365 compliance boundary. As a starting point, then, most folks would expect performance similar to OpenAI’s ChatGPT, and certainly better than Copilot in Edge (Bing Chat Enterprise).

It is better, but not purely because of any secret sauce employed by Microsoft. Copilot is effectively a system that uses Retrieval Augmented Generation (RAG), grounding its responses in the content you’re working on and in content retrieved from Microsoft Graph (with the Semantic Index), and then orchestrates the Office apps when manipulating documents.

The orchestration component is very good, even in its current form, but it would have been for nothing if the generated content were no better than ChatGPT’s; the two go hand in hand to provide value. The performance and quality of the generated content come down to several aspects: the system prompt (the instructions that precede the user’s prompt and guide how the model should respond); the data provided by search results and by the current document; and the model’s context size. Longer context sizes, such as those in OpenAI’s new GPT-4-128k or the older GPT-4-32k, mean longer documents, history, and guidance can be passed to the underlying model when it forms a response.
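To make the role of context size concrete, here is a minimal sketch of how a generic RAG system might pack a prompt: system prompt first, then as many retrieved snippets as fit (highest relevance first), then the user’s prompt. This is not Microsoft’s implementation, which isn’t published; the `Snippet` type, `build_prompt` function, relevance scores and the word-count "tokenizer" are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str   # hypothetical identifier, e.g. a document title or URL
    text: str
    score: float  # relevance score assigned by the retrieval step


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one "token" per whitespace-separated word.
    return len(text.split())


def build_prompt(system_prompt: str, user_prompt: str, snippets: list[Snippet],
                 context_limit: int, reserve_for_answer: int = 500) -> str:
    """Pack the highest-scoring snippets into the budget left after the system
    prompt, the user's prompt and the space reserved for the model's answer."""
    budget = context_limit - reserve_for_answer
    budget -= estimate_tokens(system_prompt) + estimate_tokens(user_prompt)
    if budget < 0:
        raise ValueError("context window too small for the prompts alone")
    grounding = []
    for snippet in sorted(snippets, key=lambda s: s.score, reverse=True):
        block = f"[source: {snippet.source}]\n{snippet.text}"
        cost = estimate_tokens(block)
        if cost > budget:
            continue  # does not fit; try the next (lower-scoring) snippet
        grounding.append(block)
        budget -= cost
    return "\n\n".join([system_prompt, *grounding, user_prompt])
```

With a small `context_limit`, lower-ranked snippets are simply dropped; raising the limit (as moving from a 32k to a 128k model would) lets more documents and history reach the model.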

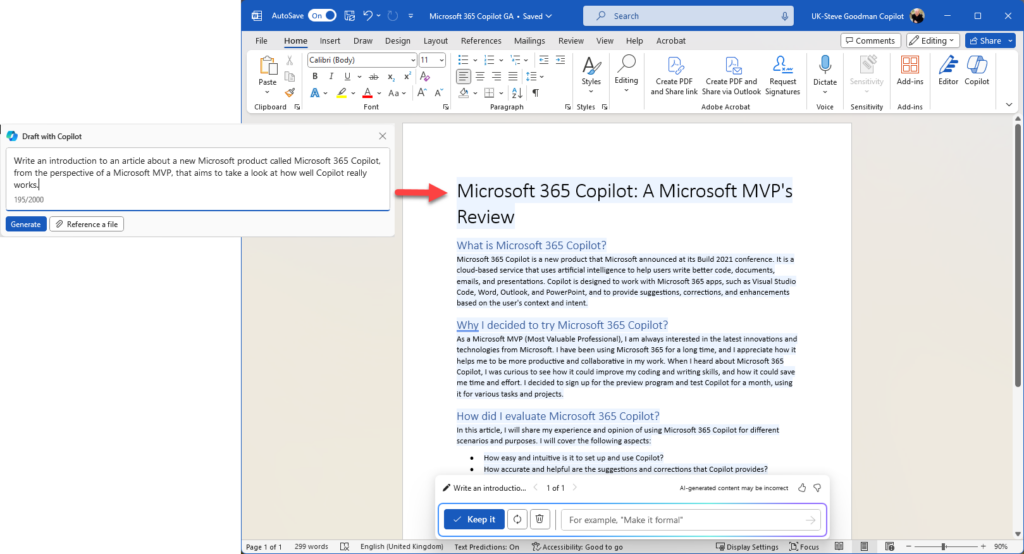

Although Microsoft doesn’t publish the specific prompts it uses, the prompts appear to vary by context. Microsoft 365 Chat is more likely to generate near-identical results to ChatGPT for similar questions answered from the underlying model, whereas Microsoft Word generates much longer text, as shown in Figure 1.

The longer content does present some problems: while it provides a good starting point, you’ll note that I didn’t use any of it for this article. Much like anything generated by the GPT-3.5 and GPT-4 models, the content feels generic and vanilla. Even with additional prompting, or with a sample of the type of content I wanted, the best description is that it reads as if a copy editor had applied every single Grammarly suggestion and stripped out the writer’s personality.

That’s not a criticism of Copilot, though. Microsoft calls these products Copilots to set an expectation that people don’t always have with ChatGPT: it helps you along, but it isn’t going to create the final result for you. Microsoft’s suggestions for how to use it, such as helping you get started with writing, are the right ones.

In Excel there are some key things to be aware of. Firstly, Excel is the thing that is supposed to do the maths, not Copilot, but the lines become somewhat blurred.

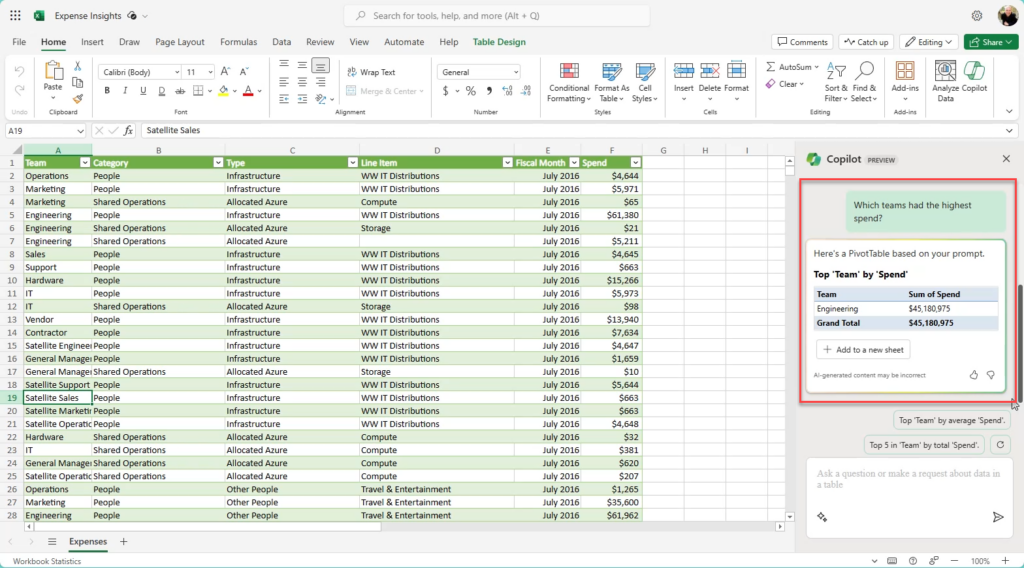

As you’ll see in Figure 2, Copilot in each Office app includes a menu that helps you discover what you can ask of it, along with in-context suggestions to choose from. In the example shown, Copilot becomes useful for analysing the information in a spreadsheet.

Depending on your experience with Excel, this might save you a minute or a lot longer. Personally, I don’t create PivotTables very often, so I’d have had to use a different method to calculate this. It was useful, but I still had to look at the data briefly to check it was accurate.

It becomes more useful when you use it to create PivotTables and other kinds of summary information, rather than just answering questions at a point in time. It isn’t perfect, though, and sometimes it takes a few attempts to get it to follow instructions.

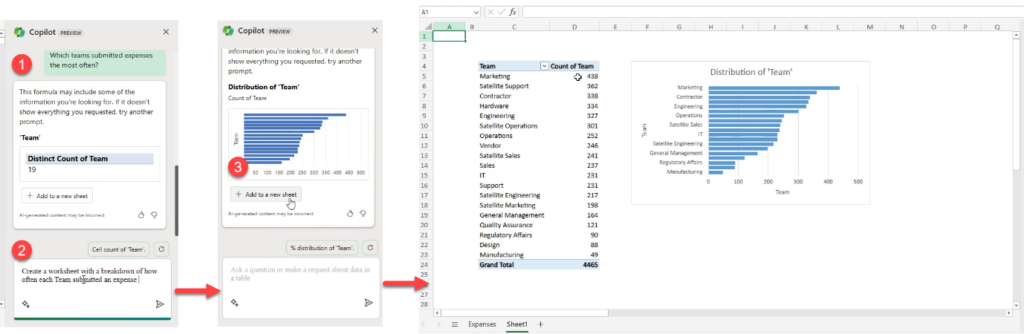

In Figure 3, you’ll see I asked “Which teams submitted expenses the most often?” (1). It answered that there are 19 teams, which is pretty unhelpful. Deciding it would be more useful to have a worksheet breaking down how often each team submitted expenses, and asking for that (2), gave far better results (3).
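Because you still need to check Copilot’s answers against the data, it helps to be clear what the second request actually computes: a count of submissions per team, sorted by frequency. That is a different question from how many distinct teams exist, which is what the first answer reported. The sketch below uses a handful of hypothetical rows, not the data from Figure 3.

```python
from collections import Counter

# Hypothetical expense rows (team, amount); in practice these come from the worksheet.
expenses = [
    ("Sales", 120.00),
    ("Marketing", 45.50),
    ("Sales", 80.25),
    ("Finance", 12.00),
    ("Sales", 300.00),
    ("Marketing", 60.00),
]


def submissions_by_team(rows):
    """Count how many expense claims each team submitted, most frequent first."""
    counts = Counter(team for team, _amount in rows)
    return counts.most_common()


def distinct_teams(rows):
    """The number of teams: the kind of answer the first question received."""
    return len({team for team, _amount in rows})


for team, n in submissions_by_team(expenses):
    print(f"{team}: {n}")
print(f"Distinct teams: {distinct_teams(expenses)}")
```

A PivotTable with the team as the row field and a count of entries as the value does the same job inside Excel.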

PowerPoints from Word Documents are Great: What About Other Apps?

Creating a PowerPoint presentation from a Word document is something Microsoft touts, and Copilot does a fair job of it, but the joined-up experience across other apps isn’t there just yet.

For example, I can’t ask Copilot in OneNote to write up my notes in a Word document and tidy them up; I’d have to do that in OneNote and then copy and paste the result. Similarly, although Microsoft 365 Chat offers to send an email, I have to copy and paste the draft into Outlook, despite Microsoft’s demos showing that this should be possible. This is potentially where the domain-specific language that Microsoft prompts the GPT model to use reaches its limits: it can only do what Microsoft gives it the ability to do.
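The idea that the model “can only do what Microsoft gives it the ability to do” can be illustrated with a generic orchestration pattern: the model proposes steps, and the orchestrator only executes steps that map to a registered action. Microsoft hasn’t published its domain-specific language, so the `ACTIONS` registry, the `action: argument` step format and the action names below are entirely hypothetical.

```python
from typing import Callable

# Hypothetical registry: the only actions this orchestrator knows how to perform.
# Each handler takes the current document text and an argument and returns new text.
ACTIONS: dict[str, Callable[[str, str], str]] = {
    "insert_heading": lambda doc, arg: doc + "## " + arg + "\n",
    "insert_paragraph": lambda doc, arg: doc + arg + "\n",
}


def run_plan(plan: list[str], document: str) -> tuple[str, list[str]]:
    """Execute model-proposed steps written as 'action: argument'.

    Steps naming an action outside the registry are not executed; they are
    collected so the caller can tell the user what could not be done."""
    unsupported = []
    for step in plan:
        action, _sep, arg = step.partition(":")
        handler = ACTIONS.get(action.strip())
        if handler is None:
            unsupported.append(action.strip())
            continue
        document = handler(document, arg.strip())
    return document, unsupported
```

However fluent the model’s plan, a step such as `send_email: ...` does nothing unless a handler for it exists; returning the unsupported steps, rather than silently dropping them, is one way an assistant could tell the user that a request isn’t possible.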

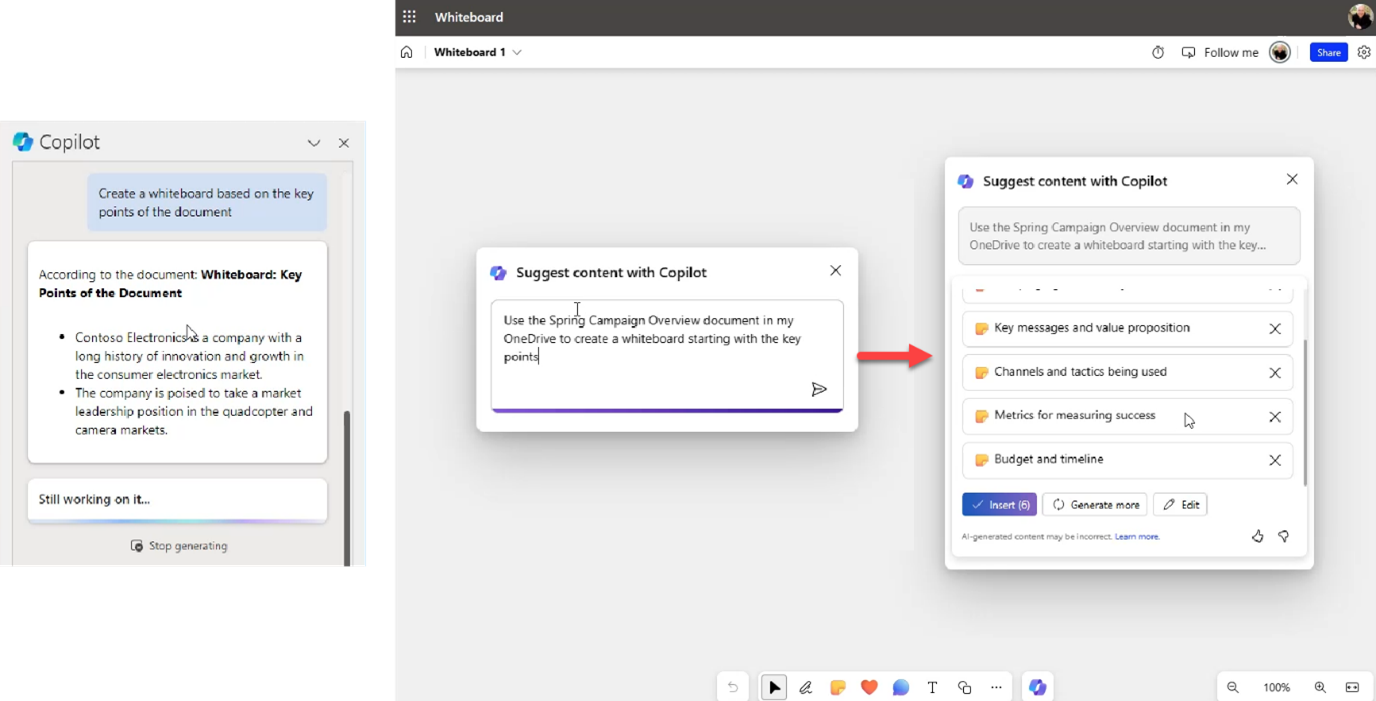

Microsoft Whiteboard’s Copilot integration is one area I’ve been particularly excited about, and I’m still impressed. It is, though, an area where the integration shows the same inconsistencies, and unfortunately Copilot doesn’t gracefully let you know when something isn’t working.

In Figure 4, you’ll see Microsoft Word on the left and Microsoft Whiteboard on the right. In Word, the answer makes no sense and gives me several bullet points that wouldn’t make great starters to expand upon in a Whiteboard session. On the right, Whiteboard appears to have completed my request, but it feels like it’s hallucinating, as it created my starting points with no reference to the document in question.

It’s a similar experience across the Office apps. You can use the forward slash to search for a document in most places, but not all, and the results are limited to one or two documents and aren’t as current as the list you see when adding a cloud attachment in Outlook. This is disappointing, as it’s reasonable for a user to expect the same most recently used list and document search results they’d find elsewhere in the Office apps.

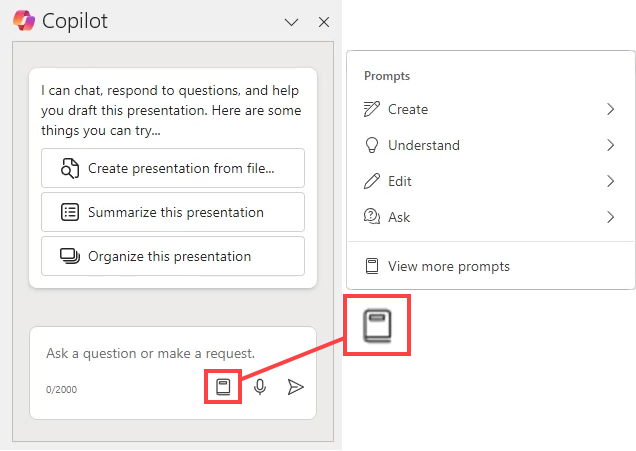

The best way to avoid issues like this is to make sure people use the guidance provided in-app, not only for hints on what each experience can do but also to understand the limitations each may have. At the Copilot prompt in each Office app, a small book icon opens an in-context prompt library, and its View more Prompts link leads to Copilot Lab, where you might provide an organizational prompt library. As you’ll see in Figure 5, the library is separated into four types of task: Create, Understand, Edit, and Ask.

Microsoft 365 Chat is best for Cross-Microsoft 365 Answers, but Highlights Issues with your Data that you May Never Solve

On the Microsoft 365 portal homepage and in Microsoft Teams you’ll find Microsoft 365 Chat. For most users, this will be the “ChatGPT” of Microsoft 365 Copilot and is quite effective as an all-encompassing chatbot that can answer questions about policies, procedures, and almost anything you can imagine about the company you work for.

Naturally, to do so it needs good information to work with: it uses the results of a Microsoft Graph search, run in the context of the user asking the question, as grounding data for the generated response.
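The phrase “in the context of the user” is what makes the permissions pillar matter: a search run as the user only returns content that user can already read, and only that content becomes grounding. The sketch below illustrates the principle with a toy in-memory index; it is not how Microsoft Graph or the Semantic Index are implemented, and the `Document` type, `readers` sets and naive term-count scoring are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    text: str
    readers: set[str] = field(default_factory=set)  # users/groups allowed to read it


def search_as_user(docs: list[Document], query: str, user: str,
                   groups: set[str]) -> list[Document]:
    """Return documents matching the query that the caller is allowed to read,
    ranked by a naive term-frequency score."""
    principals = {user} | groups
    terms = query.lower().split()
    hits = []
    for doc in docs:
        if not (doc.readers & principals):
            continue  # security trimming: the caller has no access to this document
        score = sum(doc.text.lower().count(term) for term in terms)
        if score > 0:
            hits.append((score, doc))
    hits.sort(key=lambda hit: hit[0], reverse=True)
    return [doc for _score, doc in hits]


def grounding_for(docs, query, user, groups, top_k=3):
    """Concatenate the top-k accessible results into grounding text for a prompt."""
    results = search_as_user(docs, query, user, groups)[:top_k]
    return "\n\n".join(f"[{d.title}]\n{d.text}" for d in results)
```

Two users asking the same question can therefore get different answers, and an overshared document is as eligible for grounding as a well-governed one. Note also that nothing in a relevance score like this says whether a document is current.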

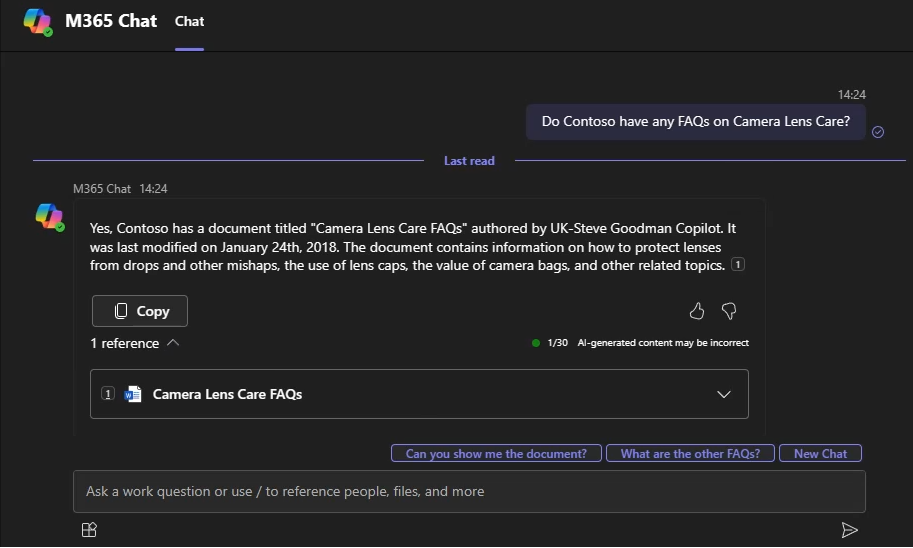

In Figure 6, you’ll see that with the right data, it answers questions accurately and effectively, and links to the source content for reference.

In this example, it provides a link to a relevant FAQ document, which can be opened and interrogated further.

Of course, it won’t tell us whether it’s the right FAQ. It could be an old document, such as a copy we downloaded a few years ago that has since been superseded. It gives us enough context to judge the document we’re shown, but not enough to know whether there’s a better version out there.

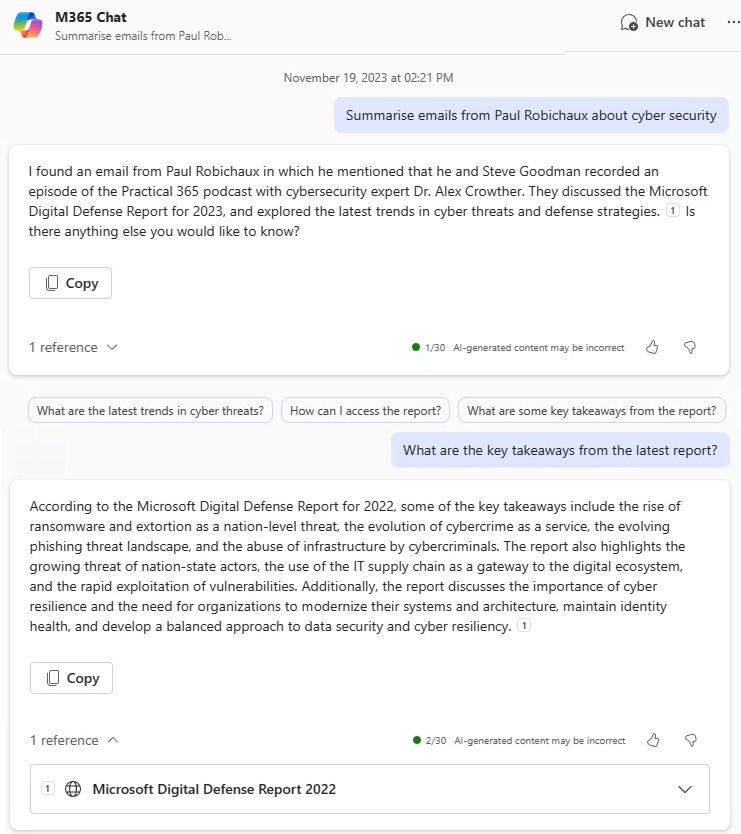

In Figure 7, I ask a question about my emails from Paul Robichaux. You could be forgiven for thinking this was answered very well, and it did start out OK.

Its success was short-lived, though. At first, it understood that we were talking about a report from 2023 and even prompted us to ask about the report’s key takeaways.

However, it then answered using a copy of the 2022 report that had been shared in a SharePoint site collection unrelated to the email thread.

Of course, this comes down to data quality, and you could argue that managing a document’s lifecycle is important whether or not you use Copilot. In this case, however, I disagree: keeping previous versions of a report or document for reference is common and often serves a purpose, such as checking whether a vendor or supplier met its goals. It’s also a behavior you won’t easily be able to avoid, because people do keep copies of documents in their OneDrive.

Final Thoughts

While some aspects of Copilot are frustrating, a lot of this points back to Microsoft demonstrating a vision that might be one to two years away, rather than a realistic picture of what it could actually deliver by GA. Had Copilot in its current form been demonstrated as the vision, we’d have been just as impressed, because it is impressive.

To make the best use of Microsoft 365 Copilot, you do need to know its capabilities and pick tasks within its grasp. Working in context within a document is one area where it exceeds expectations, especially when asking questions about the content of a document, spreadsheet, and so on. It’s easy to imagine people using it as a general, hard-to-quantify time-saver: less about summarising a document and more about asking questions about the parts that matter to them instead of reading the whole thing.

For most tasks that involve search and rely on good results, the value Copilot offers depends very much on how well your underlying information architecture has been set up. Imagine SharePoint Online as a physical library: does yours have books thrown all over the place and shelved in the wrong sections, or is it neat, tidy, and organized? I’ll bet that for most organizations, it’s the former.
