Some Information Security Considerations to Note Before Deployment Begins

Copilot for Microsoft 365 redefines the way people work with the Office apps, or at least that’s Microsoft’s promise. The AI assistant also introduces new challenges for administrators in safeguarding sensitive information. As administrators, our goal is to ensure that Copilot usage is safe and secure and complies with organizational standards.

Perhaps you’ve heard that Copilot for Microsoft 365 can access content that individual users have (at minimum) view access to. For example, if a user can view a SharePoint document, Copilot, under that user’s context, can also process it. While this is true, it is an oversimplification: with protected content, view access for users does not always guarantee access for Copilot.

This article examines how Copilot for Microsoft 365 handles protected content, including how it interacts with Microsoft Purview sensitivity labels. Hopefully, this information will help administrators to configure information protection controls and understand their impact in relation to Copilot for Microsoft 365.

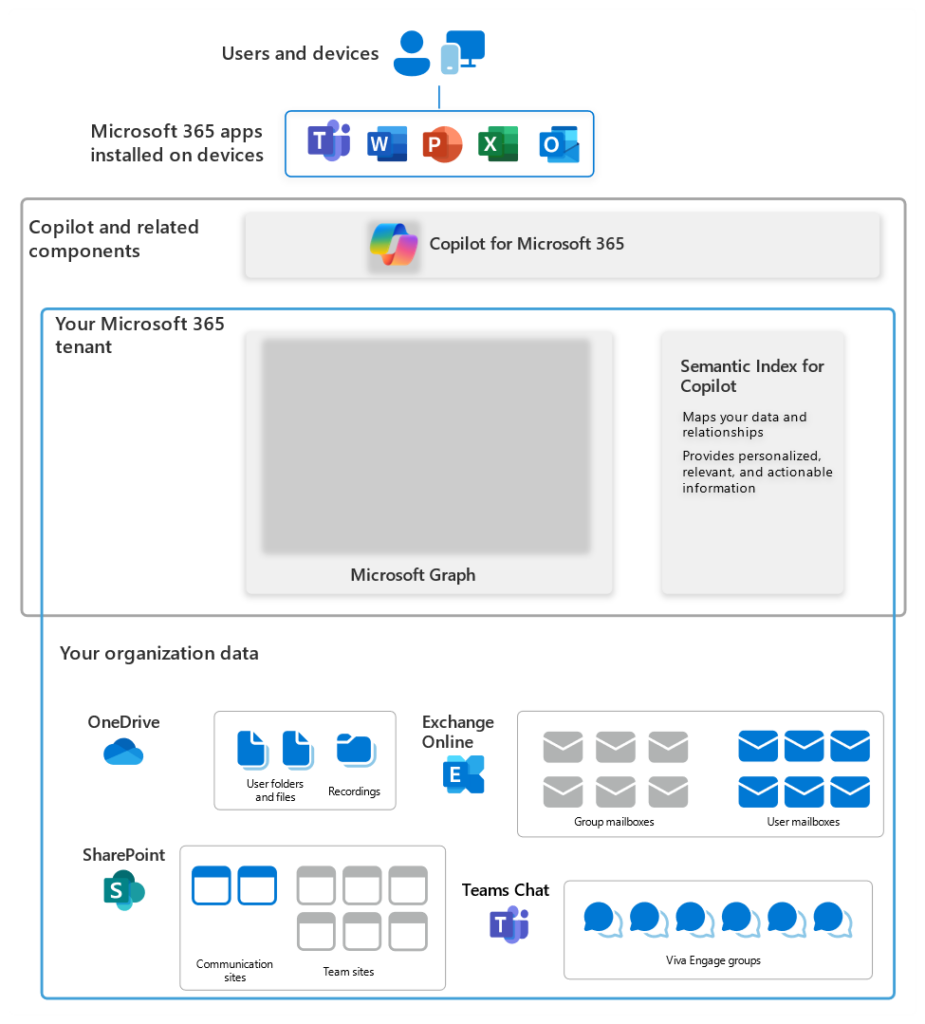

What Does Copilot for Microsoft 365 Have Access To?

Copilot for Microsoft 365 accesses content in Microsoft 365 through Microsoft Graph (Figure 1) and is designed to respect the information access restrictions within a tenant. This Microsoft article explains in depth how Copilot for Microsoft 365 works, including how it accesses content.

Administrators and end users control access to information in Microsoft 365 through permissions and privacy settings: SharePoint Online and OneDrive permissions, Microsoft 365 Group membership, and group privacy settings (Public or Private). Organizations can also choose to encrypt content (Microsoft Information Rights Management (IRM), Microsoft Purview Message Encryption, S/MIME email encryption, and Microsoft Office password protection), but Copilot doesn’t support every method an organization might use to protect data.

Let’s explore the encryption methods that present challenges for Copilot for Microsoft 365…

Encrypting Content in Microsoft 365 with Copilot for Microsoft 365

To ensure that Copilot for Microsoft 365 and encryption work smoothly together, administrators should consider which methods are used to encrypt content in Microsoft 365 – some commonly used encryption controls are partially or completely unsupported by Copilot and will impact its ability to return content.

The Azure Rights Management service (Azure RMS) is fully supported by Copilot for Microsoft 365. While Microsoft Information Rights Management (IRM), Microsoft Purview Message Encryption (OME), and sensitivity labels all use Azure RMS, organizations should focus on OME and sensitivity labels as the basis for protection of sensitive material in tenants where Copilot is active.

S/MIME encryption and Double Key Encryption (DKE) are completely unsupported by Copilot for Microsoft 365: Copilot does not handle S/MIME-encrypted emails, and DKE-encrypted data is not accessible at rest to any Microsoft 365 service. Copilot will not return content protected by S/MIME or DKE, and it cannot be used in apps while that protected content is open.

Microsoft Office password protection is only partially supported by Copilot for Microsoft 365. Copilot can access password-protected documents only while they are open in their app. For example, users can open a password-protected Excel workbook and use Copilot inside Excel, but they cannot open Microsoft Word and use Copilot to create a document from the data that is open in Excel. Administrators can use Group Policy (GPOs) or Intune to prevent users from password-protecting Office files.
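To make the support levels above easier to scan, here is a minimal Python sketch that encodes them as a lookup table. The method keys (`azure_rms`, `office_password`, `smime`, `dke`) and the `copilot_can_use` helper are hypothetical names for illustration only; they are not part of any Microsoft API, and the function simply restates the behavior described in this section.

```python
from enum import Enum


class Support(Enum):
    FULL = "full"        # usable, still subject to the label's usage rights
    PARTIAL = "partial"  # usable only while the file is open in its own app
    NONE = "none"        # never usable by Copilot


# Hypothetical keys summarizing the methods discussed above; these are
# illustrative strings, not identifiers from any Microsoft API.
COPILOT_ENCRYPTION_SUPPORT = {
    "azure_rms": Support.FULL,          # IRM, OME and sensitivity labels
    "office_password": Support.PARTIAL,
    "smime": Support.NONE,
    "dke": Support.NONE,
}


def copilot_can_use(method: str, open_in_same_app: bool = False) -> bool:
    """Return True if Copilot could work with content protected by `method`.

    `open_in_same_app` means the protected file is currently open in the
    app where the user is invoking Copilot (e.g. Copilot inside Excel for
    an open password-protected workbook).
    """
    support = COPILOT_ENCRYPTION_SUPPORT[method]
    if support is Support.FULL:
        return True
    if support is Support.PARTIAL:
        return open_in_same_app
    return False  # S/MIME and DKE: not even while the item is open
```

For example, `copilot_can_use("office_password")` is false, while `copilot_can_use("office_password", open_in_same_app=True)` is true, mirroring the Excel example above.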

How Copilot for Microsoft 365 Works with Sensitivity Labels

Sensitivity labels support the classification and protection of Office and PDF content (read this article for a deeper dive). Protected documents carry metadata identifying the label that protects them, including the usage rights granted to different users. When a user opens a document, the Information Protection service grants that user the usage rights assigned to them in the label’s rights definitions.

In order for Copilot to use information to ground a prompt – whether a user provides a reference document for explicit grounding, or Copilot finds the information via Graph queries – labels must grant the VIEW and EXTRACT usage rights to the user on whose behalf Copilot is processing a protected document.
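The core rule can be expressed as a simple set check: Copilot may ground on a protected document for a user only if that user holds both VIEW and EXTRACT. The sketch below is a simplified model, not Microsoft’s implementation; `ProtectedDocument`, `can_ground_on`, and the per-user rights mapping are hypothetical, and only the usage-right names VIEW and EXTRACT come from the rights definitions discussed here.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet

# Both rights must be present for Copilot to ground a prompt on the document
REQUIRED_FOR_GROUNDING = frozenset({"VIEW", "EXTRACT"})


@dataclass
class ProtectedDocument:
    name: str
    # Hypothetical mapping: user principal name -> usage rights from the label
    rights_by_user: Dict[str, FrozenSet[str]] = field(default_factory=dict)


def can_ground_on(doc: ProtectedDocument, user: str) -> bool:
    """True if Copilot, acting for `user`, may use `doc` to ground a prompt."""
    granted = doc.rights_by_user.get(user, frozenset())
    return REQUIRED_FOR_GROUNDING <= granted  # subset check
```

In this model, a user granted only VIEW can still open the document themselves, but `can_ground_on` returns False for them, which is exactly the gap between user access and Copilot access described at the start of this article.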

In a couple of cases, Copilot can process content with only the VIEW usage right enabled:

- If a user applies restrictions to content with Information Rights Management, and the content also has a sensitivity label that does not apply encryption, content can be returned by Copilot in chat.

- In Copilot in Edge and Copilot in Windows, Copilot can reference encrypted content from the active browser tab in Edge (including within Office web apps). To block this completely, configure Endpoint DLP to block “Copy to clipboard” activity for sensitive content. Even with this setting configured to “Block” or “Block with override”, copying is not blocked when the destination is within the same Microsoft 365 Office app. The result: users cannot use Copilot in Edge or Copilot in Windows to circumvent restrictions when they have only the VIEW usage right to a document, while Copilot for Microsoft 365 itself is not hindered.
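The two VIEW-only exceptions can be layered on top of the normal VIEW plus EXTRACT rule. This is a rough sketch under stated assumptions: `copilot_can_reference` and its keyword flags are hypothetical names that restate the two bullets above, and the Endpoint DLP flag models only the “Copy to clipboard” block described there, not every DLP configuration.

```python
from typing import FrozenSet


def copilot_can_reference(
    rights: FrozenSet[str],
    *,
    irm_with_unencrypted_label: bool = False,  # hypothetical flag, chat responses only
    edge_active_tab: bool = False,             # Copilot in Edge / Windows, active tab
    endpoint_dlp_blocks_clipboard: bool = False,
) -> bool:
    """Simplified model of when Copilot can reference protected content."""
    if {"VIEW", "EXTRACT"} <= rights:
        return True   # the normal rule
    if "VIEW" not in rights:
        return False  # without VIEW, no exception applies
    # Exception 1: IRM restrictions plus a label that does not encrypt
    if irm_with_unencrypted_label:
        return True
    # Exception 2: active Edge tab, unless Endpoint DLP blocks clipboard copy
    if edge_active_tab:
        return not endpoint_dlp_blocks_clipboard
    return False
```

Modeling the exceptions as explicit flags keeps the default path strict: unless a caller opts into one of the documented exceptions, VIEW alone is never enough.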

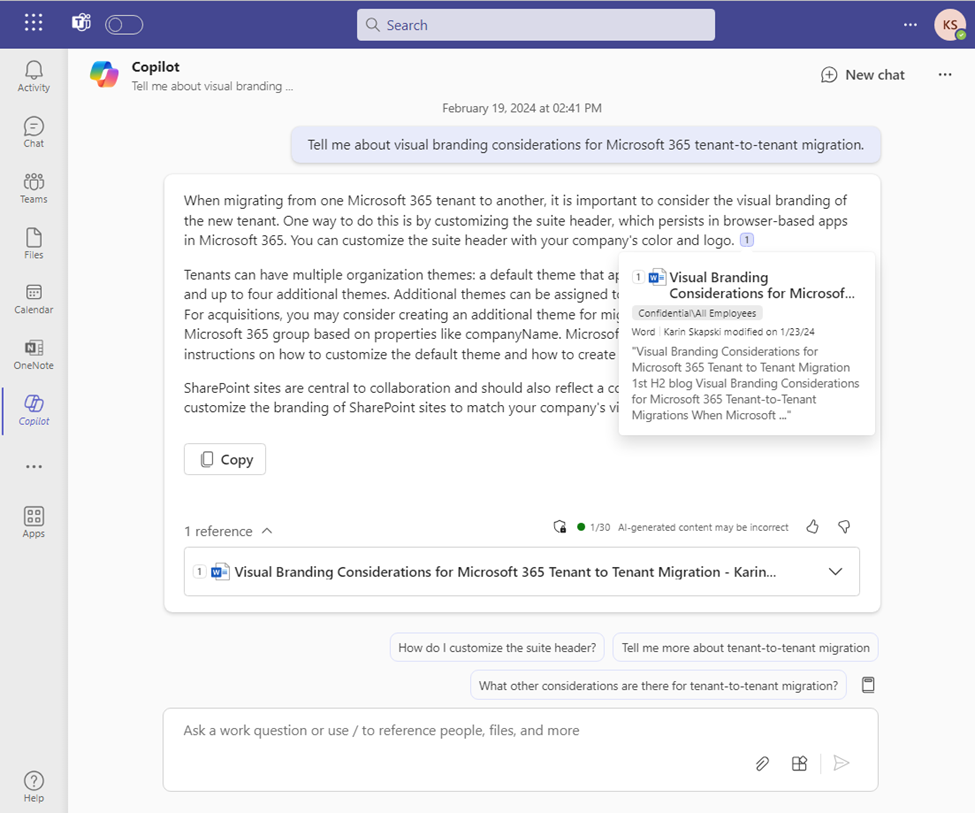

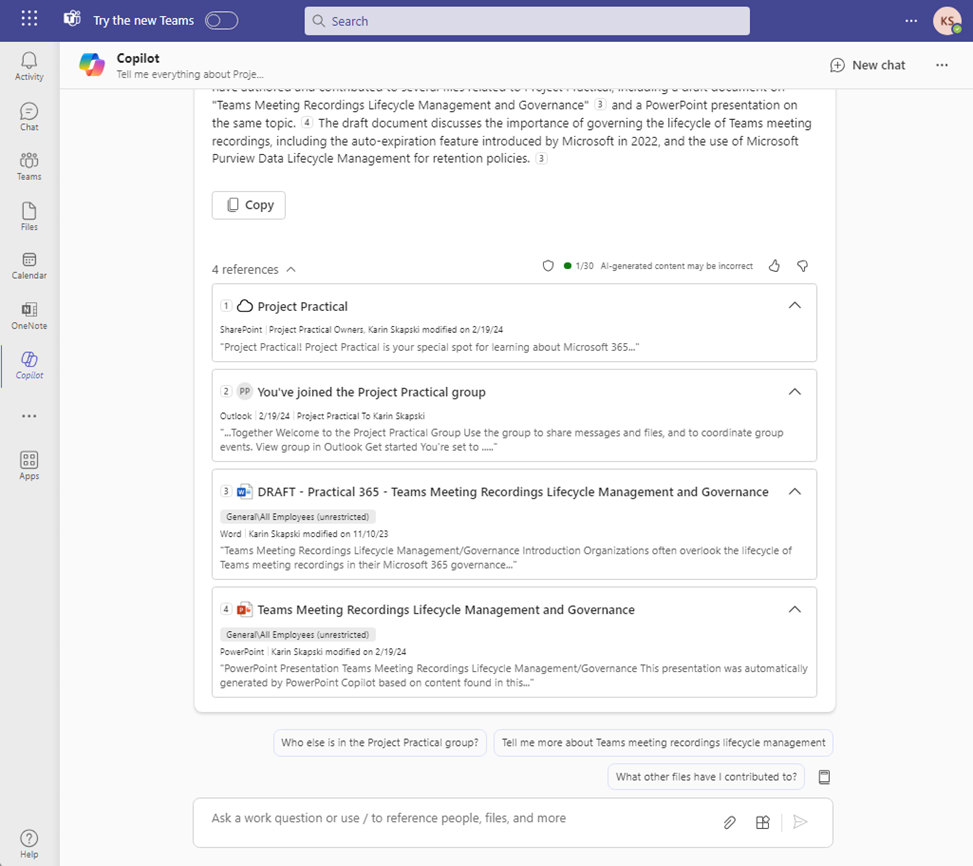

Copilot also takes sensitivity labels into account in the output it produces. In Copilot for Microsoft 365 chat conversations, sensitivity labels are displayed for citations and items listed in responses (Figures 2 & 3).

A few scenarios do not support sensitivity labels:

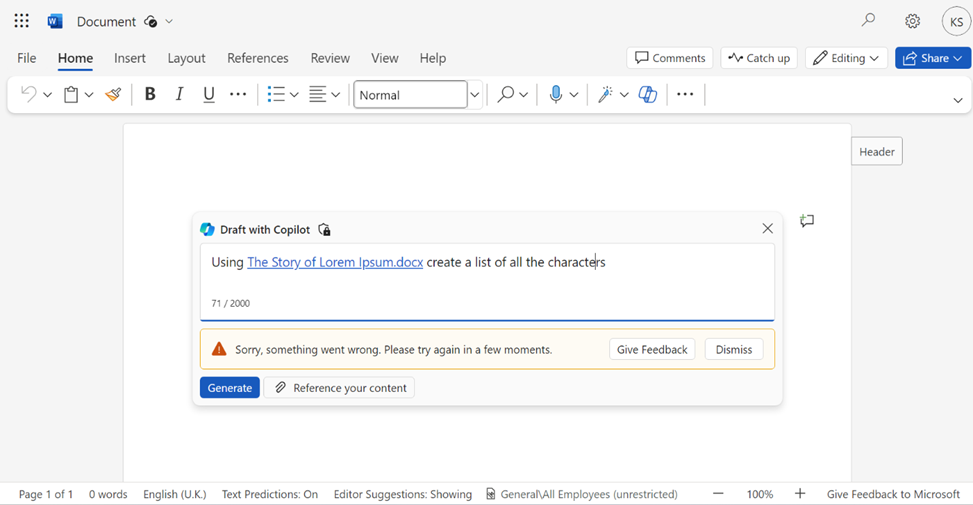

- Copilot can’t create PowerPoint presentations from encrypted files or generate draft content in Word from encrypted files.

- In chat messages with Copilot for Microsoft 365, the Edit in Outlook option does not appear when reference content has a sensitivity label applied.

- Sensitivity labels and encryption applied to data from external sources, including Power BI data, are not recognized by Copilot for Microsoft 365 chat. If this poses a security risk for your organization, you can remove connections that use Microsoft Graph connectors and disable plugins for Copilot for Microsoft 365. However, doing so prevents you from extending Copilot for Microsoft 365 and limits the value your organization gets from it.

- Sensitivity labels that protect Teams meetings and chat are not recognized by Copilot (this does not include meeting attachments or chat files which are stored in OneDrive or SharePoint).

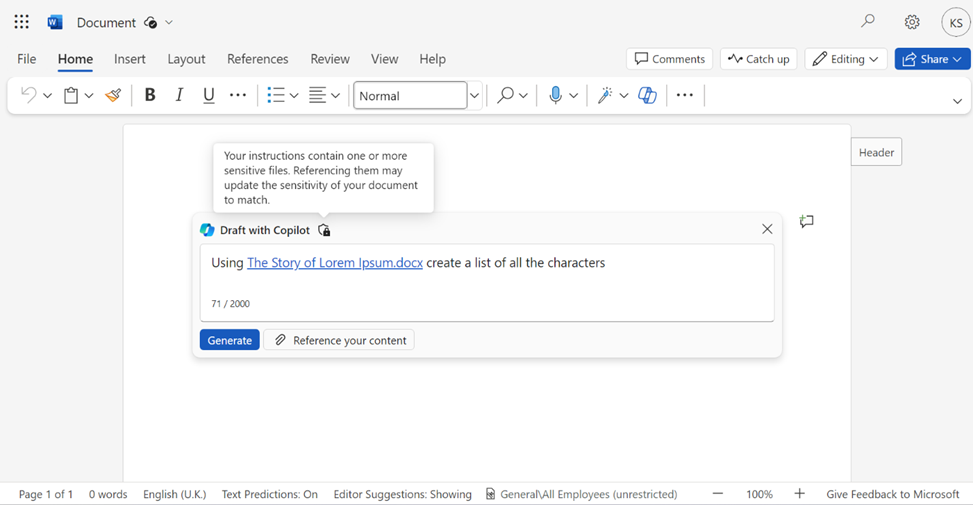

In Word, Copilot can use up to 3 reference documents to ground a prompt. While Copilot cannot generate draft content from encrypted files, it can generate content from labeled content that isn’t encrypted.
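A pre-flight check for Word drafting could combine the two constraints in this paragraph: at most three reference documents, and no encrypted ones (labeled but unencrypted files are fine). The sketch below is hypothetical; `ReferenceDoc` and `validate_word_references` are illustrative names, and the limit of 3 is taken from the text above rather than from any API.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_WORD_REFERENCE_DOCS = 3  # limit stated in this article


@dataclass(frozen=True)
class ReferenceDoc:
    name: str
    label: Optional[str]  # sensitivity label name, if any
    encrypted: bool       # True if the label (or another method) encrypts the file


def validate_word_references(docs: List[ReferenceDoc]) -> List[str]:
    """Return problems found; an empty list means Copilot in Word could draft from these docs."""
    problems = []
    if len(docs) > MAX_WORD_REFERENCE_DOCS:
        problems.append(
            f"too many reference documents ({len(docs)} > {MAX_WORD_REFERENCE_DOCS})"
        )
    for doc in docs:
        if doc.encrypted:
            problems.append(f"{doc.name}: encrypted files can't be used to draft content")
    return problems
```

Note that a document labeled, say, “General” without encryption passes the check; only the `encrypted` flag blocks it.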

When you use Copilot for Microsoft 365 to create content based on data with a sensitivity label applied, the content you create inherits the sensitivity label from the source data. When multiple reference documents are used that have different sensitivity labels applied, the sensitivity label with the highest priority is selected.
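Inheritance from multiple sources amounts to taking the maximum by label priority. In Microsoft Purview, labels are ordered, and a label further down the list (a larger order number) has higher priority. The sketch assumes that convention; `SensitivityLabel`, `inherited_label`, and the example label names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class SensitivityLabel:
    name: str
    # Order in the Purview label list; a larger number means higher priority
    priority: int


def inherited_label(
    source_labels: Iterable[Optional[SensitivityLabel]],
) -> Optional[SensitivityLabel]:
    """Pick the label that new Copilot output would inherit from its sources."""
    labeled = [label for label in source_labels if label is not None]
    if not labeled:
        return None  # no labeled sources, nothing to inherit
    return max(labeled, key=lambda label: label.priority)
```

For instance, drafting from one “General” source, one unlabeled source, and one “Confidential” source would yield “Confidential”, assuming “Confidential” sits lower in the label list.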

This table outlines the outcomes when Copilot applies protection with sensitivity label inheritance. Figures 4, 5, and 6 show this experience:

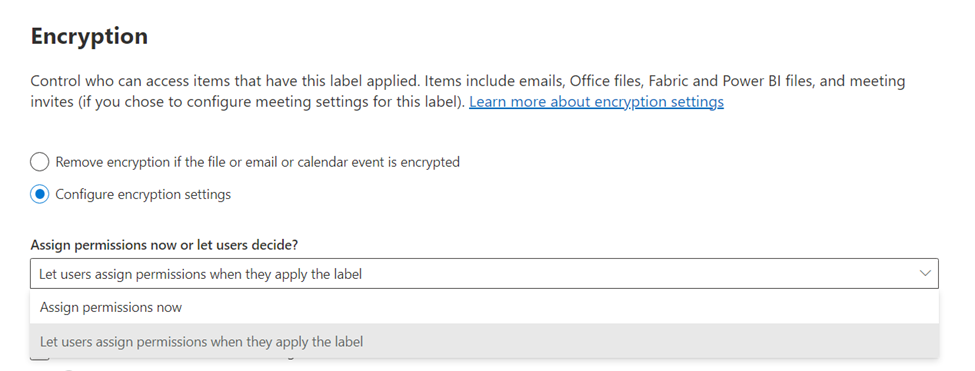

User-Defined Permissions are Not Recommended

Encryption settings for sensitivity labels restrict access to content that the label will be applied to. When configuring a sensitivity label to apply encryption, the options for permissions are:

- Assign permissions now (determine which users get permissions to content with the label as you create the label)

- Let users assign permissions (when users apply the label to content, they determine which users get permissions to that content)

When items are encrypted with “Let users assign permissions” (Figure 7), only the author is guaranteed to receive usage rights sufficient for Copilot to access the content.

When users assign permissions, they may not know about, or may forget, the need to grant the EXTRACT usage right. They may grant only VIEW rights, or choose predefined permissions that don’t include EXTRACT, such as Do Not Forward. Because the user who applies encryption automatically receives all usage rights, Copilot for Microsoft 365 returns the content to them as intended, so they have no reason to suspect that the usage rights are misconfigured. Meanwhile, the other users they intended to share with cannot work with the content through Copilot for Microsoft 365.
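The sketch below reproduces this blind spot. It is a simplified, hypothetical model: `copilot_access_report` is an illustrative name, the author is modeled as holding the OWNER (Full Control) right, and a recipient given only VIEW stands in for any user-assigned permission set without EXTRACT.

```python
from typing import Dict, FrozenSet

COPILOT_REQUIRED = frozenset({"VIEW", "EXTRACT"})


def copilot_access_report(
    author: str, assigned: Dict[str, FrozenSet[str]]
) -> Dict[str, bool]:
    """Report, per user, whether Copilot could use the item on their behalf."""
    rights = dict(assigned)
    # The user who applies encryption automatically receives full rights (OWNER)
    rights[author] = frozenset({"OWNER"})
    return {
        user: "OWNER" in granted or COPILOT_REQUIRED <= granted
        for user, granted in rights.items()
    }
```

Running this with a colleague granted only VIEW shows True for the author and False for the colleague: the author’s own testing succeeds, which is why the misconfiguration goes unnoticed.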

Strike a Balance Between Security and Usability

By understanding how Copilot handles sensitivity labels and protected content, and managing its capabilities accordingly, we can harness its full potential without compromising information security.

My recommendations for fellow administrators dealing with Copilot for Microsoft 365 are straightforward: use OME and sensitivity labels wherever encryption is needed, keep up with the latest changes to Copilot for Microsoft 365, and adjust your security game plan as things evolve.

Lastly, don’t forget to loop in your users. A little education goes a long way in making sure they know the importance of labeling data correctly, being careful when Copilot references sensitive information, and understanding Copilot’s limitations with encrypted content.