Deep Dive into Copilot for Microsoft 365

Two of the best ways to learn how something works are to try to break it or to build your own. I’ve been on the preview for Microsoft 365 Copilot for several months with the goal of understanding what the many businesses I work with will need to do to prepare, roll out, and manage it. After Copilot for Microsoft 365’s launch last month, I’m finding the documentation isn’t terrible – but in true Microsoft form it tries to obfuscate common AI concepts, and useful information is hidden within over-explanation.

If you are an IT manager or IT pro planning to deploy Copilot for Microsoft 365, then first and foremost you need to learn generative AI concepts, and your users need to understand how to prompt. When it comes to the quality of the generated text, Copilot works pretty much like any other application or web app that uses GPT-4 to generate text from a user’s prompt.

Of course, there’s more to Copilot for Microsoft 365 than just the generation of text; but rather than parrot Microsoft’s “how it works” sessions or documentation, I’m going to cut through Microsoft’s own terms for things, and highlight things that are important to know.

Copilot Services

Copilot for Microsoft 365 is effectively several web apps embedded into Microsoft 365 that work against common backend services, with each app (such as Copilot for Word or M365 Chat) possessing a different set of capabilities.

Currently, each app works on its own, so if you ask Copilot in Excel to convert a table from a worksheet into a Word document, it can’t.

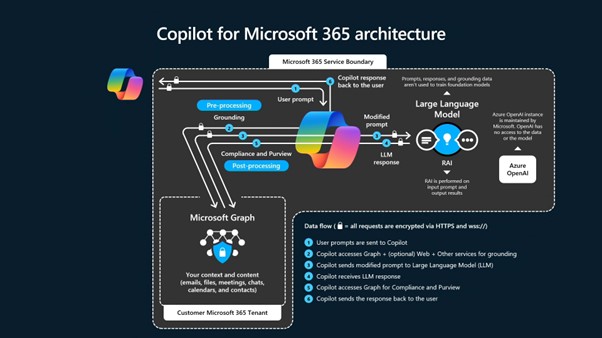

The backend services have several components, but the most important two are:

- A Retrieval Augmented Generation (RAG) engine – effectively what’s shown in Figure 1. In response to a prompt from a user, it can search Microsoft 365 via the Graph API or bring in information from an open or linked document, and it adds the results to the GPT user prompt behind the scenes.

- An Office “code generator” that orchestrates actions in Office – this adds a permissible set of pseudo code, known as the Office Domain Specific Language (ODSL), to the prompt sent to the Large Language Model (LLM) in apps like Word, Excel, and PowerPoint. It then validates the ODSL pseudo code generated by the LLM and compiles it into Office JavaScript or internal API calls to automate the action.
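The validate-then-compile step of the “code generator” can be pictured with a minimal sketch. Everything here is an assumption for illustration: the operation names, the dictionary shape of a statement, and the functions `validate_program` and `compile_program` are invented, and none of it is real ODSL or a Microsoft API. The idea it shows is only that generated statements are checked against an allow-list before anything is turned into a call.

```python
# Hypothetical allow-list: operation name -> argument names it accepts.
# These names are invented for illustration; they are not real ODSL.
ALLOWED_OPS = {
    "insert_slide": {"title"},
    "add_bullet": {"slide", "text"},
}


def validate_program(program: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the program is permitted."""
    errors = []
    for index, statement in enumerate(program):
        op = statement.get("op")
        if op not in ALLOWED_OPS:
            errors.append(f"statement {index}: operation {op!r} is not permitted")
            continue
        unexpected = set(statement.get("args", {})) - ALLOWED_OPS[op]
        if unexpected:
            errors.append(
                f"statement {index}: unexpected arguments {sorted(unexpected)}"
            )
    return errors


def compile_program(program: list[dict]) -> list[str]:
    """Turn a validated program into call strings that stand in for real API calls."""
    problems = validate_program(program)
    if problems:
        # Refuse to compile anything the allow-list does not cover.
        raise ValueError("; ".join(problems))
    calls = []
    for statement in program:
        args = ", ".join(
            f"{name}={value!r}"
            for name, value in sorted(statement.get("args", {}).items())
        )
        calls.append(f"{statement['op']}({args})")
    return calls
```

The design point is that the LLM never gets to run arbitrary code: it can only emit statements from a small, known vocabulary, and anything outside that vocabulary is rejected before compilation.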

Retrieval Augmented Generation is used in different ways because, in apps like Word, you will be working with the information in the document that’s open; but in Microsoft 365 Chat, you’ll almost always be searching across enterprise or other data.

Other data includes Bing search results (in which case your question/prompt will be sent outside of Microsoft 365 to Bing) or Microsoft 365 Search extensions. These can include Azure DevOps, Salesforce, Dynamics CRM, CSV files and SQL databases, local file shares, and any intranet or public websites you own and want to index.

Retrieval Augmented Generation is extremely important to how Copilot works. Microsoft isn’t fine-tuning an LLM, which is complex, expensive, and may not respect permissions. Instead, they do something known as grounding: giving the LLM (GPT-4) the accurate information it needs, alongside your prompt, so that the LLM’s job is to form words and sentences based on your data, rather than based on the data it was trained against. This makes it less susceptible to writing confidently incorrect answers, known as hallucinations.
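To make grounding concrete, here is a minimal sketch of how retrieved content might be combined with a user’s prompt before it is sent to an LLM. The `Snippet` type, the `build_grounded_prompt` function, and the instruction wording are all assumptions made for illustration; Copilot’s actual prompt format is not documented.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # hypothetical: where the text came from (file name, email subject)
    text: str    # the retrieved passage itself


def build_grounded_prompt(user_prompt: str, snippets: list[Snippet]) -> str:
    """Combine retrieved snippets with the user's prompt into one grounded prompt."""
    if snippets:
        # Number each snippet so an answer could cite its source.
        context = "\n".join(
            f"[{number}] {snippet.source}: {snippet.text}"
            for number, snippet in enumerate(snippets, start=1)
        )
    else:
        context = "(no matching content found)"
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {user_prompt}"
    )
```

The model is unchanged; only the prompt differs per user and per request, which is why grounding can respect permissions in a way that fine-tuning a shared model cannot.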

How Copilot Works with Documents

The Office “code generator” is how Copilot orchestrates actions like creating PowerPoint presentations from Word documents or adding PivotTables to Excel workbooks.

From watching Microsoft’s Ignite session on how Copilot works, you could be excused for thinking that a proportion of the “code generation” is on the client side – such as the compilation of ODSL into native Office JavaScript or internal API calls.

However, from what I’ve seen, Copilot appears to work almost entirely service-side, similar to the way Office scripts work. Even with a Copilot license, using the full version of Word or PowerPoint, it won’t work in a document stored on the local PC, another tenant, or your personal OneDrive.

Examining the traffic using Fiddler indicated that it’s likely that when you ask it to perform an action, such as to summarize the current document, it accesses the document remotely from the copy stored in the cloud, rather than providing the document text from the client itself.

This observed behavior supports the requirement for OneDrive when using Copilot. It also means some scenarios might confuse users, such as working across multiple tenants or using Copilot in Teams to summarize an externally-organized meeting, where it won’t work and will feel inconsistent.

Documents and other items, like emails, used by Copilot must be accessible by the user, both for them to be found by Search, and for any data to be included in a response. Items are searched for when using Microsoft 365 Chat, but not in context when using Copilot in Word, Excel, PowerPoint, and other “in context” experiences; instead, you need to reference a file to include.

Protected documents, such as those with Sensitivity Labels protected by Information Protection, must allow the user to copy from them. For example, if the user has access to the data and can copy and paste it into a new document themselves, then Copilot will be able to do it too. This ability to copy is termed “extract” from the admin’s perspective in Purview Information Protection.
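The two conditions described above (the user can access the item, and a protected item grants that user the “extract” right) can be sketched as a simple filter. The `Item` type and its fields are hypothetical and exist only to illustrate the logic; the real checks are performed by Microsoft 365 Search and Purview, not by code like this.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    name: str
    readers: set[str]              # hypothetical: users who can open the item
    label_protected: bool = False  # hypothetical: a sensitivity label applies encryption
    extract_allowed_for: set[str] = field(default_factory=set)  # users granted "extract"


def usable_by_copilot(item: Item, user: str) -> bool:
    """Return True if Copilot, acting for this user, could draw on the item."""
    if user not in item.readers:
        # The user cannot open the item, so it is never found or used.
        return False
    if item.label_protected and user not in item.extract_allowed_for:
        # The user can read the item but may not copy content out of it.
        return False
    return True
```

For example, a label-protected `Plan.docx` readable by two users, where only one of them has the extract right, would be usable by Copilot for that one user only.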

How Semantic Index Works with Microsoft 365 Search

Microsoft has produced a lot of explainer videos on Semantic Index and from watching them – which you absolutely should – you could be under the impression that it is a replacement for Microsoft 365 Search. It isn’t; it complements it by adding extra information behind the scenes before the search is undertaken.

What it does is widen the scope of searches undertaken by Copilot (and users) by adding contextually relevant and similar keywords to the underlying search, so that it includes more relevant items as the highest-ranking results. Copilot then uses the result from the Graph API call to “ground” the prompt.

For example, if you asked M365 Chat to “Find emails about when my flight is scheduled to land”, it might add terms such as “schedule,” “itinerary” and “airport” to the keywords. However, because it’s context-based it wouldn’t add words like “earth” and “ground” like it might if you asked it “How are our land and soil surveys progressing?”.
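The flight and soil example can be mimicked with a toy query expander. This is only a sketch of the observable behavior: the hand-written `RELATED` table and the `expand_query` function are assumptions, and the lookup table is a stand-in for however the Semantic Index actually derives related terms, which this sketch does not attempt to reproduce.

```python
# Hypothetical table: (ambiguous term, context word) -> extra search terms.
RELATED = {
    ("land", "flight"): ["schedule", "itinerary", "airport"],
    ("land", "soil"): ["earth", "ground"],
}


def expand_query(query: str) -> list[str]:
    """Return the query's words plus related terms that match its context."""
    words = [word.strip('.,?!"').lower() for word in query.split()]
    # Keep the original words once each, in their original order.
    terms = list(dict.fromkeys(word for word in words if word))
    for (term, context), extras in RELATED.items():
        # Only expand when the term and its context word both appear.
        if term in words and context in words:
            for extra in extras:
                if extra not in terms:
                    terms.append(extra)
    return terms
```

With this table, the flight query gains “schedule”, “itinerary”, and “airport” but not “earth”, while the soil survey query gains “earth” and “ground” instead.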

How Copilot Works with Azure OpenAI

Microsoft claims the GPT models are hosted within the Microsoft 365 compliance boundary and also use Azure OpenAI, and that no data from your Copilot usage is kept for monitoring or training. With Azure OpenAI, you create a deployment for a model in a specific Azure region and this remains static.

For Copilot in Microsoft 365, the LLM requests are served out of multiple regions, subject to local regulations. This would indicate that your tenant doesn’t get its “own” deployment, even if Azure OpenAI deployments are a concept relevant to Copilot.

Each underlying OpenAI API request is an end-to-end stateless transaction; it has to include the chat history, the context and guidance from the system prompt (what Copilot sends before the user prompt), and guidance on what the output should be, such as providing the ODSL language. Once the response is generated, the Azure OpenAI service has no reason to remember what happened, and technically the LLM can process each request entirely in memory.
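A short sketch shows what “stateless” means in practice: the caller rebuilds the full conversation for every request. The `role`/`content` message shape follows the convention used by chat-completion style APIs; the `build_request` function and the example strings are assumptions, and the actual payload Copilot sends is not documented.

```python
def build_request(
    system_prompt: str,
    history: list[tuple[str, str]],
    user_prompt: str,
) -> list[dict]:
    """Assemble the full message list that one stateless request must carry."""
    # The system prompt always comes first and carries the guidance.
    messages = [{"role": "system", "content": system_prompt}]
    # Replay every earlier turn, because the service remembers nothing.
    for user_text, assistant_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The new prompt goes last.
    messages.append({"role": "user", "content": user_prompt})
    return messages
```

Each follow-up question therefore produces a longer request than the one before it, since all earlier turns are sent again.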

Although logging of requests is possible with Azure OpenAI, Microsoft has stated that Copilot is opted out of the abuse monitoring that is normally in effect. Instead, Copilot keeps records of prompts from users within the Exchange mailbox, similar to Teams chat messages, with the same compliance controls used to govern their retention.

In addition to compliance controls and document controls from Purview, part of the Copilot service includes its own additional set of guardrails that cannot be customized. These strict controls are designed to prevent a user from intentionally (or unintentionally) prompting Copilot to create what Microsoft believes could be harmful or unethical content, over and above the guardrails trained into the underlying model itself.

Although these aren’t documented, it appears likely that these are a mix of system prompting (such as Copilot asking the LLM to refrain from generating certain content) and post-processing analysis. In general use, a user won’t see more restrictions than they would when using ChatGPT, but they may see different language used in the refusals to answer particular questions.

More Changes to Come

By observing Copilot in Microsoft 365, you’ll notice that it performs a level of quality analysis on its outputs; this means it’s likely that in many circumstances your question to Microsoft 365 Chat will result in several actual prompts to GPT-4, with the retrieval augmented generation process expanding its scope to additional areas, such as a web search, if the result is not effective. No doubt this will change as time goes on – as will many other aspects of how Copilot works over the coming months, so expect us to revisit this topic several times.

Great, insightful article.

What criteria or factors does it consider to rank search results?

How to manipulate/optimize semantic index within organization using Copilot M365?

You can’t manage or manipulate the semantic index. As to ranking, that concept doesn’t really exist as it’s more applicable to keyword searches. Everything depends on the quality of the prompt fed to the LLMs.

Is it an absolute requirement to have online services like Teams, Exchange Online, OneDrive for Business or Sharepoint Online in order to use M365 Copilot? I guess certain features will just not work, right? Like creating a PowerPoint from Word, since the data source must be online, not local. What is your take on that?

Yes. Copilot for Microsoft 365 depends on online services. For instance, you can’t use Copilot if your Exchange mailbox is hosted on-premises. And although Copilot can process the content of files stored outside SharePoint Online and OneDrive for Business when that data is loaded into apps (for instance, a document stored locally is loaded into Word), only information stored in online repositories can be used to ground prompts.

So does this also mean Copilot (formerly Bing Chat) will not work? This is now part of the service plan of copilot for M365

No. The Office apps connected to Copilot for Microsoft 365 depend on an Exchange Online mailbox. Copilot chat doesn’t use Microsoft 365 data.

Great article, thanks for sharing your learnings Steve.

@Quentin: find it in the official MS documentation, https://learn.microsoft.com/en-us/microsoft-365-copilot/microsoft-365-copilot-overview

Would it be possible to get the image “Copilot for Microsoft 365 architecture” in a better resolution? Thanks a lot for the article btw !