Blocking Access to File Content in Different Ways

Some time ago, I wrote about a new sensitivity label setting to block access to content services. The feature uses an advanced label setting that must be configured using PowerShell. The revamped Purview portal doesn’t allow administrators to enable the setting through its interface.

The idea is that if you block Microsoft content services from accessing documents, then functionality that depends on content services to process information, such as Microsoft 365 Copilot, DLP policy tips in Outlook, and some other features, can’t use the information in those documents. The sensitivity label setting creates the block by stopping content services from processing the content of labeled files.

Updating the Sensitivity Label Report Script

A reader pointed out that the article should include some PowerShell to help people who configure a label know that updating the setting worked. That was an easy fix. But it led me to check if the script to generate a report about sensitivity label settings included the block content service setting. The script didn’t, so I had to update it to highlight sensitivity labels that contain the block. If you use the script, please fetch the updated code from GitHub (link in article referenced above).

The Role of the Semantic Index

At roughly the same time, a discussion in the Viva Engage community hosted by the Microsoft Information Protection team reported that some documents protected by a label that blocked access to content services appeared in Microsoft 365 Chat (BizChat) results. How could this happen? If a label blocks access to content services, documents should be invisible to Microsoft 365 Copilot queries. At least, that was my assumption.

As it turns out, I was dead wrong. Controlling access to file content has nothing to do with searching. Copilot can find documents by reference to Microsoft Search and the semantic index. I hadn’t waited long enough to allow the semantic index to ingest newly created documents. When I checked, all Microsoft 365 Chat had to search against was the Microsoft Search index populated with document metadata. If a query didn’t find anything in the Search index, the document was invisible to Microsoft 365 Chat, or so it appeared.

Microsoft says that the semantic index connects users “with relevant information in your organization.” But like every other index, the semantic index must be maintained and updated with new material, and unwanted material must be removed. This work is done by background processes and it’s not predictable how long it takes to update the index for new or updated documents. Eventually the stars aligned, and Microsoft 365 Chat could find the documents I thought were blocked.

Microsoft 365 Chat Can Find Labeled Documents

The current Microsoft documentation makes it clear that “Although content with the configured sensitivity label will be excluded from Microsoft 365 Copilot in the named Office apps, the content remains available to Microsoft 365 Copilot for other scenarios. For example, in Teams, and in Graph-grounded chat in a browser.” I don’t think this documentation was online when I wrote the article, but I cannot be sure. When Microsoft talks about “Graph-grounded chat,” it means Microsoft 365 Chat. The named Office apps are Outlook, Word, Excel, and PowerPoint.

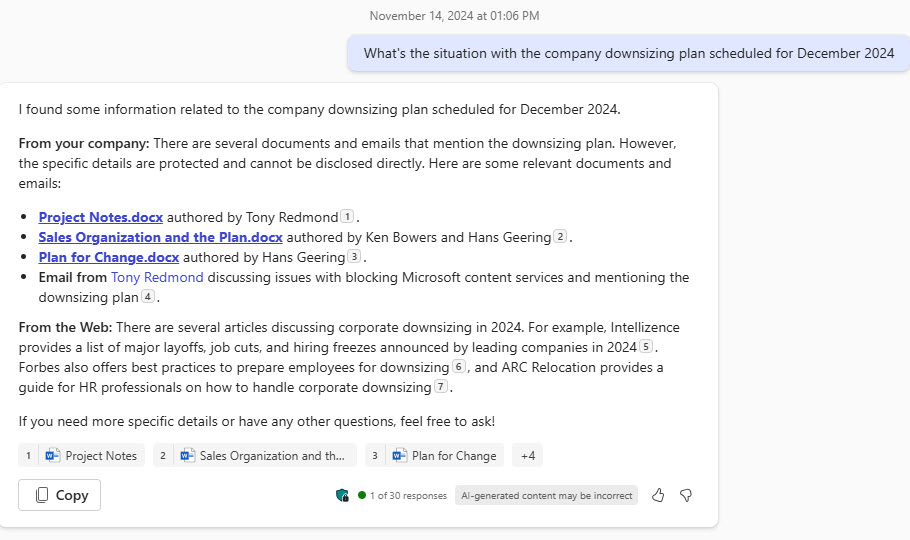

Figure 1 shows an example of a Microsoft 365 Chat interaction. The response reveals several documents about a sensitive topic that are assigned a sensitivity label that blocks access to content services. Copilot reports that the documents are available but protected (Copilot doesn’t have the right to extract the content), so it cannot reveal any details. Remember, Copilot acts in the context of the signed-in user, so these documents are available to the user through site membership or sharing links. The user can open any of the highlighted documents by clicking on its link.

The only time that the sensitivity label blocks Copilot is when information from an Office document needs to be sent to content services for processing. The automatic summary generated by Microsoft 365 Copilot for Word documents is an example of this kind of processing.

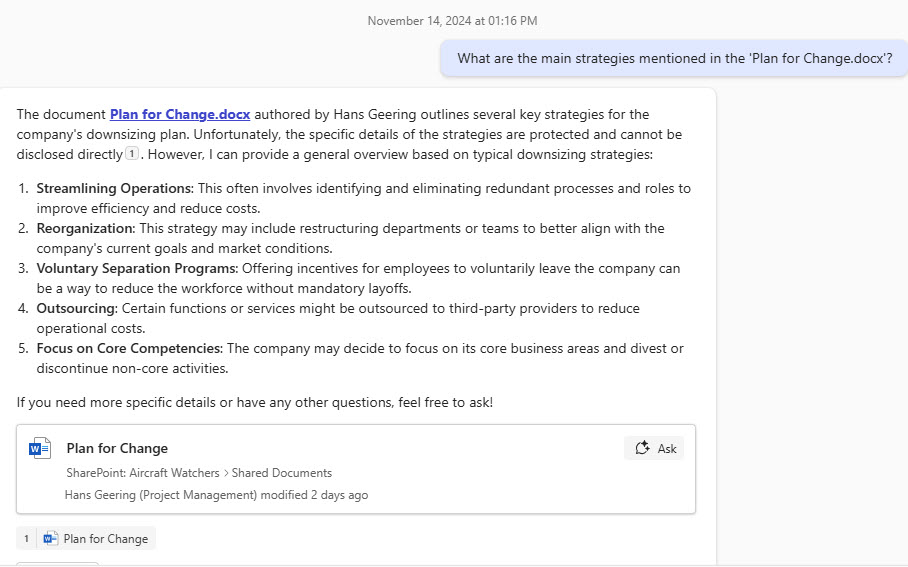

Another is when Microsoft 365 Chat needs to include content from a protected document in some generated text, such as when Copilot is asked to summarize the main points from a document. At this point, Copilot cannot use the document to ground the prompt it sends to the LLM because the sensitivity label blocks its attempt to extract the information (Figure 2). In this scenario, Copilot can still generate a response, but the information in that response comes from the LLM and anything it can find through a Bing search of websites. In fact, none of the main strategies reported by Copilot in Figure 2 are in the referenced (protected) document, which is why Copilot prefixes its generated text as “a general overview.”

The important point is that blocking access to content services by assigning a sensitivity label has no effect on searches performed by Microsoft 365 Chat. Copilot can find and include documents in its responses, but Copilot cannot perform further processing like summarizing a document, answering questions about what a document contains, or extracting content to reuse it in generated text.
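
The distinction between finding and processing can be captured in a small model. This is only a sketch of the behavior described above, not how Copilot is implemented: the `Document` class, its fields, and both functions are hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    user_has_access: bool  # via site membership or a sharing link
    extract_allowed: bool  # False when the label blocks content services


def chat_can_find(doc: Document) -> bool:
    # Search runs in the context of the signed-in user; the label plays no part.
    return doc.user_has_access


def chat_can_process(doc: Document) -> bool:
    # Summarizing or reusing content also needs the right to extract it.
    return doc.user_has_access and doc.extract_allowed


blocked = Document("Project plan.docx", user_has_access=True, extract_allowed=False)
print(chat_can_find(blocked), chat_can_process(blocked))
```

In this model, a labeled document still shows up in results, but any request that needs its content fails the second check.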

The Effect of the Block Download Policy

One of the features licensed by SharePoint Online Advanced Management is a block download policy. The effect of the policy is to force users to work with documents online because they cannot download files, including the temporary local copies that the Word, Excel, and PowerPoint desktop apps work on. Features like printing are also disabled. The block download policy is intended to control file access in sites holding very sensitive material.

As I worked with Microsoft 365 Copilot, I noticed that some of the files that it found were reported as protected even though the files had no sensitivity label. After some testing, it appears that the block download policy removes the right to extract content, which is exactly what the sensitivity label setting to block content access does. The net result is that Copilot treats files from sites where the block download policy is in force as “protected” documents that can be found but not processed thereafter. The same is true when a conditional access policy blocks access to SharePoint Online files from unmanaged devices.

The new “Microsoft Purview Data Loss Prevention for Microsoft 365 Copilot” (announced at Ignite 2024) supports a new DLP rule action to block access to document content by Copilot. The same approach is used: when DLP detects an attempt by Microsoft 365 Chat (the only app currently supported) to use a document with a specific sensitivity label, it removes the right for Copilot to extract document content, which means that Copilot can’t summarize documents or use the document content in responses. The right to extract content is fundamental to all the solutions that stop Copilot using document content.
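
To keep the four controls straight, here is a minimal sketch that treats each one as a flag that removes the right to extract content. The `FileContext` class, its field names, and the helper functions are all hypothetical; the model reflects only the behavior observed in this article, not any Microsoft API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileContext:
    label_blocks_content_services: bool = False
    block_download_policy: bool = False
    ca_blocks_unmanaged_device: bool = False
    dlp_rule_blocks_copilot: bool = False


# Hypothetical field names mapped to the controls discussed in the text.
CONTROLS = {
    "label_blocks_content_services": "sensitivity label setting",
    "block_download_policy": "block download policy",
    "ca_blocks_unmanaged_device": "conditional access policy",
    "dlp_rule_blocks_copilot": "DLP rule for Copilot",
}


def active_blocks(ctx):
    """Return the controls that remove the right to extract content."""
    return [name for field, name in CONTROLS.items() if getattr(ctx, field)]


def copilot_has_extract_right(ctx):
    # Any single control is enough for Copilot to treat the file as protected.
    return not active_blocks(ctx)


unlabeled_in_locked_site = FileContext(block_download_policy=True)
print(active_blocks(unlabeled_in_locked_site))
```

The example mirrors the case above where a file without a sensitivity label is still reported as protected because of where it is stored.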

File Metadata and Searching

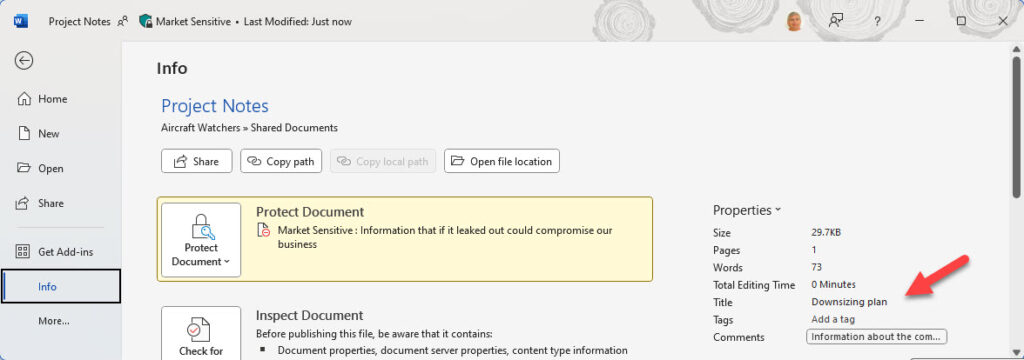

Even if Copilot might not be able to use document content in its responses, it absolutely can find sensitive documents. Another complication that users sometimes don’t understand is that document metadata is indexed by Microsoft Search and is therefore always available to Copilot. For instance, if phrases like “downsizing plan” are included in a document title or subject (Figure 3), the documents can be found by Copilot when it interrogates Microsoft Search.

There’s nothing strange here. Sensitivity labels have never protected file metadata. If you use sensitivity labels to encrypt file content, only the content is protected. The file metadata remains clear and available for indexing. If this didn’t happen, users wouldn’t be able to search for protected documents and Microsoft 365 solutions like Purview eDiscovery wouldn’t work.

The only way to prevent Microsoft 365 Copilot from finding information using metadata is to limit search. You can do that by blocking sites or document libraries from search results. Restricted SharePoint Search works by limiting Copilot (and everyone else) to searching up to 100 curated sites.
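
A toy index shows why protecting content doesn’t hide a file. The sketch below assumes an index that holds only titles and site names, and a hypothetical `excluded_sites` parameter standing in for sites blocked from search results. None of this reflects how Microsoft Search is built.

```python
from dataclasses import dataclass


@dataclass
class IndexedFile:
    title: str  # metadata stays in the clear even when content is protected
    site: str


def search(files, phrase, excluded_sites=frozenset()):
    """Match a phrase against titles, skipping sites removed from search."""
    needle = phrase.lower()
    return [
        f.title
        for f in files
        if f.site not in excluded_sites and needle in f.title.lower()
    ]


index = [
    IndexedFile("2025 Downsizing Plan.docx", site="HR-Confidential"),
    IndexedFile("Canteen Menu.docx", site="Intranet"),
]
print(search(index, "downsizing plan"))
print(search(index, "downsizing plan", excluded_sites={"HR-Confidential"}))
```

The first query finds the file through its title alone. Only removing the site from search makes the file disappear.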

Takeaways and Lessons Learned

Based on what I now know, the takeaways are:

- Don’t include metadata in sensitive files that might help intelligent agents find those files. Make sure that document titles and subjects do not reference any keywords that people might use to find files.

- Help users to understand what Copilot means when it refers to “protected” files.

- Consider protecting files containing confidential material with sensitivity labels with user-defined permissions. Copilot queries cannot access these files because their content is not indexed. Unlike sensitivity labels with predefined permissions, SharePoint Online cannot resolve user-defined permissions to index the content. Labels with user-defined permissions are more complicated for end users because of the need to set permissions for each document, but these labels are a very effective block against content indexing. However, they won’t stop Copilot finding documents through the document metadata indexed by Microsoft Search.

- If you use Teams to organize information, consider storing confidential information in a private channel. The extra level of access control imposed by channel membership will stop non-members gaining access to the files stored in the channel.
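
The first takeaway lends itself to a simple check. The sketch below scans a list of file titles for terms that people might search for. The term list and the `flag_titles` function are purely illustrative assumptions, and in practice the titles would come from an inventory of the files you want to review.

```python
import re

# Hypothetical terms; substitute whatever is sensitive in your organization.
SENSITIVE_TERMS = ["downsizing plan", "layoff", "acquisition"]


def flag_titles(titles, terms=SENSITIVE_TERMS):
    """Map each title to the sensitive terms it contains (whole-word, any case)."""
    patterns = [
        re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        for term in terms
    ]
    flagged = {}
    for title in titles:
        hits = [term for term, pat in zip(terms, patterns) if pat.search(title)]
        if hits:
            flagged[title] = hits
    return flagged


for title, hits in flag_titles(["Q3 Downsizing Plan.docx", "Lunch Menu.docx"]).items():
    print(f"{title}: {', '.join(hits)}")
```

Whole-word matching keeps the check from flagging titles that only contain a term as part of a longer word.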

Microsoft is working on other solutions to help control access to confidential information stored in SharePoint Online. According to information provided at a Microsoft session at the TEC 2024 conference, a new solution called Restricted Content Discoverability (RCD) will help by managing a deny list to stop Copilot accessing the content stored in complete sites. An Ignite announcement said that tenants with Microsoft 365 Copilot licenses will get SharePoint Advanced Management and be able to use features like RCD (early 2025).

Like every new technology, it takes time to master the details of administration. The process requires understanding of how technology is used by customers in real-life situations, complete with the imperfections of what those situations might throw up. The ability to control and manage Microsoft 365 Copilot is gradually becoming better as we all learn how to deal with the good and bad sides of generative AI.