SharePoint Online is an essential platform for modern enterprises, underpinning document management, collaboration, and organizational knowledge sharing at scale. As organizations expand their use of Microsoft 365, their document libraries can grow to tens or even hundreds of thousands of items. Without proper organization and optimization, these large libraries often cause performance issues, slower page loads, delayed search results, and user frustration. In some cases, organizations may encounter SharePoint’s built-in limits, such as the 5,000-item list view threshold, which adds further complexity to managing and accessing content.

This article presents practical strategies to keep large SharePoint Online libraries responsive and manageable, focusing on the approaches that address the most common challenges and the performance issues with the greatest impact on end users. It begins with foundational best practices, such as enabling the modern experience, indexing frequently filtered columns, and structuring content in filtered views to avoid list view throttling. The core focus then shifts to high-performance automation, providing actionable guidance and production-ready scripts using PnP PowerShell for bulk metadata updates. Finally, the article explores the Microsoft Graph API as an alternative for performing the same updates, showing how its direct control over data retrieval gives administrators another effective tool for scaling their libraries while maintaining performance and reliability.

Common Challenges with Large Document Libraries

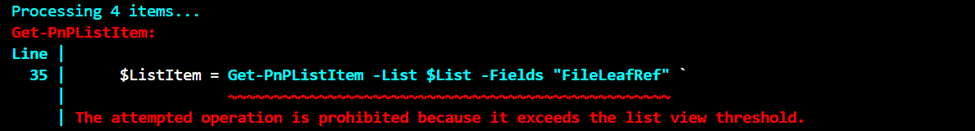

1. List View Threshold Limitations: SharePoint Online enforces a 5,000-item list view threshold for queries. While SharePoint supports libraries with millions of items, attempting to retrieve all items in a single query can result in throttling or query failure.

2. Slow Metadata Operations: Updating or reading metadata across thousands of files can take a long time, especially if processed item by item.

3. Search and Filter Performance: Queries that filter on unindexed columns can significantly increase retrieval time. Similarly, sorting or grouping on unindexed columns can trigger errors or delays.

4. Versioning and Storage Overhead: Libraries with multiple document versions accumulate storage quickly. Large numbers of versions increase library size and reduce responsiveness when browsing, downloading, or performing bulk operations.

5. Inefficient Bulk File Management: The SharePoint Online browser interface is not designed for bulk operations across large numbers of files, which makes automation with scripts essential.

Best Practices for Library Configuration

Several library-level adjustments can significantly improve performance.

Enable Modern Experience

Modern SharePoint libraries are optimized for performance. They handle large datasets more efficiently than classic libraries, supporting faster rendering, client-side rendering of list items, and improved search indexing. Administrators should ensure all libraries with large content use the modern experience.

Index Frequently Filtered Columns

Columns that are commonly used in filters, sorting, or grouping should be indexed. Indexing enables the SharePoint engine to perform faster queries and reduces the chance of hitting the list view threshold. For example, indexing a “Project ID” column in a library with 50,000 files allows filtered views for specific projects to load quickly.
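As a minimal sketch of how the indexing step could be scripted rather than done through the list settings page, the helper below checks a column and requests an index if one is missing. It assumes an active Connect-PnPOnline session; Enable-ColumnIndex is a hypothetical helper name, and the list and column names passed to it are placeholders.

```powershell
# Sketch only: assumes an active Connect-PnPOnline session.
# Enable-ColumnIndex is a hypothetical helper name used for illustration.
function Enable-ColumnIndex {
    param(
        [Parameter(Mandatory)] [string] $List,
        [Parameter(Mandatory)] [string] $Field   # internal name of the column
    )

    $column = Get-PnPField -List $List -Identity $Field

    if ($column.Indexed) {
        Write-Host "Column '$Field' is already indexed."
        return
    }

    # Setting the Indexed property asks SharePoint to build an index on the column
    Set-PnPField -List $List -Identity $Field -Values @{ Indexed = $true } | Out-Null
    Write-Host "Index requested for column '$Field'."
}
```

A call might look like `Enable-ColumnIndex -List "Documents" -Field "ProjectID"`, where both values are examples. It is generally easier to add indexes while a library is still small, so it is worth doing this before content grows.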

Split Content into Indexed Views

Rather than attempting to display all items in a single view, libraries should be organized into multiple views that filter and segment content based on indexed columns. This strategy ensures that each query remains under the 5,000-item threshold, avoiding throttling issues while still providing quick access to relevant files.
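The sketch below shows one way such a view could be created with PnP PowerShell, using a CAML filter on an indexed column. It assumes an active Connect-PnPOnline session and a text column whose internal name is ProjectID; Add-ProjectView, the view title, and the chosen fields are illustrative assumptions rather than part of the article's scripts.

```powershell
# Sketch only: assumes an active Connect-PnPOnline session.
# Add-ProjectView is a hypothetical helper; "ProjectID" is an assumed internal column name.
function Add-ProjectView {
    param(
        [Parameter(Mandatory)] [string] $List,
        [Parameter(Mandatory)] [string] $ProjectId
    )

    # Escape the value so characters such as & or < do not break the CAML XML
    $safeId = [System.Security.SecurityElement]::Escape($ProjectId)

    # Filter on the indexed column so the view returns only one project's files
    $query = "<Where><Eq><FieldRef Name='ProjectID' /><Value Type='Text'>$safeId</Value></Eq></Where>"

    # Paged view with a modest row limit keeps each page request small
    Add-PnPView -List $List -Title "Project $ProjectId" `
        -Fields "DocIcon", "LinkFilename", "ProjectID", "Modified" `
        -Query $query -RowLimit 100 -Paged
}
```

For example, `Add-ProjectView -List "Documents" -ProjectId "PRJ-001"` would create one view for a single project. The filter only helps if it narrows the result to fewer than 5,000 items, so very large projects may need a second indexed filter column.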

Automating Large Library Operations with PnP PowerShell

PnP PowerShell is an open-source, community-maintained module that provides a wide range of cmdlets for working with SharePoint Online, including operations on lists, libraries, views, and content types.

Connecting to SharePoint Online

Before performing operations, administrators must establish a connection using an app registration for app-only authentication:

Connect-PnPOnline -Url "https://yourtenant.sharepoint.com/sites/yoursite" -ClientId "<ClientID>" -Tenant "<TenantName>" -Thumbprint "<CertificateThumbprint>"

This app-only authentication lets PnP PowerShell run unattended with a service principal, avoiding user sessions and improving reliability for headless jobs. Use this method for tenant- or site-level admin tasks such as provisioning, inventory/reporting, and permissions changes that don’t depend on a user’s identity.

The Challenge with Simple Loops: A Common Pitfall

One way to handle bulk updates in SharePoint is to read input from a CSV file and loop through each item, updating them one by one. While this logic is straightforward, it contains critical performance flaws that cause it to fail dramatically when applied to large libraries, leading to errors and timeouts.

Consider the following script. It attempts to read a CSV, find the corresponding file in a library, and update its metadata. If you haven’t used PnP.PowerShell in this tenant yet, start with Installing the Module and Preparing Your Environment. It walks through the one-time setup so you’re ready to run the examples below.

    # Connect to SharePoint with Entra app registration
    Connect-PnPOnline -Url "https://tenant.sharepoint.com/sites/sitename" `
        -ClientId "<EntraAppClientID>" -Interactive

    # Import CSV with filenames and new metadata
    $ItemsToUpdate = Import-Csv -Path "./documents-to-update.csv"

    foreach ($Row in $ItemsToUpdate) {
        $FileName = $Row.FileName
        $NewStatus = $Row.NewStatus

        # Find the list item and update metadata
        $ListItem = Get-PnPListItem -List "<LibraryName>" -Fields "FileLeafRef" |
            Where-Object { $_["FileLeafRef"] -eq $FileName }

        if ($ListItem) {
            Set-PnPListItem -List "<LibraryName>" -Identity $ListItem.Id `
                -Values @{ "Status" = $NewStatus }
            Write-Host "Updated: $FileName -> $NewStatus"
        }
    }

This script looks simple (the full script is available from GitHub), but it runs into three major roadblocks (Figure 1 shows one) when dealing with large libraries. First, it tries to download the entire library to find a file, which is like asking for the entire phone book just to find one number. SharePoint will shut this down immediately on any list with over 5,000 items.

Second, it updates files one at a time, creating thousands of individual server requests. This incredibly “chatty” process is slow and practically guarantees you’ll get throttled.

Finally, the script isn’t built to handle throttling; when it hits a limit, it simply crashes, leaving the job half-done.
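To illustrate the first problem, here is a hedged sketch of one alternative to querying the library for every CSV row: read the library once in pages and build an in-memory lookup from file name to item ID. New-FileLookup is a hypothetical helper, and this is not the approach taken by the article's optimized script, which locates files by URL instead.

```powershell
# Sketch only: New-FileLookup is a hypothetical helper name.
# It expects items that expose a "FileLeafRef" value and an Id, as list items do.
function New-FileLookup {
    param(
        [Parameter(Mandatory)] [AllowEmptyCollection()] [object[]] $Items
    )

    # PowerShell hashtables compare string keys case-insensitively
    $lookup = @{}
    foreach ($item in $Items) {
        $name = $item["FileLeafRef"]
        # Keep the first match; the same file name can exist in several folders
        if ($name -and -not $lookup.ContainsKey($name)) {
            $lookup[$name] = $item.Id
        }
    }
    return $lookup
}

# Example usage (assumes an active Connect-PnPOnline session):
# $items  = Get-PnPListItem -List "<LibraryName>" -PageSize 2000 -Fields "FileLeafRef"
# $lookup = New-FileLookup -Items $items
# $itemId = $lookup[$Row.FileName]
```

The -PageSize parameter makes Get-PnPListItem fetch the library in pages rather than in a single query, and the lookup means the library is read once instead of once per CSV row. Because only the first match per file name is kept, this sketch suits libraries where file names are unique.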

Solving the problems of throttling, query limits, and inefficient updates requires a smarter approach built on batch processing and intelligent error handling. The production-ready script is engineered to handle these challenges by:

- Using New-PnPBatch to queue updates so that they reach the server in a small number of batched requests instead of one request per item.

- Implementing a retry loop that automatically waits and re-sends a batch if it is throttled.

- Finding files efficiently by their direct server-relative URL instead of querying the entire list.

These changes make the code run significantly faster, and a similar approach is worth considering for any large-scale operation in SharePoint Online.

    # Connect to SharePoint
    Connect-PnPOnline -Url "https://tenant.sharepoint.com/sites/sitename" `
        -ClientId "<EntraAppClientID>" -Interactive

    # Import CSV with files and new metadata
    $rows = Import-Csv -Path "./documents-to-update.csv"

    # Get reference to the library
    $list = Get-PnPList -Identity "<LibraryName>"

    # Process in batches
    $batch = New-PnPBatch

    foreach ($r in $rows) {
        $fileName = $r.FileName.Trim()
        $newStatus = $r.NewStatus
        $fileUrl = "/sites/sitename/<FolderPath>/$fileName"

        # Retrieve file and update its metadata in batch
        $item = Get-PnPFile -Url $fileUrl -AsListItem -ErrorAction SilentlyContinue

        if ($item) {
            Set-PnPListItem -List $list -Identity $item.Id -Values @{ "Status" = $newStatus } -Batch $batch
            Write-Host "Queued for update: $fileName -> $newStatus"
        } else {
            Write-Warning "File not found: $fileName"
        }
    }

    # Execute batch updates
    Invoke-PnPBatch -Batch $batch
    Write-Host "Bulk update complete."

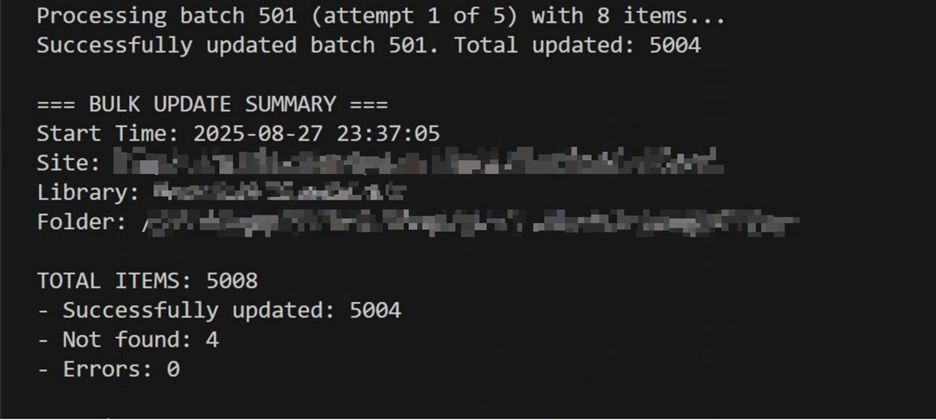

By optimizing PnP code (download the script from GitHub), administrators can perform large-scale updates confidently, knowing that the process avoids SharePoint Online’s limitations and provides clear feedback on its progress, as shown in Figure 2.
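The retry behavior described earlier is not part of the excerpt shown above. As a minimal sketch of the idea, the helper below re-runs an action with exponential backoff when the error looks like throttling. Invoke-WithRetry is a hypothetical helper, and matching on 429, 503, or the word "throttl" in the error message is an assumption about how throttling surfaces, not documented PnP PowerShell behavior.

```powershell
# Sketch only: Invoke-WithRetry is a hypothetical helper name.
# The throttling check is an assumption based on the error message text.
function Invoke-WithRetry {
    param(
        [Parameter(Mandatory)] [scriptblock] $Action,
        [int] $MaxAttempts = 5,
        [int] $InitialDelaySeconds = 2
    )

    $delay = $InitialDelaySeconds
    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            return & $Action
        } catch {
            $isThrottled = $_.Exception.Message -match '429|503|throttl'

            # Give up on non-throttling errors or when attempts are exhausted
            if (-not $isThrottled -or $attempt -eq $MaxAttempts) {
                throw
            }

            Write-Warning "Attempt $attempt was throttled; waiting $delay seconds before retrying."
            Start-Sleep -Seconds $delay
            $delay = $delay * 2   # exponential backoff
        }
    }
}
```

A call such as `Invoke-WithRetry -Action { Invoke-PnPBatch -Batch $batch -ErrorAction Stop }` shows the intended use; -ErrorAction Stop turns errors into terminating errors so the catch block can see them. Whether a partially executed batch can safely be sent again should be verified in a test environment before relying on this pattern.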

Leveraging the Microsoft Graph API

For administrators seeking full control over SharePoint Online operations, the Microsoft Graph API provides a REST-based interface to Microsoft 365 services. Unlike PnP PowerShell or the Microsoft Graph PowerShell SDK, which wrap the underlying API calls in convenient cmdlets, working with the Graph API directly requires handling HTTP requests, authentication tokens, and JSON payloads manually.

Before running Graph API scripts, a Microsoft Entra ID app registration is required. To access SharePoint sites, an app can use the Sites.ReadWrite.All Graph permission. However, following the principle of least privilege, it’s usually better to use the Sites.Selected permission to restrict access to the sites that an app needs to use. Depending on what the app does, other Graph permissions might be required.

Authentication is handled via the client credentials flow. In this example, the script uses the app’s identifier and a client secret to obtain an OAuth 2.0 access token from Entra ID. Using a client secret is acceptable for testing, but it’s a terrible idea for production, where X.509 certificates are recommended to secure app-only access.

Selective property fetches let administrators retrieve only the information needed, reducing payload sizes and speeding up queries. The following script authenticates with Microsoft Graph using an OAuth 2.0 token, retrieves the SharePoint site ID and the target list’s ID, and then finds a single item by file name and updates its metadata. Resolving the site and list is the essential first step in securely accessing and automating SharePoint resources programmatically.

    # Placeholder values (assumptions for illustration): replace with your own
    $tenantId     = "<TenantId>"
    $clientId     = "<EntraAppClientID>"
    $clientSecret = "<ClientSecret>"
    $siteHost     = "tenant.sharepoint.com"
    $sitePath     = "/sites/sitename"
    $listName     = "<LibraryName>"

    # Get OAuth2 token from Microsoft Entra ID
    $body = @{
        client_id     = $clientId
        scope         = "https://graph.microsoft.com/.default"
        client_secret = $clientSecret
        grant_type    = "client_credentials"
    }
    $resp = Invoke-RestMethod -Method Post -Uri "https://login.microsoftonline.com/$tenantId/oauth2/v2.0/token" -Body $body
    $Token = $resp.access_token

    $Headers = @{
        Authorization  = "Bearer $Token"
        "Content-Type" = "application/json"
    }

    # Resolve Site and List
    # ${siteHost} is delimited with braces so PowerShell does not read "$siteHost:" as a scoped variable
    $siteResp = Invoke-RestMethod -Headers $Headers -Uri "https://graph.microsoft.com/v1.0/sites/${siteHost}:$sitePath"
    $siteId = $siteResp.id

    $listResp = Invoke-RestMethod -Headers $Headers -Uri "https://graph.microsoft.com/v1.0/sites/$siteId/lists?`$filter=displayName eq '$listName'"
    $listId = $listResp.value[0].id

    # Update metadata for one item by filename
    $fileName = "Document1.docx"
    $newStatus = "Approved"

    # Find item by file leaf ref
    # Graph can reject filters on columns that are not indexed, especially in large lists
    $itemResp = Invoke-RestMethod -Headers $Headers -Uri "https://graph.microsoft.com/v1.0/sites/$siteId/lists/$listId/items?`$filter=fields/FileLeafRef eq '$fileName'"
    $itemId = $itemResp.value[0].id

    # Stop early rather than sending a PATCH without an item ID
    if (-not $itemId) {
        throw "File not found: $fileName"
    }

    # Patch the metadata
    $patchBody = @{
        fields = @{
            Status = $newStatus
        }
    } | ConvertTo-Json

    Invoke-RestMethod -Method Patch -Uri "https://graph.microsoft.com/v1.0/sites/$siteId/lists/$listId/items/$itemId" -Headers $Headers -Body $patchBody

You can download the script from GitHub. The scripts are shared to demonstrate an underlying idea, not as a drop-in production solution. Please make sure you try the code out in a safe test environment before wider use.
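The selective property fetches mentioned earlier can be combined with paging when more than one item is needed. The sketch below follows the @odata.nextLink value that Graph returns while more pages remain. Get-GraphPagedResult is a hypothetical helper, and the example URI assumes the $siteId, $listId, and $Headers variables from the script above plus a Status column.

```powershell
# Sketch only: Get-GraphPagedResult is a hypothetical helper name.
function Get-GraphPagedResult {
    param(
        [Parameter(Mandatory)] [string] $Uri,
        [Parameter(Mandatory)] [hashtable] $Headers
    )

    $results = [System.Collections.Generic.List[object]]::new()
    $next = $Uri

    while ($next) {
        $page = Invoke-RestMethod -Method Get -Uri $next -Headers $Headers
        foreach ($entry in $page.value) {
            $results.Add($entry)
        }
        # Graph includes @odata.nextLink only while more pages remain
        $next = $page.'@odata.nextLink'
    }

    return $results
}

# Example usage: request only the item ID and two fields per item
# $uri = "https://graph.microsoft.com/v1.0/sites/$siteId/lists/$listId/items" +
#        "?`$select=id&`$expand=fields(`$select=FileLeafRef,Status)"
# $items = @(Get-GraphPagedResult -Uri $uri -Headers $Headers)
```

Wrapping the call in `@(...)` keeps the result an array even when only one item comes back. Limiting the response with $select and $expand keeps each page small, which matters more as the library grows.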

Which Tool Should You Choose? PnP PowerShell vs. Microsoft Graph API

When deciding between automation tools, the choice is between simplicity and control. PnP PowerShell is a good tool for administrators who need to work with SharePoint and OneDrive data. Its cmdlet-driven approach is easy to use and specifically designed to simplify complex SharePoint tasks like batch updates. In contrast, the Microsoft Graph API allows developers to manage and interact with resources through direct REST calls, making it especially suitable for advanced, performance-critical scenarios or integration into custom applications. In summary, administrators should start with PnP PowerShell for efficient SharePoint Online administration and use Microsoft Graph for cross-cloud development that requires granular control.

Managing and updating metadata in large SharePoint Online libraries can quickly become challenging if done manually. Automation is key, and both PnP PowerShell and the Microsoft Graph API offer effective solutions. By leveraging batch processing, structured CSV inputs, and proper Entra ID authentication, administrators can perform bulk updates efficiently, avoid throttling, and maintain library performance. The examples shown here highlight the main logic, while full production-ready scripts with robust error handling are available on GitHub for reference.