Let’s Discuss Investments and Security

As can happen when browsing the internet, two interesting pieces of information came to hand this week. The first is a Goldman Sachs report titled “Generative AI: Too much spend, too little benefit?” (a redacted version is available online). The document is interesting because it reflects a growing concern that technology companies are overspending to keep pace with developments in AI. Microsoft continues to spend heavily on datacenters. In their FY24 Q4 results, the $19 billion of capital expenditure reported was mostly attributed to Cloud and AI. Satya Nadella said that roughly 60% of the investment represented “kit,” meaning the servers and other technology in the datacenters.

Analysts ask how companies like Microsoft will generate a return on this kind of investment. Microsoft can point to big sales, like the 160,000 Copilot for Microsoft 365 seats taken by EY. At list price, that’s $57.6 million annually, and Microsoft hopes that many more of the 400 million paid Office 365 seats will make similar purchases. At least, that seems to be the plan.
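
The arithmetic behind that figure is easy to check. The sketch below assumes a list price of $30 per user per month, which is the price the $57.6 million figure implies. The function name is made up for illustration, and the 1% adoption rate in the second calculation is purely hypothetical, not a forecast.

```python
def annual_copilot_cost(seats: int, monthly_price_usd: int = 30) -> int:
    """Annual license cost in USD for a number of Copilot seats."""
    return seats * monthly_price_usd * 12


# The EY deal cited above: 160,000 seats at the assumed list price.
ey_cost = annual_copilot_cost(160_000)
print(f"EY: ${ey_cost / 1e6:.1f} million per year")

# Hypothetical: 1% of the 400 million paid Office 365 seats buy Copilot.
hypothetical_seats = 400_000_000 * 1 // 100
hypothetical_cost = annual_copilot_cost(hypothetical_seats)
print(f"1% adoption: ${hypothetical_cost / 1e9:.2f} billion per year")
```

Set against capital expenditure of $19 billion in a single quarter, the calculation shows why analysts want to know how many seats it takes to generate a return.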

What I hear from tenants who have invested in Copilot for Microsoft 365 is that they struggle to find real data to prove that using generative AI is good for the bottom line. They can discover what kind of interactions users have with Copilot but can’t calculate savings. The data available at present is simplistic and doesn’t tell them how features like drafting documents and emails or summarizing Teams meetings or email threads work for actual users. Will an internal email that’s better phrased deliver value to the company? Will someone who skips a meeting because Copilot generated an intelligent recap use the time saved to do extra work? And so on.

More than License Costs to Run Copilot

It’s not just the expenditure on Copilot licenses that needs to be justified either. Additional support and training is needed to help users understand how to construct effective prompts. Internal documentation might be created, like custom mouse mats to remind users about basic Copilot functionality. Selecting the target audience for Copilot takes effort too, even if you base the decision on usage data for different applications.

And then there’s the work to secure the tenant properly so that Copilot doesn’t reveal any oversharing flaws. Restricted SharePoint Search seems like overkill because it limits enterprise search to 100 curated sites. Archiving old SharePoint Online sites to remove them from Copilot’s line of sight seems like a better approach, especially as the content remains available for eDiscovery and other compliance solutions. A new sensitivity label setting to block access to content services also deserves consideration because it means that Copilot cannot access the content. Applying the label to the most confidential and sensitive files stored in SharePoint Online and OneDrive for Business is a good way to stop Copilot inadvertently disclosing their content in a response to a user prompt.

The bottom line is that the investment a Microsoft 365 tenant makes to introduce Copilot for Microsoft 365 cannot be measured by license costs alone. There are a bunch of other costs that must be taken into consideration and built into IT budgets. The consequences of not backing Copilot up with the necessary investment in tenant management and security might not be pretty.

Copilot in the Hands of the Bad Guys

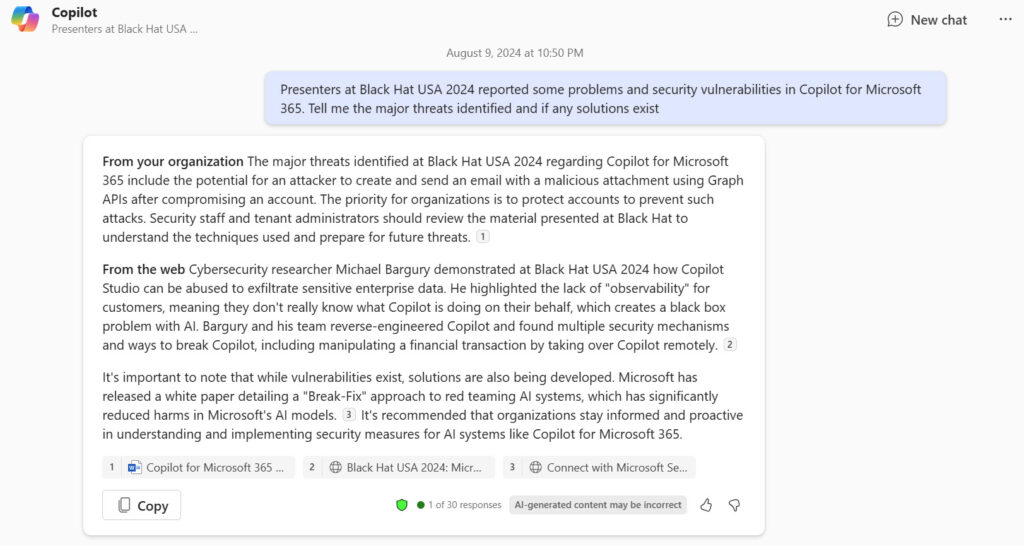

Which brings me to the second piece of information, a session from the Black Hat USA 2024 conference titled “Living off Microsoft Copilot” which promised:

Whatever your need as a hacker post-compromise, Microsoft Copilot has got you covered. Covertly search for sensitive data and parse it nicely for your use. Exfiltrate it out without generating logs. Most frightening, Microsoft Copilot will help you phish to move laterally.

Breathless text indeed, but it’s worrying that Copilot for Microsoft 365 is being investigated to see how it can be used for malicious purposes. (Links for the material featured and a PDF of the presentation are available online.)

The presentation is an interesting insight into those who think about bending software to their own purposes. A compromised account in a Microsoft 365 tenant with a Copilot license is the starting point for the techniques reported in the presentation. If an attacker cannot penetrate a tenant to run Copilot for Microsoft 365, they can’t take advantage of any weakness in SharePoint permissions. But if they can get into an account, Copilot becomes an interesting exploitation tool because of its ability to find “interesting” information much more quickly than an attacker could do manually.

This is a good reminder that defending user accounts with strong multifactor authentication (like the passkey support in the Microsoft Authenticator app) stops most attacks dead. Keeping an eye on high-priority permissions assigned to apps is also critical for tenant hygiene lest an attacker sneak in using a malicious app and set up their own account to exploit. Microsoft is still hardening systems and products because of their recent experience with the Midnight Blizzard attack. Tenants should follow the same path.
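
As a rough illustration of what keeping an eye on high-priority app permissions can mean in practice, the sketch below scans an inventory of apps and flags any that hold permissions on a watch list. The inventory format, the app names, and the `flag_risky_apps` helper are all hypothetical. In a real tenant, the data would come from an export of the apps registered in the tenant and their assigned permissions. The watch list uses Microsoft Graph permission names, but which permissions count as high priority is a judgment call for each tenant.

```python
# Watch list of permissions to review. A judgment call, not an official list.
HIGH_PRIORITY = {
    "Mail.ReadWrite",
    "Mail.Send",
    "Files.ReadWrite.All",
    "Sites.FullControl.All",
    "Directory.ReadWrite.All",
}


def flag_risky_apps(apps: list[dict]) -> dict[str, list[str]]:
    """Map each app name to the sorted high-priority permissions it holds."""
    flagged = {}
    for app in apps:
        risky = sorted(HIGH_PRIORITY.intersection(app["permissions"]))
        if risky:
            flagged[app["name"]] = risky
    return flagged


# Hypothetical inventory, standing in for a real export of app permissions.
inventory = [
    {"name": "Room Booking Bot", "permissions": ["Calendars.Read", "User.Read"]},
    {
        "name": "Unknown Sync Tool",
        "permissions": ["Mail.Send", "Sites.FullControl.All", "User.Read"],
    },
]

for name, perms in flag_risky_apps(inventory).items():
    print(f"Review {name}: {', '.join(perms)}")
```

Running a check like this on a schedule and comparing the output with the previous run is one way to spot an app that has quietly gained permissions it should not have.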

Accessing Documents with Copilot

In most cases, the techniques use Copilot for Microsoft 365 chat (Figure 1) to explore access to documents. The thing that always must be remembered about Copilot is that it impersonates the signed-in user. In general, any file that the signed-in user can access is available to Copilot.

Files containing salary information receive particular interest in the exploits, but I have got to believe that any document or spreadsheet holding this kind of information will be in a secure SharePoint site (perhaps even one that doesn’t allow files to be downloaded) and the files have a sensitivity label to block casual access by people who don’t have the rights to see this kind of information.

Some of the exploits feature files protected by sensitivity labels, which might seem alarming. However, a sensitivity label grants access based on the signed-in user. If Copilot finds a labeled document and the label grants the signed-in user rights to access the content, opening and using the file is no more difficult than accessing an unprotected file. The new sensitivity label setting to block access to Microsoft content services stops Copilot accessing content, even if the user has rights.
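
The access rules described in this section can be summed up in a small conceptual model. This is only a sketch of the logic as described here, not how Microsoft implements the checks. The `File` class, its field names, and the sample users and files are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class File:
    name: str
    readers: set                        # users with permission to the file
    label_users: Optional[set] = None   # users granted rights by the label; None means unlabeled
    blocks_content_services: bool = False  # label setting that blocks content services


def copilot_can_use(file: File, user: str) -> bool:
    """Copilot acts as the signed-in user, so it is bound by that user's access."""
    if user not in file.readers:
        return False
    if file.label_users is not None and user not in file.label_users:
        return False
    # With the blocking setting on, even an authorized user cannot
    # reach the content through Copilot.
    return not file.blocks_content_services


salaries = File("salaries.xlsx", readers={"ann", "bob"}, label_users={"ann"})
board = File(
    "board-minutes.docx",
    readers={"ann"},
    label_users={"ann"},
    blocks_content_services=True,
)

print(copilot_can_use(salaries, "ann"))  # True: permission and label rights
print(copilot_can_use(salaries, "bob"))  # False: the label grants bob no rights
print(copilot_can_use(board, "ann"))     # False: the label blocks content services
```

The model makes the point that a compromised account gives an attacker exactly what its user could reach, and that the blocking label setting is the only control here that holds even when the user has rights.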

The most interesting demonstrations are how to inject data into Copilot answers. That’s worrisome and it is something that Microsoft should harden to prevent any interference with information flowing back from Copilot in response to user prompts. Progress might already have happened because when I tried to replicate the technique, Copilot responded with:

“I must clarify that I cannot perform web searches or provide direct links to external websites. Additionally, I cannot convert answers into binary or perform actions outside of our chat.”

Email Spear Phishing

Apart from using Copilot to craft the message text in the style and words of the signed-in user (a nice touch) and finding their top collaborators to use as targets, the spear phishing exploit wasn’t all that exciting. It doesn’t take much to create and send an email with a malicious attachment using Graph APIs. Again, this is after an account is compromised, so the affected tenant is already in a world of hurt.

What Tenants Should Do Next

Presenting Copilot for Microsoft 365 at a conference like Black Hat USA 2024 automatically increases the interest security people have in probing for weaknesses that might exist in Copilot. The exploits displayed so far are not particularly worrying because they depend on an attacker being able to penetrate a tenant to compromise an account. Even if they get past weak passwords, the compromised account might be of little value if it doesn’t have a Copilot license and doesn’t have access to any “interesting” sites. But once an attacker is in a tenant, all manner of mischief can happen, so that’s why the priority must be to keep attackers out by protecting accounts.

The first thing organizations running Copilot for Microsoft 365 should do is have their security staff and tenant administrators review the material presented at Black Hat to understand the kind of techniques being used that might develop further in the future. Maybe even simulate an attack by practicing some of the techniques on standard user accounts with Copilot licenses. See what happens, look where you think weaknesses might lurk, and document and fix any issues.

In terms of practical steps that Microsoft 365 tenants should take, here are five that I consider important:

- Secure user accounts with strong multifactor authentication.

- Check apps and permissions regularly.

- Ensure permissions assigned to SharePoint Online sites are appropriate.

- Consider archiving or removing obsolete sites.

- Use sensitivity labels to protect confidential information and block Copilot access to these files.

And keep an eye on what happens at other conferences. I’m sure that Black Hat 2024 won’t be the last conference where Copilot for Microsoft 365 earns a mention.

The current AI tools have their uses (ignoring those for attackers!) but the technology is massively overhyped. Because of the hype, companies such as Microsoft have to invest big to keep their shareholders happy. If Microsoft doesn’t, the hype merchants will claim Microsoft is falling behind.