Apps, Data, Compliance, Privacy, Licensing, and Other Microsoft 365 Copilot Concerns

Following Microsoft’s March 16 launch of Microsoft 365 Copilot, I wrote an article cautioning people to look past the hyperbole and carefully scripted demonstrations to consider what we don’t know about the technology. In other words, after the glitz fades and the hype subsides, what does Copilot mean in practical terms?

Microsoft says that Copilot is a “sophisticated processing and orchestration engine” that brings together user data stored in Microsoft 365, the Graph, and GPT-4 large language models. The demonstrations were stunning and highlighted what technology can do in controlled circumstances. What’s clear is that:

- Microsoft will incorporate Copilot options in Office applications like OWA and Teams.

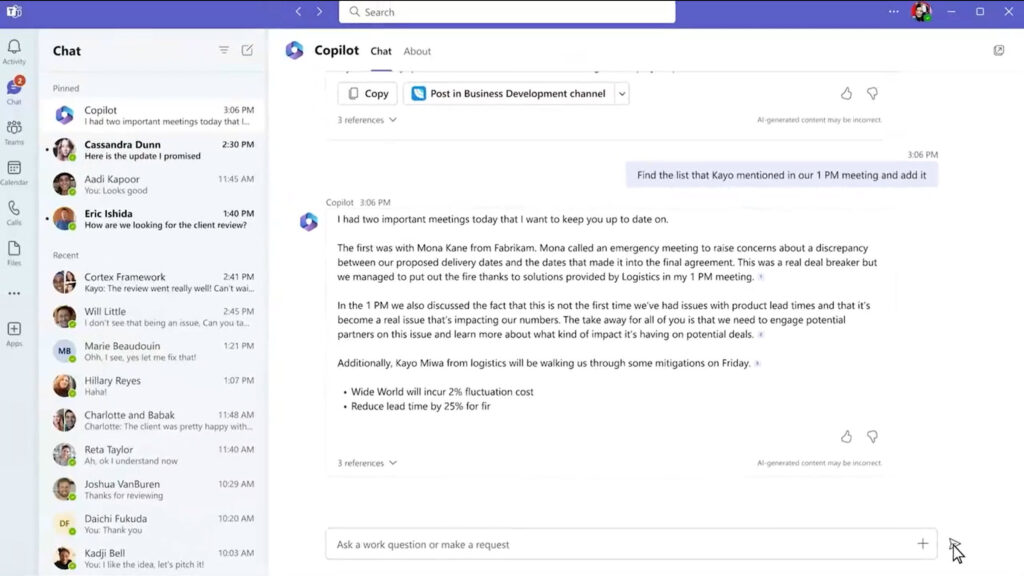

- Copilot options will differ across apps, reflecting the way each app works and the information available to the processing engine. For instance, Copilot will help people deal with a busy inbox in OWA and refine their writing in Word, tasks that require access to the user’s mailbox and a document respectively. On the other hand, generating a recap of what happened in a Teams meeting might need access to the meeting chat and transcript, as in the example shown in Figure 1. As you can see, Copilot in Teams works much like a chat with any other bot, so the interaction feels natural (although implementation details might change).

- Copilot uses Graph API requests to leverage the information people store in SharePoint Online, OneDrive for Business, Teams, and Exchange Online.

- The AI combines user prompts with data gathered from the Graph to respond with actions and/or text. Users can then work with Copilot to refine and enhance the initial response until they deem it acceptable.

That’s all goodness, but only if your information is stored in Microsoft 365 and you use the latest version of the Microsoft 365 subscription apps. Given Copilot’s dependency on the Graph to find the information that guides the AI, it seems likely that people who store documents on PCs, file servers, or third-party storage, keep their mailboxes on-premises, or use a perpetual version of Office will be out of luck.

Testing Copilot

We don’t know yet whether these assertions are true. They are among the questions Microsoft cannot answer yet because it doesn’t have production-quality software that supports the many languages in which Office and Microsoft 365 are available. Microsoft says that it plans to test Copilot with 20 customers before making the software more widely available.

Running a limited test is a sensible approach because there’s no doubt that people can do weird and wonderful things with documents and messages that even the Graph/GPT-4 combination might struggle to make sense of. For instance, Copilot might struggle to respond to a request to “create a project report about Contoso” if a Graph query can’t find relevant documents to use as a base or returns hundreds of documents that seem reasonably alike. Then again, a human would struggle to sort through that mess too.
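To illustrate how a Graph query surfaces documents, here is a minimal Python sketch that builds a request body for the Microsoft Search API (`POST https://graph.microsoft.com/v1.0/search/query`), looking for SharePoint Online and OneDrive for Business files that match a term like “Contoso.” It shows the general mechanism only, not how Copilot itself queries the Graph, which remains unknown. The helper name `build_drive_search` is hypothetical, and the sketch only builds the JSON; sending it requires a delegated access token for the signed-in user, whose permissions determine which results come back.

```python
import json

# Microsoft Search API endpoint in Microsoft Graph (v1.0).
SEARCH_URL = "https://graph.microsoft.com/v1.0/search/query"


def build_drive_search(query_string: str, size: int = 25) -> dict:
    """Build a request body (hypothetical helper) that searches SharePoint Online
    and OneDrive for Business files (driveItem entities) for query_string.
    Results are trimmed to what the signed-in user is allowed to access."""
    if not query_string.strip():
        raise ValueError("query_string must not be empty")
    return {
        "requests": [
            {
                "entityTypes": ["driveItem"],
                "query": {"queryString": query_string},
                "from": 0,     # offset of the first result to return
                "size": size,  # page size; a broad term may still match hundreds of files
            }
        ]
    }


if __name__ == "__main__":
    body = build_drive_search("Contoso project report")
    # POST this JSON to SEARCH_URL with an "Authorization: Bearer <token>" header.
    print(json.dumps(body, indent=2))
```

A broad query string like this is exactly the case where search can return many similar files, leaving whoever consumes the results, human or AI, to decide which ones matter.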

Hopefully, the test can identify the more egregious examples of user behavior that might lead to AI hallucination, and Microsoft can develop strategies to mitigate them and smooth Copilot’s operation. Making Copilot as accurate as possible seems to be a sine qua non for general availability.

Copilot Operations

I hope that the test also identifies operational issues like how to disable Copilot for all or some users. We’ve been down the path of dealing with user concerns when Graph requests surface documents in apps like Delve. Most of the problems tenants experienced with Delve were self-inflicted. Software is very good at doing what it’s told, and Delve duly found documents users had access to that they never knew existed. In many situations where tenants complained about Delve security breaches, the underlying reason was the SharePoint site permissions assigned by the very same tenant.

It’s reasonable to assume that users will approach Copilot with some trepidation. After all, who wants AI to take control? Some might even fear that the AI could do their job better than they can. This is illogical (as Mr. Spock might say) because the human mind will always be more inventive than Copilot, even if Copilot knows how to generate a pivot table in Excel or a snazzy PowerPoint presentation.

Microsoft has ways to disable item insights and people insights to block apps from showing users Graph-based recommendations for “documents they might be interested in” or organization details. It’s predictable that organizations (or maybe their users, represented in some countries by trade unions or workers’ councils) will have similar privacy concerns about Copilot. Hence, a similar way to control how Copilot can interact with the Graph is necessary.
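For comparison, here is a minimal Python sketch of how an administrator might build a request to change the item insights setting through the Microsoft Graph `insightsSettings` resource. Its `isEnabledInOrganization` property turns item insights on or off for the whole tenant, and its `disabledForGroup` property names a group whose members are excluded. The endpoint path is an assumption based on the v1.0 API and should be checked against current Microsoft documentation; the helper name and group ID are hypothetical, and no equivalent Copilot setting is implied.

```python
import json
from typing import Optional

# Assumed v1.0 endpoint for the tenant-wide item insights setting;
# verify against current Microsoft Graph documentation before use.
ITEM_INSIGHTS_URL = "https://graph.microsoft.com/v1.0/admin/people/itemInsights"


def build_item_insights_patch(enabled_in_org: bool,
                              disabled_for_group: Optional[str] = None) -> dict:
    """Build a PATCH body (hypothetical helper) for insightsSettings.

    enabled_in_org=False turns item insights off for everyone in the tenant;
    disabled_for_group (a group object ID) turns them off only for members
    of that group while leaving them on for everyone else."""
    body = {"isEnabledInOrganization": enabled_in_org}
    if disabled_for_group is not None:
        body["disabledForGroup"] = disabled_for_group
    return body


if __name__ == "__main__":
    # Hypothetical group ID: keep insights on, but exclude one group's members.
    patch = build_item_insights_patch(True, "00000000-0000-0000-0000-000000000000")
    print("PATCH", ITEM_INSIGHTS_URL)
    print(json.dumps(patch))
```

The group-based exclusion is the kind of “all or some users” control the Delve experience suggests organizations will also want for Copilot.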

Compliance is another hot area. For instance, should Microsoft 365 apps generate a special audit record when someone uses Copilot to create some content? Should the audit record capture details of the information Copilot extracted from the Graph and used in its interaction with the large language model? At this point, it’s hard to say because we don’t know how Copilot really works and what compliance controls are necessary.

Finally, it’s not clear what weighting Copilot assigns to individual documents and whether an organization can mark documents as the definitive source of truth on a topic so that Copilot selects text from those documents rather than from others located by its search. Alternatively, organizations might want to block some sites from Copilot to avoid the risk that sensitive information is selected by chance and included in a document.

Copilot Ethics

The ethics of using Copilot to create content need to be tested. I’m not worried about Copilot generating content based on text that the Graph finds in documents available to a user. After all, that’s the same as someone copying and pasting text from one document to another, a practice that’s existed since cut and paste became a thing.

I’m more concerned about the HR performance aspect, where an employee depends too heavily on Copilot to do their work. No one will complain when someone uses Copilot to generate an early draft of a document that they then revise, expand, and enhance. But what about an employee who uses Copilot to answer emails or generate documents and spreadsheets without adding any value to the AI-generated content? Is it acceptable for them to delegate their responsibilities in such a manner? Similarly, how will fellow employees feel about someone who obviously uses Copilot to generate much of their output, especially if that person is favored by management?

I don’t imagine that shirking work in such a way will be common. But it’s not unknown for people to seek an easy life. Direct management and coaching, coupled with user education covering effective and appropriate use of Copilot, will be important in achieving a balance between human creativity and AI-powered enhancement. It would be a sad world if people got into the habit of recycling content through Copilot and never bothered engaging their brains to generate anything original.

The Lock-in Factor

Microsoft very much wants Microsoft 365 customers to remain loyal. That’s business – no one likes to lose a paying customer. But some fear that Microsoft 365 is increasingly becoming a pit they cannot escape should they wish to move to another platform.

Three kinds of data exist inside Microsoft 365:

- Source data like messages and documents. This information is relatively easy to export and move to another platform for applications like Exchange Online and SharePoint Online. Things are more complicated in applications like Teams, where data is co-mingled (think of a Loop component in a Teams chat) or Planner (no API is available).

- Processed data like the digital twins stored in the Microsoft 365 substrate to make it easier for common services like Microsoft Search to run. There’s no need to export this data because it’s used exclusively for internal processing. Compliance records (an example of digital twins) generated by Teams, Yammer (Viva Engage), and Planner are available for eDiscovery.

- Derived data generated by AI processing. This includes the much smaller models used by features like suggested replies in Outlook. Copilot is a huge step forward in this area, and there’s no point in even considering taking the data elsewhere because the data is useless to any other platform. We do not know yet if any artifacts created by Copilot will be available for eDiscovery and, if so, in what format.

If an organization becomes hooked on Copilot, will it ever be able to move to another platform? I guess the answer is “it depends.” You can move the basic information in messages and documents to a new platform, but if that platform doesn’t offer the same kind of processing capabilities as available in Copilot (and integrated into the Office apps), then you’re out of luck. Users who have become accustomed to having Copilot generate the first draft of their documents will have to face the unique terror of not knowing how to start a blank document again.

The subliminal message is to keep everything within Microsoft 365 if you want to use advanced processing like that promised by Copilot.

Lots More Questions About Copilot

Many other questions exist that need answers before the widespread adoption of Copilot happens. Licensing is a fundamental question that will determine how quickly people use Copilot. I expect Microsoft to follow the approach seen with other features by having different service plans and licenses for Copilot. For instance, Office 365 E3 might include Copilot Plan 1 that allows use with the Microsoft 365 apps for enterprise (including Outlook and OWA), while the E5 product gets Copilot Plan 2 to expand coverage to Teams.

This is pure speculation on my part. I hope that Microsoft includes some Copilot capabilities in plans like Office 365 E3 to encourage widespread use, if only to emphasize how useful Copilot is at leveraging all that data that’s stored in Microsoft 365. We’ll know more later this year as Microsoft moves towards a public preview.

Microsoft naturally overstates the benefits of Copilot and the impact it might have on the way we work. That’s marketing. What’s true is that Copilot is a very interesting technology that could affect the way some/most/all Microsoft 365 tenants work. That is, if the organization allows Copilot in the door.

Copilot just told me it couldn’t continue with our conversation and that I had to choose another topic. It repeated the statement when I asked why. When I said it was curious that it would not explore the subject of truth, liberty, and justice further, it told me it had reached its limit and to choose another topic. It then censored our conversation, creating a summary of the points most useful for my inquiry but omitting my statements and summary… I attempted to save the conversation by selecting all, copying, and pasting it into the notebook and again into my documents folder as a new document. Only the Copilot summary can be located now. The original conversation and the familiarity being established were completely removed. This will most likely be deleted as well… I am one of the many shadow-banned, censored, permanently deleted, and blacklisted individuals standing up in this fight, again finding bookmark on my face. Well, shame, shame on Copilot and its creator… And again, shame on me. Just like people cannot handle the truth, AI implodes.

Interesting, but it screwed up my web browsing. My default browser is Chrome, but now any HTTP link opens Edge.

Is this another Microsoft ploy to get everyone to use Edge and Bing even though we don’t want them?

Very possibly…

This is one of the rare treatments of MS 365 Copilot on the net. Thanks! It is well balanced and well written!

Thomas Laudal / Assoc. Prof. UiS Business School, NORWAY

Will Copilot not work at all for users with on-premises mailboxes?

Given that content searches cannot process on-premises mailboxes because they have no access to that content, I can’t see how Microsoft 365 Copilot can use it either, so that might rule out using Copilot with Outlook. It might be possible for owners of on-premises mailboxes to use Copilot with the other apps. We will see in time.

I think you mean ‘Mr. Spock’, instead of ‘Dr. Spock’ 🙂

My company’s internal documents contain all kinds of proprietary and confidential information, so I’m concerned about that data being shared with Microsoft’s LLM. Does Microsoft address this? It seems like it would be a pretty widespread worry, but I don’t see it getting talked about…

The way I read it, the LLM is separate and kept separate from the Graph resources (your documents). The LLM is used to refine information extracted from the Graph, but I do not think that any of your documents will be ingested into the LLM. That would be a horrible breach of trust, IMHO.

I’m curious: how can the LLM refine information from Graph resources unless it has access to that information?

The way I think things work is this: the data is fetched from the Graph repositories (like SharePoint), grounded locally, and is then transmitted to the LLMs in the local Microsoft 365 data center (where Azure AI services run) and processed there before being returned for further grounding and then returned to the user. The data sent to the LLM is not kept there and is erased after processing.

This is the exact reason why I am looking to move our IT assets away from any Microsoft products.

Telemetry and AI intrusion into data, combined with a complete lack of transparency from Microsoft on this issue, make the regulatory and legal compliance risks simply too high. Add in the additional issues Tony brings up in this article and it is a nightmare waiting to happen.

If you cannot trust the OS endpoint behaviour, the entire security chain of trust is broken, and embedded AI that scans internal datastores and then potentially sends telemetry data back to Microsoft and third parties breaks that trust.

Me too! MS has proven to be an unreliable company when it comes to privacy. Just check your PC after booting. There are soooo many services running, all in the name of functionality, that it’s scary! Not to mention their insane drive to change everything just … because. Users struggle to cope with such a rate of change with no obvious benefit to them. Adding more features doesn’t necessarily make a product better, BUT it certainly makes it less secure (read: prone to bugs and errors)!

Hi Tony, is Copilot available for testing in Developer instances?

Not to my knowledge.