After deciding which data to ingest into Sentinel, the next step is to start the ingestion process. Ingesting networking data can happen in a few different ways, and it is important to choose an architecture that best fits your requirements. This article describes the different ingestion methods, explains how to choose the best method, and offers advice on filtering the ingested data.

Ingestion Methods

The available data connectors depend on the type of data being ingested. It is up to the vendor of the product to decide which capabilities they provide.

Typically, networking data is available in Syslog or CEF format, but some vendors also provide APIs to ingest the data. Syslog stands for ‘system logging protocol’ and is a standardized protocol for outputting logs. The Syslog message body is unstructured, meaning there are no predefined column names for the data it carries. Common Event Format (CEF) builds on Syslog, adding a normalized set of fields on top of the data.

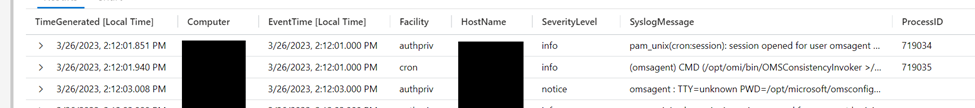

The images below show the differences between Syslog and CEF messages. Figure 1 shows Syslog messages. The bulk of the data is stored in the ‘SyslogMessage’ column. To get the data into separate columns, it must be extracted using KQL queries. Microsoft’s templates often include the necessary commands.

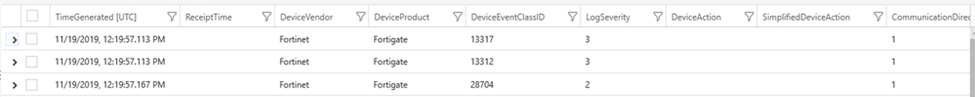

Figure 2 is an extract of the CommonSecurityLog table, where CEF logs are stored post-ingestion. There are multiple columns, such as DeviceAction or CommunicationDirection. Most of the useful data is inserted into the correct column, meaning it is not necessary to reformat the data with KQL, as is the case with Syslog.
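To make the difference between the two formats concrete, the following Python sketch splits a CEF message into its seven pipe-separated header fields and its key=value extension. The sample message, vendor name, and product name are invented for illustration, and escaped characters are not handled, so treat it as a simplified illustration rather than a complete parser.

```python
import re

# The seven header fields defined by the CEF format, in order.
CEF_HEADER_FIELDS = [
    "version", "device_vendor", "device_product", "device_version",
    "device_event_class_id", "name", "severity",
]

# Hypothetical sample message; vendor, product, and values are made up.
SAMPLE = (
    "Jan 10 12:00:00 fw01 CEF:0|ExampleVendor|ExampleFirewall|1.0|100|"
    "Connection denied|5|src=10.0.0.1 dst=10.0.0.2 act=Denied msg=Blocked by policy"
)


def parse_cef(line: str) -> dict:
    """Split a CEF message into header fields and extension key/value pairs.

    Simplified: escaped pipes and escaped equals signs are not handled.
    """
    start = line.find("CEF:")
    if start == -1:
        raise ValueError("not a CEF message")
    # Everything before "CEF:" is the Syslog header (timestamp, host).
    parts = line[start + len("CEF:"):].split("|", 7)
    if len(parts) < 7:
        raise ValueError("incomplete CEF header")
    record = dict(zip(CEF_HEADER_FIELDS, parts[:7]))
    extension = parts[7] if len(parts) > 7 else ""
    # A value runs until the next " key=" token or the end of the line,
    # which allows values that contain spaces.
    for key, value in re.findall(r"(\w+)=(.*?)(?=\s+\w+=|$)", extension):
        record[key] = value
    return record


if __name__ == "__main__":
    for field, value in parse_cef(SAMPLE).items():
        print(f"{field}: {value}")
```

With CEF, this kind of splitting is possible because the field layout is predefined. A plain Syslog message offers no such layout, which is why the equivalent extraction has to be written per product in KQL.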

To ingest Syslog or CEF data into Sentinel, the networking products forward their data to a Linux host that needs to be configured. On the Linux machine (the forwarding host), you must install the Azure Monitor Agent, which is responsible for sending the data to Sentinel. More details about the installation and configuration are available on Microsoft Learn.

Another ingestion method is Logstash. Logstash is part of the Elastic Stack and can receive data from multiple sources, update and filter it, and then send it to its final destination. Microsoft built a plug-in for Logstash to send data to Sentinel. Logstash replaces the Linux forwarder host and the Azure Monitor Agent. Its advantages include improved filtering and support for various inputs and outputs, such as file-based sources, APIs, and many more. The advantages of Logstash are covered in more detail later.

Newer networking products, primarily cloud-based, support API-based integration using either a pull or a push system. Both are good options for ingesting networking data. My experience is that a push system (meaning the firewall pushes data to an automation, like an Azure Function) is better suited to handling large datasets than a pull system. A pull system is more prone to losing data if an issue arises, as it is more difficult to build a retry system.
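The retry logic that a pull system needs can be sketched as follows. This is a minimal Python illustration, assuming a hypothetical `fetch_page` function that returns a batch of events plus a cursor for the next page; no real vendor API is implied. The cursor only advances after a page has been fetched successfully, so a transient failure does not skip data.

```python
import time
from typing import Callable, Iterator, List, Optional, Tuple

# Hypothetical signature: takes a cursor (None for the first page) and
# returns (events, next_cursor), where next_cursor is None on the last page.
FetchPage = Callable[[Optional[str]], Tuple[List[dict], Optional[str]]]


def pull_with_retry(
    fetch_page: FetchPage,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[dict]:
    """Yield all events, retrying each page with exponential backoff."""
    cursor: Optional[str] = None
    while True:
        for attempt in range(1, max_attempts + 1):
            try:
                events, next_cursor = fetch_page(cursor)
                break  # page fetched, stop retrying
            except ConnectionError:
                if attempt == max_attempts:
                    raise  # give up; the caller can resume from the same cursor
                # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
                sleep(base_delay * 2 ** (attempt - 1))
        yield from events
        if next_cursor is None:
            return
        cursor = next_cursor
```

In a real collector the cursor would also need to be persisted between runs, which is part of what makes a reliable pull system harder to build than a push system.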

While Sentinel’s content hub boasts many templates, there is no need to limit yourself to that list. Just because a product isn’t on the list doesn’t mean it is not supported. If a firewall supports Syslog/CEF or an API-based output, its data can be ingested into Sentinel. The ingestion type used depends on the architecture of the target environment.

Filtering Possibilities

When ingesting data into Sentinel, there are two filtering options: Data Collection Rules and Logstash.

Data Collection Rules are an Azure-native feature that lets you write a KQL query to dictate which data should be ingested and which should be ignored. Filtering with Data Collection Rules is done using transformations. A simple transformation is shown below. It filters out all events that were denied by the appliance.

source | where DeviceAction != 'Denied'

Logstash has its own built-in filtering, which is controlled through a configuration file. The filtering is more complicated to set up, but there are examples available to help.

The advantage of Logstash is that it filters the data before it is sent to Azure. Data Collection Rules, by contrast, filter the data once it arrives in Azure. When dealing with large volumes of data, this difference can have a big impact on network demand. Using Logstash and filtering earlier in the chain decreases the strain on the connection.
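The effect of filtering before sending can be estimated with a small calculation. The Python sketch below applies the same condition as the transformation shown earlier (keep everything where DeviceAction is not 'Denied') and compares payload sizes before and after. The JSON payload size is only a rough stand-in for real traffic, and the function names are hypothetical.

```python
import json
from typing import List


def keep_event(event: dict) -> bool:
    """Same condition as the transformation: DeviceAction != 'Denied'."""
    return event.get("DeviceAction") != "Denied"


def payload_bytes(events: List[dict]) -> int:
    """Approximate size on the wire as the UTF-8 length of the JSON payload."""
    return len(json.dumps(events).encode("utf-8"))


def bandwidth_saved(events: List[dict]) -> float:
    """Fraction of bytes that never leave the network when filtering at the source."""
    before = payload_bytes(events)
    after = payload_bytes([e for e in events if keep_event(e)])
    return 1 - after / before if before else 0.0


if __name__ == "__main__":
    # Invented sample: three denied connections and one allowed connection.
    sample = [
        {"DeviceAction": "Denied", "SourceIP": "10.0.0.1"},
        {"DeviceAction": "Denied", "SourceIP": "10.0.0.2"},
        {"DeviceAction": "Denied", "SourceIP": "10.0.0.3"},
        {"DeviceAction": "Allowed", "SourceIP": "10.0.0.4"},
    ]
    print(f"Share of bytes filtered out before sending: {bandwidth_saved(sample):.0%}")
```

With a Data Collection Rule, the full payload still crosses the connection before the filter is applied. With filtering on the forwarder, only the reduced payload does.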

Choose the Correct Ingestion Method

When choosing the correct ingestion method, there are a couple of questions you should ask yourself.

- Syslog or CEF

  - CEF is built on top of Syslog but adds a predefined format, which makes it much simpler to use KQL to surface data. Whether you can use Syslog or CEF depends on the vendor of the appliance (firewall, switch…) from which you want to ingest data. If possible, I recommend using CEF.

- Azure Monitor Agent or Logstash

  - Once the log format is decided, you should decide how to get the logs into Sentinel. For that, you can use a Linux forwarder with the Azure Monitor Agent or a Linux forwarder with Logstash installed. When making this decision, it is important to think ahead and future-proof the architecture. Think about the logs that might need to be ingested later. If the scope is limited to a product that doesn’t require much filtering, the Azure Monitor Agent is sufficient. The advantage of Logstash is that the filtering happens before data is sent over the internet, which can drastically decrease the required bandwidth.

  - I typically default to Logstash as it offers a more future-proof solution. Logstash adds some complexity, but that complexity is limited compared to the added value it provides.
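The two questions above can be summarized as a small decision helper. This Python sketch is a deliberate simplification of the reasoning in the list; the function and parameter names are hypothetical, and a real decision should also weigh the environment's architecture.

```python
from typing import Tuple


def recommend_ingestion(
    supports_cef: bool,
    needs_heavy_filtering: bool,
    more_sources_expected: bool,
) -> Tuple[str, str]:
    """Return (log format, forwarder) following the two questions above."""
    # Question 1: prefer CEF whenever the appliance vendor supports it.
    log_format = "CEF" if supports_cef else "Syslog"
    # Question 2: the Azure Monitor Agent is sufficient for a limited scope
    # with little filtering; otherwise Logstash is the more future-proof choice.
    if needs_heavy_filtering or more_sources_expected:
        forwarder = "Logstash"
    else:
        forwarder = "Azure Monitor Agent"
    return log_format, forwarder


if __name__ == "__main__":
    print(recommend_ingestion(supports_cef=True,
                              needs_heavy_filtering=True,
                              more_sources_expected=False))
```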

Each implementation is different, and it is important to consider each situation individually. As an example, my team recently worked on an engagement where the customer used Palo Alto Panorama. Panorama is Palo Alto’s log collector that centrally stores all logs from all products. This is a big advantage because it means we didn’t need to collect logs from each individual firewall. Panorama is a cloud product with an API integration. For this engagement, logs were collected using an Azure Function which forwarded the data into Sentinel using the API.

Another engagement did not include the Panorama component. To collect data, the Palo Alto firewalls forwarded their CEF logs to a Logstash appliance.

Microsoft lists its data connectors in the content hub. While it is recommended to use the Microsoft data connector because it is supported, it is important to understand its limitations and capabilities and to check the available alternatives. As an example, if a data connector uses Syslog but the product supports CEF, I do not recommend using that connector. In this case, I use CEF as it offers many more features than Syslog.

Getting Started

When considering the ingestion of data from a new product, I recommend checking whether Microsoft has a template data connector available in the Content Hub. You can use the connector to verify what technology it uses and what its capabilities are. Based on that research, validate whether you need to use a custom connector. If you are just starting out with Sentinel, I recommend sticking with the built-in data connectors to avoid complexity. Only once you have familiarized yourself with the product and are aware of all its capabilities should you consider customizing connectors.

Thank you for this article, I have been having difficulty configuring syslog to collect logs from my Cisco Firewall, please can you point me to an article that helps with configuring a syslog to receive data.

Thank you.