Generative AI is everywhere right now; AI applications claim to improve output and save time or effort by generating content and synthesizing information. The proliferation of these tools comes with an increased risk of organizational data leakage. Many generative AI applications use web clients and can be used without authentication. It is important to ensure that when people work with corporate data they use services that are trusted by their company, and do not copy sensitive data to unauthorized applications.

When I say “unauthorized applications,” I do not suggest that the applications are unsafe. “Unauthorized applications” refers to applications that an organization has not approved for company use, including applications from trusted vendors. There are many reasons why an organization may want to prevent employees from using a tool that is widely trusted – for example, because of data regulations for their industry, GDPR readiness, or simply because the organization wants to control which apps employees can use.

This article explores recommendations to secure Microsoft 365 data from unauthorized generative AI applications. Some solutions require a premium or add-on license. For more information, see Microsoft’s licensing guidance: Microsoft 365, Office 365, Enterprise Mobility + Security, and Windows 11 Subscriptions for Enterprises.

Stop End Users Granting Consent to Third-Party Apps

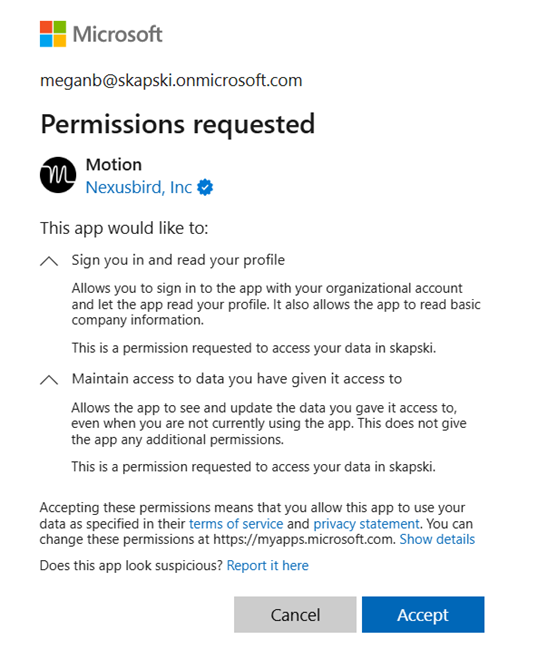

By default, unless an organization has Security Defaults enabled, users can consent to apps accessing company data on their behalf. That means regular, non-admin users can give a third-party AI solution access to their own data. Figure 1 shows the end-user experience for granting consent to a third-party app in Microsoft Entra.

If Security Defaults are not enabled for a tenant, consider enabling them, or disabling the default ability of users to create application registrations or consent to applications. A separate article explores Security Defaults and whether you should use them.
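For administrators who prefer to script the second option, here is a minimal sketch that builds a Microsoft Graph request against the tenant's authorization policy. It assumes you already hold an access token with the Policy.ReadWrite.Authorization permission. The function names are my own, and the request is only prepared, not sent. Treat it as a starting point to test in a non-production tenant rather than a definitive implementation.

```python
import json
import urllib.request

# Microsoft Graph endpoint for the tenant-wide authorization policy.
GRAPH_URL = "https://graph.microsoft.com/v1.0/policies/authorizationPolicy"


def build_consent_lockdown_body(block_app_registration: bool = True) -> dict:
    """Build a PATCH body that removes the default user consent ability.

    Hypothetical helper name; the property names are those of the Graph
    authorizationPolicy resource.
    """
    permissions = {
        # An empty list assigns no permission grant policy to users,
        # so regular users can no longer consent to apps themselves.
        "permissionGrantPoliciesAssigned": [],
    }
    if block_app_registration:
        # Stop non-admin users from creating application registrations.
        permissions["allowedToCreateApps"] = False
    return {"defaultUserRolePermissions": permissions}


def build_request(access_token: str, body: dict) -> urllib.request.Request:
    """Prepare (but do not send) the PATCH request to Microsoft Graph."""
    return urllib.request.Request(
        GRAPH_URL,
        data=json.dumps(body).encode("utf-8"),
        method="PATCH",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Print the body for review before applying anything.
    print(json.dumps(build_consent_lockdown_body(), indent=2))
```

To apply the change, pass the prepared request to `urllib.request.urlopen`. Review the printed body first, because removing consent rights affects every non-admin user in the tenant.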

Managing Endpoints and Cloud Applications to Prevent Data Leakage in Generative AI Applications

Microsoft 365 administrators should consider managing devices with Microsoft Intune and using Defender for Endpoint, Defender for Cloud Apps, and Endpoint DLP to audit or prevent activity in generative AI applications that have not been vetted by the organization. In addition to the benefits described in this article, Intune-onboarded devices integrate more easily with these solutions: administrators can onboard devices into Endpoint DLP and enable continuous reporting in Defender for Cloud Apps from one central location for device management, without needing to configure a log collector or Secure Web Gateway.

Audit or Block Access to Unauthorized Generative AI Applications

Microsoft Defender for Endpoint and Defender for Cloud Apps work together to audit or block access to generative AI applications without requiring devices to connect to a corporate network, VPN, or jump box to filter network traffic.

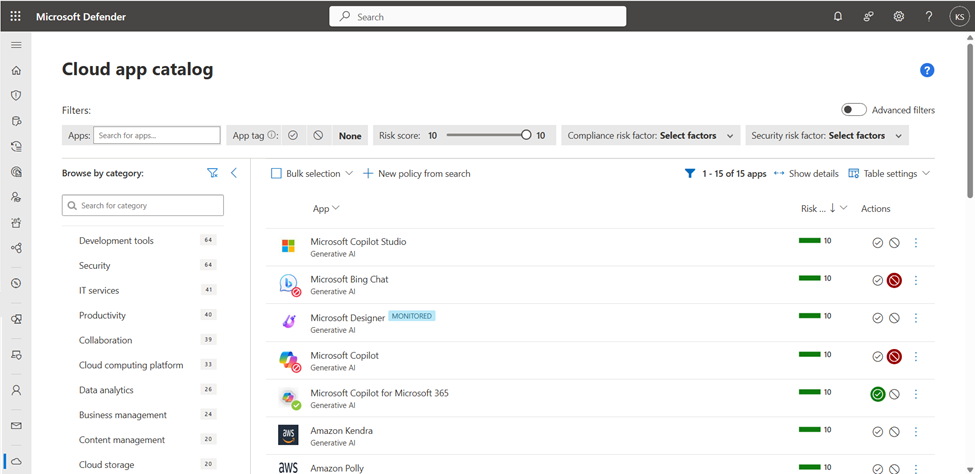

Figure 2 shows the Cloud App Catalog in Defender for Cloud Apps, which lists over 31,000 discoverable cloud apps and rates their enterprise risk level based on regulatory certification, industry standards, and Microsoft’s internal best practices – e.g., the app was recently breached, does not have a trusted certificate, or is missing HTTP security headers. Score metrics can also be customized based on an organization’s specific needs. Apps can be filtered by category, including Generative AI.

Again, organizations may choose to block or monitor apps for reasons completely unrelated to the risk level of the app. In this example, I’ve monitored Microsoft Designer (perhaps to understand how often it is used and who uses it) and blocked Bing Chat and Microsoft Copilot (they’re both “Copilot” now, but are accessed via different URLs). Bing Chat/Microsoft Copilot does not keep data within the EU Data Boundary, whereas Copilot for Microsoft 365 does. For organizations that must abide by GDPR, this is a realistic use case.

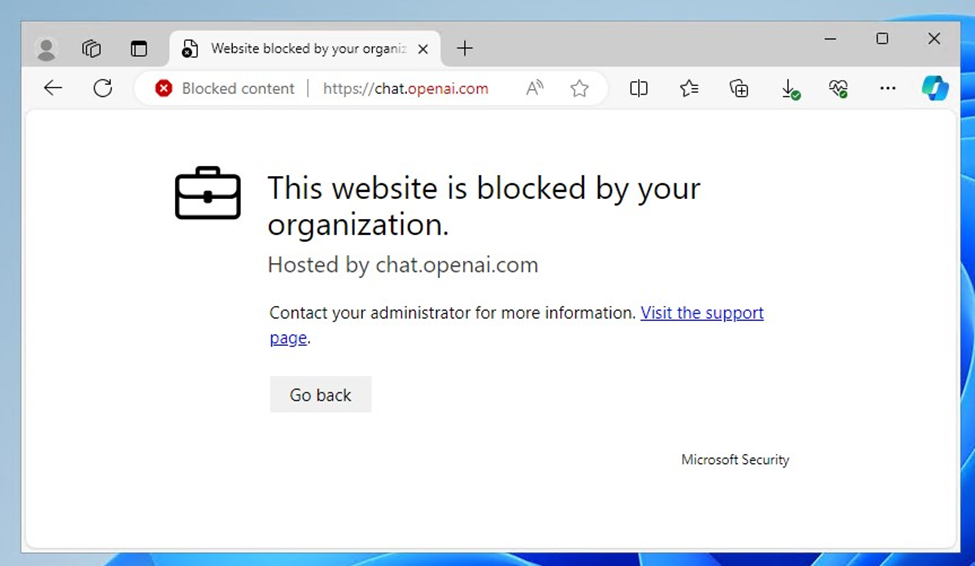

Within the Cloud App Catalog, administrators can tag applications as monitored, sanctioned (allowed) or unsanctioned (blocked). Figure 2 shows a variety of tags. When an application is tagged as unsanctioned, users see the experience shown in Figure 3.

With new cloud applications launching on a regular basis, one might get the sense that blocking unauthorized apps is like playing a game of “Whac-a-Mole”, where as soon as you’ve hammered one into place, another pops up just as quickly. Thankfully, it is not necessary to manually select apps and tag them.

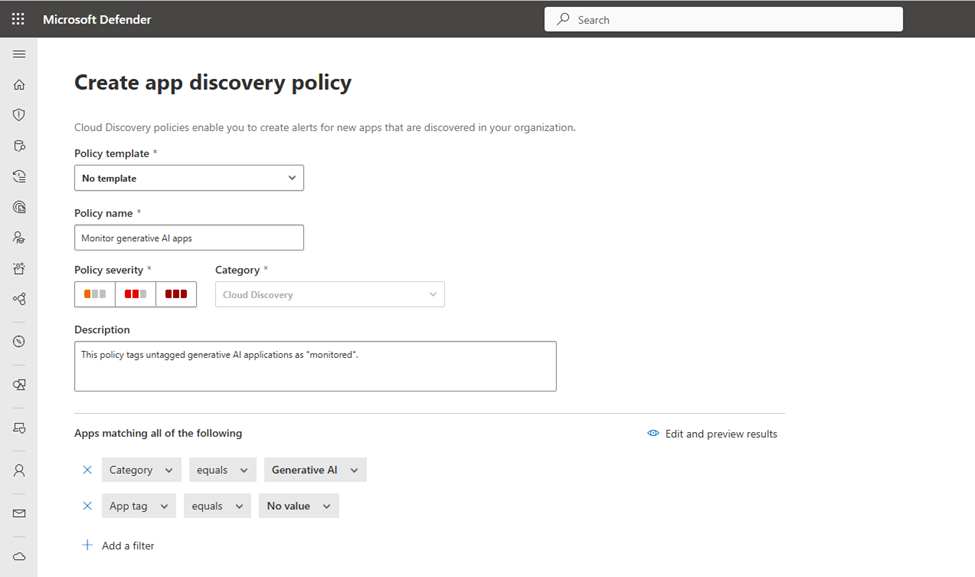

To create a new app discovery policy to automatically discover and tag generative AI apps, open Defender for Cloud Apps > Policy Management (under “Policies”) and choose Create. In the policy, under “Apps matching all of the following”, configure “Category equals Generative AI” and “App tag equals No value” (Figure 4).

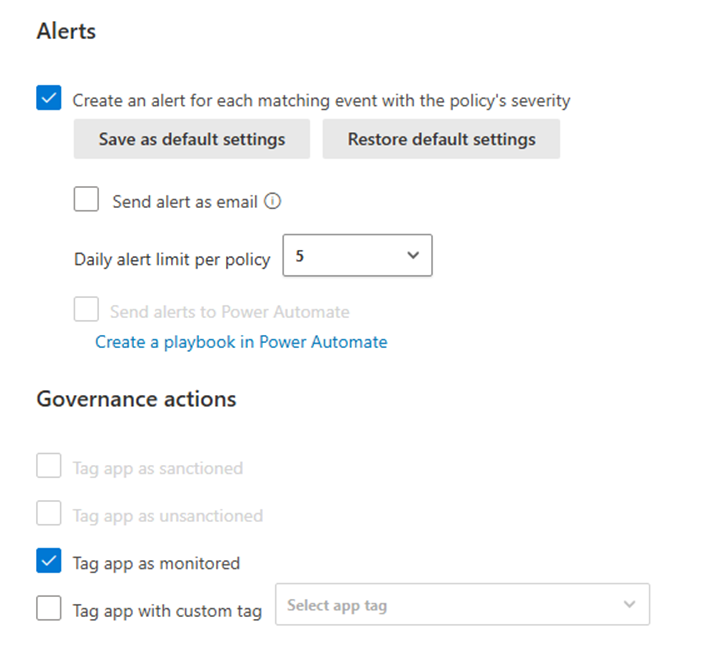

Figure 5 shows that administrators have the option to create alerts for each event matching the policy’s severity and configure a daily alert limit (between 5 and 1000). Alerts for newly discovered generative AI applications can be emailed to administrators or sent to Power Automate to trigger a workflow (for example, to send alerts to an IT ticketing system).

Under “Governance actions”, select “Tag app as monitored” – or “Tag app as unsanctioned” depending on whether you want to automatically monitor or block generative AI apps that have not been manually tagged.
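To make the policy logic concrete, here is a small Python model of what the discovery policy does. It is not the Defender for Cloud Apps API. The `DiscoveredApp` class, the `apply_discovery_policy` function, and the "ExampleChat" and "ExampleCRM" app names are all hypothetical. The sketch only shows how the two filter conditions combine with the chosen governance action.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DiscoveredApp:
    """Illustrative stand-in for an entry in the Cloud App Catalog."""
    name: str
    category: str
    tag: Optional[str] = None  # None models "App tag equals No value"


def apply_discovery_policy(apps: List[DiscoveredApp],
                           governance_tag: str = "monitored") -> List[str]:
    """Tag every untagged Generative AI app and return the names changed."""
    if governance_tag not in ("monitored", "unsanctioned"):
        raise ValueError("governance_tag must be 'monitored' or 'unsanctioned'")
    newly_tagged = []
    for app in apps:
        # Both conditions must hold ("Apps matching all of the following").
        if app.category == "Generative AI" and app.tag is None:
            app.tag = governance_tag
            newly_tagged.append(app.name)
    return newly_tagged


if __name__ == "__main__":
    catalog = [
        DiscoveredApp("Microsoft Designer", "Generative AI", "monitored"),
        DiscoveredApp("ExampleChat", "Generative AI"),  # hypothetical new app
        DiscoveredApp("ExampleCRM", "CRM"),             # hypothetical, other category
    ]
    print(apply_discovery_policy(catalog, "unsanctioned"))
```

The important detail is the "No value" condition: apps that an administrator has already tagged by hand are left alone, so the automatic policy never overrides a deliberate decision.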

When endpoints are managed by Defender, continuous reports are available through Cloud Discovery. The Cloud Discovery dashboard highlights all newly discovered apps (for the last 90 days). To review newly discovered applications, navigate to the Discovered Apps page. Interestingly, while Discovered Apps has a very similar interface to the Cloud App Catalog, the Generative AI category filter is not available here.

To export all details from the Discovered Apps page, choose Export > Export data. For a report that simply lists the domains of newly discovered applications, choose Export > Export domains.
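Once you have the domain export, a short script can compare it with the domains your organization has approved. The sketch below assumes the export contains one domain per line, which may not match the real file layout, so adjust the parsing to whatever the export actually contains. The function name and the example domains are hypothetical.

```python
from typing import Iterable, List


def unapproved_domains(exported_text: str, approved: Iterable[str]) -> List[str]:
    """Return exported domains that are not covered by the approved list.

    Assumes one domain per line in exported_text (an assumption about
    the export format, not a documented guarantee).
    """
    approved_lower = {d.lower() for d in approved}
    seen = set()
    result = []
    for line in exported_text.splitlines():
        domain = line.strip().lower()
        if not domain or domain in seen:
            continue  # skip blank lines and duplicates
        seen.add(domain)
        # A domain is covered if it equals an approved domain
        # or is a subdomain of one.
        covered = any(
            domain == a or domain.endswith("." + a) for a in approved_lower
        )
        if not covered:
            result.append(domain)
    return result


if __name__ == "__main__":
    export = "copilot.example.com\nchat.example.net\n\nCHAT.example.net\n"
    print(unapproved_domains(export, {"example.com"}))
```

A list like this is a convenient input for a periodic review, where each unapproved domain is either sanctioned, monitored, or blocked in the Cloud App Catalog.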

Block Copying, Pasting, and Uploading Organizational Data

Without governance controls in place, employees can copy and paste or upload company data into web applications. Blocking copying and pasting altogether is counterproductive; the best approach is to selectively block copying and pasting sensitive data.

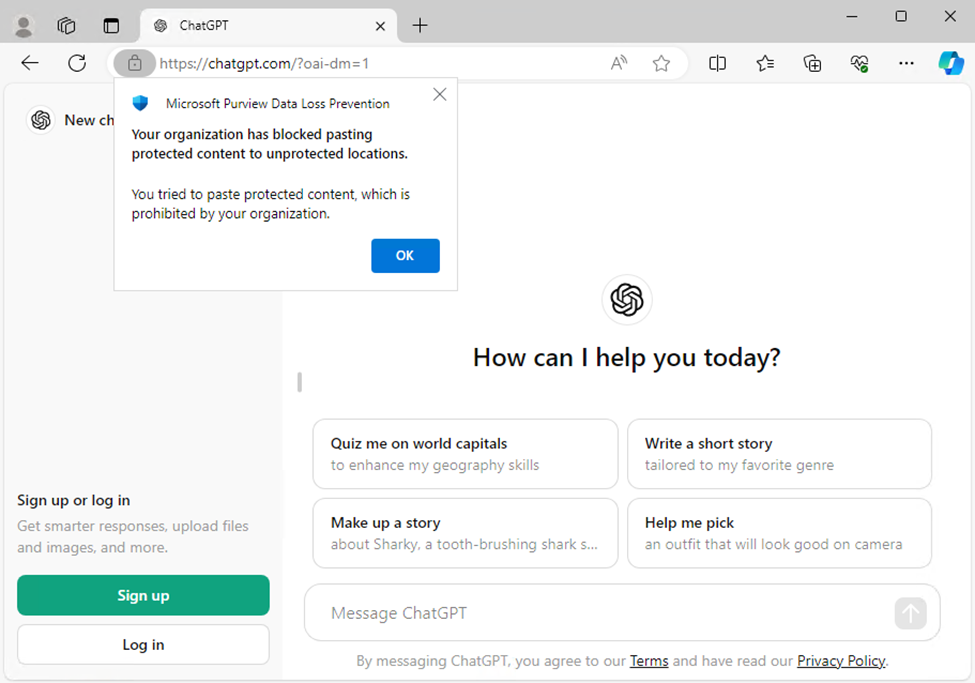

Microsoft Purview DLP combined with Defender for Endpoint audits and prevents the transfer of organizational data to unauthorized locations (e.g., websites, PC and Mac desktop applications, removable storage) without the need for additional software on a device. Purview DLP can restrict sharing to unallowed cloud apps and services, such as unauthorized generative AI apps (Figure 6).

While Microsoft Edge natively supports Purview DLP, Chrome and Firefox require the Purview extension; configure the extension in Intune, and block access to unsupported browsers (such as Brave or Opera) which may be used to circumvent DLP policies.

To install the Purview extension for Chrome or Firefox on Intune-managed Windows devices, create configuration profiles in Intune. The instructions are explained in detail for Chrome and for Firefox in Microsoft’s documentation.

Blocking access to organizational data in unsupported browsers (for example, by using conditional access policies in Entra ID) is not enough to secure your data. Without the Purview extension installed, organizational data can still be pasted into unsupported browsers; blocking the browsers entirely is recommended. On modern Windows devices, this can be achieved through Windows Defender Application Control.

Defender for Cloud Apps Conditional Access App Control is another option for securing cloud data. Session policies block copying and pasting via supported applications. Personally, I believe that Endpoint DLP is a stronger defense than Defender for Cloud Apps Conditional Access App Control; while Defender for Cloud Apps provides governance over data interactions within cloud applications, Endpoint DLP takes a device-centric approach, focusing on the protection of sensitive data stored on endpoints, irrespective of the application in use.

Control Copying Corporate Data to Personal Cloud Services or Removable Storage

To prevent copying corporate data to personal cloud services or removable storage, administrators can implement App Protection Policies in Intune, which allow organizations to control how users share and save data.

Endpoint DLP extends these protections by monitoring and controlling data activity on devices, detecting and blocking unauthorized attempts to copy or transfer sensitive data. App protection policies are primarily focused on securing data within mobile applications, while Endpoint DLP offers control over the movement of sensitive data across all endpoints.

Prevent Screenshots of Sensitive Data

Screenshots can be blocked on mobile devices using device configuration profiles in Intune. Unfortunately, Microsoft doesn’t have an equally simple option for blocking screenshots on desktops, and no technical control can stop someone photographing a screen with a smartphone camera.

Administrators can block screen captures in desktop operating systems by implementing Purview Information Protection sensitivity labels. With sensitivity labels, documents, sites, and emails can be encrypted either manually or when sensitive content is detected. In the encryption settings for sensitivity labels, administrators can configure usage rights for labeled content. Screenshots are blocked by removing the Copy (or “EXTRACT”) usage right.

I recommend using this approach sparingly if your organization uses or plans to use Copilot for Microsoft 365 with sensitive documents; without the View and Copy usage rights, Copilot for Microsoft 365’s functionality is limited.
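The trade-off between blocking screenshots and keeping Copilot useful can be expressed as a small check. The following Python sketch models only the two statements made above: removing EXTRACT blocks screen captures, and Copilot needs both VIEW and EXTRACT. The function name is hypothetical, and the model deliberately ignores other usage rights and broader rights such as owner-level access.

```python
from typing import Dict, Iterable


def evaluate_label(usage_rights: Iterable[str]) -> Dict[str, bool]:
    """Summarize the effect of a label's usage rights for a given user.

    Simplified model: only VIEW and EXTRACT (shown as "Copy" in the
    admin interface) are considered.
    """
    rights = {r.upper() for r in usage_rights}
    can_extract = "EXTRACT" in rights
    return {
        # Screen captures are blocked when the Copy/EXTRACT right is absent.
        "screenshots_blocked": not can_extract,
        # Copilot for Microsoft 365 needs both rights to work with the content.
        "copilot_fully_usable": "VIEW" in rights and can_extract,
    }


if __name__ == "__main__":
    print(evaluate_label({"VIEW", "EDIT"}))
    print(evaluate_label({"VIEW", "EXTRACT"}))
```

In this model the two outcomes are mutually exclusive: a label that blocks screenshots for a user also limits what Copilot can do for that user. That is why the setting is best reserved for the most sensitive content.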

Also, keep in mind that users can take photos of their screens if they really want to bypass security controls.

Safeguarding Corporate Data in the Age of AI

In the age of AI – specifically, generative AI – it is more important than ever to use information security and protection tools to safeguard corporate data. It is up to Microsoft 365 administrators and the organizations they manage to implement policies that best serve them. Be careful not to be so restrictive that productivity suffers.

Hi,

I want to set up a pop-up screen on all AI sites that requires users to read and accept the policy guidelines before they can use the sites.

I have no idea how you could do this, but have fun trying and do let us know if you succeed.